Introduction to JavaScript Speech Recognition

Speech recognition has rapidly transformed web application interfaces, ushering in a new era of hands-free, accessible, and intuitive user experiences. With JavaScript speech recognition, developers can empower their apps to understand spoken commands, transcribe speech to text, and provide features previously reserved for native platforms. As digital accessibility and voice-driven workflows grow in importance, JavaScript’s ability to interface with the Web Speech API is increasingly vital for modern web development.

The Web Speech API, with expanding browser support in 2025, enables seamless integration of both speech-to-text (recognition) and text-to-speech (synthesis) in JavaScript applications. Whether for voice commands, dictation, or accessibility enhancements, JavaScript speech recognition is a cornerstone of next-generation web applications.

Understanding the Web Speech API for JavaScript Speech Recognition

The Web Speech API provides web developers with powerful interfaces for both recognizing speech (SpeechRecognition) and generating speech (SpeechSynthesis). With these tools, voice-driven web experiences are more attainable than ever. For developers looking to add real-time communication features alongside speech recognition, integrating a JavaScript video and audio calling SDK can further enhance interactive capabilities.

What is the Web Speech API?

The Web Speech API is a specification developed in a W3C Community Group (it is not a formal W3C Recommendation) that brings speech recognition and synthesis to web browsers via JavaScript. It comprises two main interfaces:

- SpeechRecognition: Converts spoken language into text in real time.

- SpeechSynthesis: Converts text into spoken audio.
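Because the recognition constructor often exists only under a prefixed name, it helps to check which of these two interfaces the current browser actually exposes. This is a minimal sketch: `detectSpeechSupport` is a hypothetical helper name, and the `globalObj` parameter (normally `window`) exists only so the check can run outside a browser.

```javascript
// Hypothetical helper: report which Web Speech interfaces are available.
// Pass `window` in a page; any object works for testing.
function detectSpeechSupport(globalObj) {
  // The recognition constructor is often exposed only with a `webkit` prefix.
  const Recognition =
    globalObj.SpeechRecognition || globalObj.webkitSpeechRecognition || null;

  // Synthesis is a ready-made object (speechSynthesis) plus an utterance constructor.
  const hasSynthesis =
    typeof globalObj.speechSynthesis !== "undefined" &&
    typeof globalObj.SpeechSynthesisUtterance === "function";

  return { Recognition, hasSynthesis };
}

// In a page:
// const { Recognition, hasSynthesis } = detectSpeechSupport(window);
```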

In addition to these, developers interested in building voice-enabled chat or conferencing applications can explore a robust Voice SDK to power live audio rooms and collaborative features.

SpeechRecognition vs SpeechSynthesis

| Feature | SpeechRecognition | SpeechSynthesis |
|---|---|---|
| Purpose | Speech-to-text | Text-to-speech |
| Main Use Cases | Dictation, voice commands | Accessibility, narration |
| Browser Interface | window.SpeechRecognition constructor (often prefixed as window.webkitSpeechRecognition) | window.speechSynthesis object |
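To see the SpeechSynthesis column in action, here is a minimal text-to-speech sketch. `speakText` is a hypothetical helper; it relies only on the standard `speak()` and `cancel()` methods and the `SpeechSynthesisUtterance` constructor, which are passed in as parameters (normally `window.speechSynthesis` and `window.SpeechSynthesisUtterance`) so the logic can be exercised without a browser.

```javascript
// Hypothetical helper: speak a piece of text with SpeechSynthesis.
function speakText(text, synth, Utterance, lang = "en-US") {
  const utterance = new Utterance(text); // the text to be spoken
  utterance.lang = lang;                 // language hint used to pick a voice
  synth.cancel();                        // drop anything still queued
  synth.speak(utterance);                // queue this utterance for playback
  return utterance;
}

// In a page:
// speakText("Recognition complete", window.speechSynthesis, window.SpeechSynthesisUtterance);
```

Calling `cancel()` first is a design choice: a new prompt interrupts any queued speech instead of waiting behind it.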

For projects requiring both voice recognition and real-time communication, combining the Web Speech API with a JavaScript video and audio calling SDK can provide a seamless user experience.

Browser Compatibility and Limitations

As of 2025, Chrome, Edge, and recent versions of Safari support the SpeechRecognition interface, often only under the prefixed name webkitSpeechRecognition. Firefox supports SpeechSynthesis but does not enable SpeechRecognition by default, and support on some mobile browsers is partial or missing. Always check current compatibility tables before implementation. If your application requires fallback options or additional voice features, consider integrating a Voice SDK for broader compatibility.

API Architecture Overview

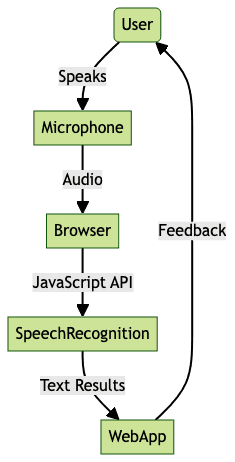

How JavaScript Speech Recognition Works

JavaScript speech recognition leverages the SpeechRecognition interface to convert live spoken input into text, enabling real-time or command-based interactions. For applications that need to support both speech recognition and real-time communication, using a JavaScript video and audio calling SDK can streamline development.

High-Level Workflow

- User grants microphone access

- SpeechRecognition captures audio input

- Audio is processed (locally or in the cloud, depending on browser implementation)

- Recognized text is delivered to the JavaScript application
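The workflow above can be wrapped in a Promise so that calling code simply awaits the recognized text. This is a sketch under a few assumptions: `recognizeOnce` is a hypothetical helper, `Recognition` is the constructor obtained from `window.SpeechRecognition || window.webkitSpeechRecognition`, and only the first final result is returned.

```javascript
// Hypothetical helper: run one recognition session and resolve with its text.
function recognizeOnce(Recognition, lang = "en-US") {
  return new Promise((resolve, reject) => {
    const recognition = new Recognition();
    recognition.lang = lang;
    recognition.interimResults = false; // deliver only final text
    recognition.maxAlternatives = 1;

    // Step 4: recognized text is delivered to the application.
    recognition.onresult = (event) => {
      resolve(event.results[0][0].transcript);
    };
    // Errors such as "not-allowed" (permission denied) reject the Promise.
    recognition.onerror = (event) => {
      reject(new Error(event.error));
    };
    // A session can end without any result (for example, silence).
    // Rejecting an already-resolved Promise is a no-op, so this is safe.
    recognition.onend = () => {
      reject(new Error("no-result"));
    };

    // Steps 1-3: start() triggers the microphone prompt if needed,
    // then the browser captures and processes the audio.
    recognition.start();
  });
}
```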

If you want to add phone call capabilities to your web app alongside speech recognition, integrating a phone call API can be a practical solution.

SpeechRecognition Interface Explained

The SpeechRecognition interface (or webkitSpeechRecognition) is the core object for speech-to-text in JavaScript. It exposes properties and events for controlling the recognition process, handling results, and managing errors. For developers aiming to embed video communication features, an embed video calling SDK can be easily integrated with speech recognition workflows.

Permissions and User Consent

Modern browsers require explicit user permission to access the microphone. The page must run in a secure context (served over HTTPS, or from localhost during development), and the user is prompted to allow or deny access. This is a critical privacy control. For secure and scalable video conferencing, consider leveraging a Video Calling API that complements your speech recognition features.

Privacy & Security Concerns

- Audio data may be sent to cloud services for processing (browser-dependent)

- Always inform users when speech data is being captured

- Never store or transmit speech data without user consent
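One way to honor the second point is to tie a visible "listening" indicator to the recognizer's own audio events. In this sketch, `attachCaptureIndicator` and the `setIndicator` callback are hypothetical names; the event names (`audiostart`, `audioend`, `end`, `error`) belong to the SpeechRecognition interface, and `addEventListener` is used so existing `onend`/`onerror` handlers are not overwritten.

```javascript
// Hypothetical helper: keep a "listening" indicator in sync with audio capture.
// `setIndicator(on)` is your own function that shows or hides a UI element.
function attachCaptureIndicator(recognition, setIndicator) {
  // Capture has begun: show the indicator.
  recognition.addEventListener("audiostart", () => setIndicator(true));

  // Capture stopped, the session ended, or something failed: hide it.
  for (const type of ["audioend", "end", "error"]) {
    recognition.addEventListener(type, () => setIndicator(false));
  }
}
```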

Setting Up JavaScript Speech Recognition

Implementing JavaScript speech recognition is straightforward but requires careful handling of permissions, events, and browser inconsistencies. For a quick start with both speech and video/audio calling, check out a JavaScript video and audio calling SDK to accelerate your development process.

Basic Example: Initializing and Using SpeechRecognition

```javascript
// Check for browser support
const SpeechRecognition = window.SpeechRecognition || window.webkitSpeechRecognition;
if (!SpeechRecognition) {
  alert("Speech recognition not supported in this browser.");
} else {
  const recognition = new SpeechRecognition();
  recognition.lang = 'en-US';
  recognition.onresult = (event) => {
    const transcript = event.results[0][0].transcript;
    console.log("Recognized:", transcript);
  };
  recognition.onerror = (event) => {
    console.error("Speech recognition error:", event.error);
  };
  // Start recognition
  recognition.start();
  // To stop: recognition.stop();
}
```

Explanation:

- Checks for API support

- Initializes a recognition instance

- Handles results and errors

- Starts listening for speech
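The basic example calls start() once, but a real page usually wires start and stop to a button. Calling start() on a session that is already running throws an InvalidStateError, so a guarded toggle might look like the sketch below. `createToggle` is a hypothetical helper that takes a recognition instance like the one created above.

```javascript
// Hypothetical helper: start/stop recognition from one button without
// calling start() twice on a running session.
function createToggle(recognition) {
  let active = false; // true from start() until the "end" event fires

  recognition.addEventListener("end", () => {
    active = false;
  });

  return {
    isActive: () => active,
    toggle() {
      if (active) {
        // Stop capturing; a final result may still arrive before "end".
        recognition.stop();
      } else {
        // Set before the asynchronous "start" event to block double clicks.
        active = true;
        recognition.start();
      }
    },
  };
}

// In a page:
// const toggle = createToggle(recognition);
// micButton.addEventListener("click", () => toggle.toggle());
```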

Streaming Results and Continuous Recognition

For real-time transcription or hands-free operation, use interimResults and continuous:

```javascript
const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();
recognition.interimResults = true; // Get partial (interim) results
recognition.continuous = true; // Keep returning results for multiple utterances

let finalTranscript = ''; // Final text accumulated across result events

recognition.onresult = (event) => {
  let interim = '';
  // Only results from event.resultIndex onward are new or changed
  for (let i = event.resultIndex; i < event.results.length; ++i) {
    const text = event.results[i][0].transcript;
    if (event.results[i].isFinal) {
      finalTranscript += text;
    } else {
      interim += text;
    }
  }
  // Assumes an element with id="output" exists on the page
  document.getElementById('output').textContent = finalTranscript + ' ' + interim;
};
recognition.start();
```
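Even with continuous = true, browsers may end a session on their own, for example after a stretch of silence; the exact timing is implementation-specific. A common workaround, sketched here under that assumption, is to restart in the end handler unless the user asked to stop. `keepListening` is a hypothetical helper, and permission errors deliberately stop the loop so it does not restart forever.

```javascript
// Hypothetical helper: keep a continuous session alive across
// browser-initiated ends until the user explicitly stops it.
function keepListening(recognition) {
  let wanted = true; // becomes false when the user stops or access is denied

  recognition.continuous = true;

  recognition.addEventListener("end", () => {
    if (wanted) recognition.start(); // resume after an unrequested end
  });

  recognition.addEventListener("error", (event) => {
    // The error event fires before "end"; do not loop on errors
    // that will keep failing, such as a blocked microphone.
    if (event.error === "not-allowed" || event.error === "service-not-allowed") {
      wanted = false;
    }
  });

  recognition.start();

  return {
    stop() {
      wanted = false;
      recognition.stop();
    },
  };
}
```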

Use Case: Real-time meeting transcription, live chat input, or voice-controlled interfaces. If you want to try these capabilities in your own projects, try it for free and explore the possibilities.

Customizing JavaScript Speech Recognition

JavaScript speech recognition can be customized in several ways: you can choose the recognition language and dialect, request multiple alternative transcripts, and, where the browser supports it, supply grammar hints. For developers seeking to add advanced communication features, integrating a JavaScript video and audio calling SDK can provide a comprehensive solution for both speech and media handling.

Language and Accents

You can set the lang property to target specific languages or dialects, improving recognition accuracy for global users.

```javascript
const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();
recognition.lang = 'es-ES'; // Spanish (Spain)
recognition.onresult = (event) => {
  console.log("Spanish transcript:", event.results[0][0].transcript);
};
recognition.start();
```
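To match the recognizer's language to the user's locale, lang can be derived from the user's preferred languages. This sketch assumes your app keeps its own hypothetical `supported` list of BCP 47 tags (such as 'es-ES') that it has tested; `pickRecognitionLang` is also a hypothetical name.

```javascript
// Hypothetical helper: choose a recognition language from preferred locales.
// `preferred` is normally navigator.languages; `supported` is your own list.
function pickRecognitionLang(preferred, supported, fallback = "en-US") {
  const lower = supported.map((tag) => tag.toLowerCase());

  for (const tag of preferred) {
    // 1. Exact match, e.g. "es-ES" -> "es-ES"
    const exact = lower.indexOf(tag.toLowerCase());
    if (exact !== -1) return supported[exact];

    // 2. Same primary language, e.g. "es-MX" -> "es-ES"
    const primary = tag.split("-")[0].toLowerCase();
    const partial = lower.findIndex((s) => s.split("-")[0] === primary);
    if (partial !== -1) return supported[partial];
  }
  return fallback; // nothing matched
}

// In a page:
// recognition.lang = pickRecognitionLang(navigator.languages, ["en-US", "es-ES"]);
```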

Tip: Always match the language setting to your user base and UI locale to maximize accuracy. For more robust voice-driven experiences, consider combining speech recognition with a Voice SDK to enable interactive audio rooms and group conversations.

Managing Grammar and Alternatives

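Advanced use cases can use a SpeechGrammarList to prioritize expected phrases or command sets and reduce ambiguity. Browser support for grammars is limited and inconsistent, so treat them as a hint rather than a guarantee: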

```javascript
const SpeechGrammarList = window.SpeechGrammarList || window.webkitSpeechGrammarList;
const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();
// JSGF grammar listing the phrases we expect to hear
const grammar = '#JSGF V1.0; grammar colors; public <color> = red | green | blue ;';
// Some browsers do not expose SpeechGrammarList at all
if (SpeechGrammarList) {
  const speechRecognitionList = new SpeechGrammarList();
  speechRecognitionList.addFromString(grammar, 1); // weight between 0 and 1
  recognition.grammars = speechRecognitionList;
}
recognition.onresult = (event) => {
  console.log("Color recognized:", event.results[0][0].transcript);
};
recognition.start();
```

Handling Multiple Alternatives:

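```javascript
// Reuses the `recognition` instance from the previous example.
// Ask for up to three candidate transcripts per result (browsers may return fewer).
recognition.maxAlternatives = 3;
recognition.onresult = (event) => {
  for (let i = 0; i < event.results[0].length; i++) {
    console.log("Alternative", i, ":", event.results[0][i].transcript);
  }
};
```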
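Since grammar lists are not applied consistently across browsers, an application can also check the returned alternatives itself. This sketch (with hypothetical names `pickAlternative` and `expected`) returns the first alternative that matches an expected phrase and otherwise falls back to the one with the highest `confidence`.

```javascript
// Hypothetical helper: choose among the alternatives of one recognition result.
// `result` is a SpeechRecognitionResult (indexable, with a length);
// `expected` is a Set of lowercase phrases the app accepts.
function pickAlternative(result, expected) {
  let best = null;
  for (let i = 0; i < result.length; i++) {
    const alt = result[i];
    const phrase = alt.transcript.trim().toLowerCase();
    // Prefer an alternative that is one of the expected phrases.
    if (expected.has(phrase)) return { transcript: phrase, matched: true };
    // Otherwise remember the most confident alternative seen so far.
    if (best === null || alt.confidence > best.confidence) best = alt;
  }
  return best && { transcript: best.transcript.trim(), matched: false };
}

// In a page:
// recognition.onresult = (event) => {
//   const choice = pickAlternative(event.results[0], new Set(["red", "green", "blue"]));
// };
```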

Practical Use Cases for JavaScript Speech Recognition

JavaScript speech recognition unlocks a range of innovative web experiences, including:

- Voice Commands: Control page navigation, trigger actions, or operate smart UI components hands-free.

- Dictation and Note-Taking: Enable users to transcribe speech for documents, messages, or forms without typing.

- Accessibility Enhancements: Assist users with disabilities by providing alternative input methods.

- Voice-Driven Navigation: Navigate web pages or applications using spoken directions.
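For voice commands and voice-driven navigation, recognized text has to be mapped to actions. Here is a minimal sketch, assuming simple case-insensitive substring matching is good enough (real apps may need fuzzier matching); `createCommandRouter` and the example phrases are hypothetical.

```javascript
// Hypothetical helper: route recognized phrases to handler functions.
// `commands` is an array of [phrase, handler] pairs, checked in order.
function createCommandRouter(commands) {
  const normalized = commands.map(([phrase, handler]) => [phrase.toLowerCase(), handler]);

  return function route(transcript) {
    const text = transcript.toLowerCase().trim();
    for (const [phrase, handler] of normalized) {
      if (text.includes(phrase)) {
        handler(text);
        return phrase; // which command fired
      }
    }
    return null; // no command matched; the app could treat it as dictation
  };
}

// In a page:
// const route = createCommandRouter([
//   ["scroll down", () => window.scrollBy(0, 400)],
//   ["go back", () => history.back()],
// ]);
// recognition.onresult = (e) => route(e.results[e.results.length - 1][0].transcript);
```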

In 2025, these capabilities are increasingly standard in productivity suites, smart home dashboards, and educational tools. For seamless integration of video and audio features, an embed video calling SDK can help you quickly add conferencing to your voice-enabled applications.

Security and Privacy Considerations for JavaScript Speech Recognition

Speech recognition in the browser requires explicit user consent and secure handling of sensitive data. Always implement the following practices:

- Use HTTPS to ensure microphone access and data security

- Clearly inform users when audio is being recorded or transmitted

- Understand that some browsers process audio in the cloud, which may raise privacy considerations

- Do not store or share audio/transcripts without explicit permission
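The HTTPS requirement and the permission state can be checked before showing a microphone button. This sketch uses `isSecureContext` and the Permissions API; `checkMicAccess` is a hypothetical helper, and because not every browser accepts "microphone" as a permission name, failures are reported as "unknown" rather than treated as a denial.

```javascript
// Hypothetical helper: check preconditions for microphone access.
// `globalObj` is normally `window`.
async function checkMicAccess(globalObj) {
  // Microphone access requires a secure context (HTTPS or localhost).
  if (!globalObj.isSecureContext) return "insecure-context";

  const permissions = globalObj.navigator && globalObj.navigator.permissions;
  if (!permissions || typeof permissions.query !== "function") return "unknown";

  try {
    const status = await permissions.query({ name: "microphone" });
    return status.state; // "granted", "denied" or "prompt"
  } catch {
    return "unknown"; // permission name not supported by this browser
  }
}

// In a page:
// if ((await checkMicAccess(window)) === "denied") { /* hide the mic button */ }
```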

Limitations and Best Practices for JavaScript Speech Recognition

While JavaScript speech recognition is powerful, it comes with some limitations:

- Browser Support: Not all browsers fully support the API—test across platforms

- Accuracy: Background noise, accents, and low-quality microphones can reduce recognition accuracy

- Best Practices: Always provide fallback input methods, inform users about recording, and allow manual correction of transcripts
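Providing a fallback input method is easier when each error code maps to a clear message and a decision. The codes below are values of the SpeechRecognition error event's `error` property; the helper name `describeRecognitionError`, the messages, and which errors trigger a fallback to typing are illustrative assumptions.

```javascript
// Hypothetical helper: map a SpeechRecognition error code to a user message
// and whether the UI should switch to a typed-input fallback.
function describeRecognitionError(code) {
  switch (code) {
    case "no-speech":
      return { message: "No speech was detected. Try again.", fallback: false };
    case "aborted":
      return { message: "Listening was cancelled.", fallback: false };
    case "audio-capture":
      return { message: "No microphone was found.", fallback: true };
    case "not-allowed":
    case "service-not-allowed":
      return { message: "Microphone access is blocked.", fallback: true };
    case "network":
      return { message: "The speech service could not be reached.", fallback: true };
    case "language-not-supported":
      return { message: "This language is not supported for voice input.", fallback: true };
    default:
      return { message: "Voice input failed.", fallback: true };
  }
}

// In a page:
// recognition.onerror = (e) => {
//   const { message, fallback } = describeRecognitionError(e.error);
//   // show `message`; if `fallback` is true, focus a text field instead
// };
```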

For developers who want to combine speech recognition with real-time communication, leveraging a JavaScript video and audio calling SDK ensures your application is ready for the future of web interaction.

Conclusion

JavaScript speech recognition, powered by the Web Speech API, is a game-changer for modern, accessible, and hands-free web applications. As browser support grows in 2025, the possibilities for voice-driven interfaces will only expand. If you're ready to build next-generation voice and video experiences, try it for free and start exploring today.


FAQ