Introduction to LLM for Customer Support

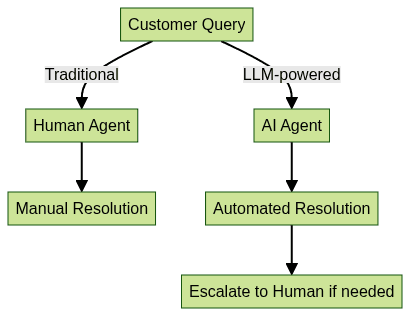

Large Language Models (LLMs) have emerged as game-changers in the customer support landscape. Leveraging deep learning and generative AI, LLMs like GPT-4 and open-source alternatives are now capable of understanding and generating human-like responses across diverse customer service scenarios. As businesses strive to deliver faster, more personalized, and scalable support, the adoption of AI-powered solutions has become critical.

AI and automation in customer support are no longer futuristic concepts; they are practical tools for streamlining workflows, reducing costs, and improving the customer experience. LLMs automate repetitive queries and free support agents to focus on more complex tasks. The result is higher customer satisfaction, more efficient ticket resolution, and a transformed support ecosystem.

How LLMs Transform Customer Support

What is an LLM and How Does it Work for Support?

A Large Language Model (LLM) is a neural network trained on massive text datasets to understand and generate language. In customer support, LLMs interpret customer queries, recognize intent, and craft contextual, relevant responses. Unlike rule-based chatbots, LLMs can give nuanced answers and handle ambiguous questions. They use the conversation so far as context within a session, but they only improve over time when they are retrained, fine-tuned, or given updated reference material.
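As a minimal sketch of how a query and its conversation context might be packaged for a model, the snippet below assembles a chat-style message list. The `role`/`content` message shape is an assumption modeled on common chat APIs, and `SYSTEM_PROMPT` and `build_support_messages` are hypothetical names used only for illustration; no model is actually called here.

```python
from typing import Dict, List

# Hypothetical system prompt; adapt the wording to your own support policy.
SYSTEM_PROMPT = (
    "You are a customer support assistant. Identify the customer's intent, "
    "then answer concisely. If you are unsure, say so and offer to escalate."
)


def build_support_messages(query: str, history: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Assemble a message list: system prompt, prior turns, then the new query."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages.extend(history)  # earlier turns supply the conversational context
    messages.append({"role": "user", "content": query.strip()})
    return messages


history = [
    {"role": "user", "content": "I ordered a phone case last week."},
    {"role": "assistant", "content": "Thanks! How can I help with that order?"},
]
messages = build_support_messages("  It still has not shipped. ", history)
```

The resulting list would then be passed to whichever chat completion endpoint your platform provides.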

Key Benefits of LLM for Customer Support

- Faster Resolution: Automate common inquiries and streamline ticket triage.

- Accuracy: Reduce human error and deliver precise information by referencing integrated knowledge bases.

- Scalability: Handle high query volumes without a proportional increase in staffing.

- Cost Reduction: Lower operational costs by automating repetitive support tasks.

In addition to text-based automation, integrating a Video Calling API can further enhance customer support by enabling real-time face-to-face communication for complex issues.

Real-world Impact: Case Studies & Metrics

Organizations deploying LLMs have reported:

- 30-50% reduction in first response times

- 40%+ cost savings on tier-1 support

- Significant CSAT score improvements

LLM-powered workflows enable faster resolutions, escalations only when necessary, and a seamless support experience. For businesses seeking to add audio capabilities, integrating a Voice SDK can provide high-quality voice interactions alongside AI-driven support.

Core Features of LLMs for Customer Support

Omnichannel Automation

Modern LLMs power customer support across all channels: live chat, voice, email, and social media platforms. This ensures a unified customer experience and enables seamless handoffs between channels without losing context. For organizations looking to implement video and audio solutions, options like a Python video and audio calling SDK can be seamlessly integrated into support workflows.

Personalized, Contextual Responses

By leveraging customer profiles and interaction history, LLMs generate responses tailored to individual needs. This context-awareness enables more empathetic and helpful interactions, improving customer satisfaction and loyalty. For teams seeking quick deployment, an embeddable video calling SDK allows you to add video calling features directly into your support platform.

Integration with Knowledge Bases

LLMs can retrieve and synthesize information from internal documentation, FAQs, and product manuals in real time. This capability helps customers and agents receive relevant, up-to-date answers without manual searching. For live support scenarios, a Live Streaming API SDK can enable interactive product demos or troubleshooting sessions.

Multilingual & Brand-aligned Interactions

State-of-the-art LLMs support multilingual conversations and can be fine-tuned to match a company’s tone of voice, terminology, and brand guidelines. This alignment ensures consistency and professionalism across every customer touchpoint. If your support stack is built with JavaScript, integrating a JavaScript video and audio calling SDK can further enhance your omnichannel capabilities.

Implementation Guide: Deploying LLM for Customer Support

Choosing the Right LLM Platform

- Open-source: Solutions like Llama 2 or Falcon offer flexibility and control over data, ideal for organizations with strong ML teams.

- Proprietary: Platforms such as OpenAI, Google, or Anthropic provide managed infrastructure, frequent updates, and enterprise support.

- Selection Criteria: Consider scalability, privacy, language support, integration capabilities, and cost.
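One way to make the selection criteria above comparable is a simple weighted score. The sketch below is illustrative only: the weights, the 1 to 5 rating scale, and the candidate names `option_a` and `option_b` are all hypothetical placeholders, not assessments of any real platform.

```python
from typing import Dict

# Hypothetical weights; they should sum to 1.0 and reflect your own priorities.
CRITERIA_WEIGHTS: Dict[str, float] = {
    "scalability": 0.25,
    "privacy": 0.25,
    "language_support": 0.15,
    "integration": 0.20,
    "cost": 0.15,
}


def score_platform(ratings: Dict[str, float], weights: Dict[str, float] = CRITERIA_WEIGHTS) -> float:
    """Return the weighted average of per-criterion ratings (for example, 1 to 5)."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[name] * ratings[name] for name in weights)


# Placeholder ratings for two unnamed candidates.
candidates = {
    "option_a": {"scalability": 4, "privacy": 5, "language_support": 3, "integration": 3, "cost": 4},
    "option_b": {"scalability": 5, "privacy": 3, "language_support": 5, "integration": 5, "cost": 2},
}
ranked = sorted(candidates, key=lambda name: score_platform(candidates[name]), reverse=True)
```

A score like this is only a starting point for discussion; hard requirements such as data residency usually act as filters before any weighting is applied.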

When evaluating communication channels, consider adding a phone call API to support customers who prefer direct voice interactions.

Integrating with Existing Workflows

LLMs are typically integrated into help desks, CRMs, and ticketing systems via APIs or SDKs. Below is a sample Python snippet demonstrating integration with a support ticketing system:

```python
import requests


def create_support_ticket_llm(api_url, auth_token, customer_query):
    """Create a ticket from a customer query via the help desk's REST API."""
    payload = {
        "query": customer_query,
        "source": "llm-integration",
    }
    headers = {
        "Authorization": f"Bearer {auth_token}",
        "Content-Type": "application/json",
    }
    # A timeout keeps a slow help desk from blocking the caller indefinitely.
    response = requests.post(api_url, json=payload, headers=headers, timeout=10)
    response.raise_for_status()  # Raise on HTTP errors instead of parsing an error body
    return response.json()


# Usage (the URL and token are placeholders)
result = create_support_ticket_llm(
    api_url="https://support.example.com/api/tickets",
    auth_token="YOUR_API_TOKEN",
    customer_query="My app keeps crashing on launch.",
)
print(result)
```

For teams using React Native, a React Native video and audio calling SDK can be easily integrated to provide seamless in-app communication.

Feeding Internal Knowledge Bases

For optimal performance, LLMs require well-structured, up-to-date internal knowledge bases. Best practices include:

- Cleaning and standardizing data formats

- Annotating documents for intent recognition

- Regularly updating content to reflect product changes
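The cleaning and standardizing step above can be sketched with the standard library alone. This is a minimal example under stated assumptions: the tag-stripping regex is deliberately crude, and the chunk size and overlap are arbitrary illustrative values rather than recommendations.

```python
import re
import unicodedata
from typing import List


def clean_text(raw: str) -> str:
    """Normalize Unicode, strip HTML-like tags, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = re.sub(r"<[^>]+>", " ", text)  # crude tag removal, not a full HTML parser
    return re.sub(r"\s+", " ", text).strip()


def chunk_words(text: str, max_words: int = 50, overlap: int = 10) -> List[str]:
    """Split text into overlapping word windows suitable for indexing."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


article = clean_text("<p>Reset\u00a0your   password</p>")
```

Overlapping windows reduce the chance that an answer is split across two chunks, at the cost of some duplicated text in the index.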

Security & Compliance Considerations

AI-driven support must prioritize data privacy and compliance:

- GDPR: Ensure customer data rights and consent mechanisms

- SOC2: Maintain strict controls over data access and processing

- PII Masking: Mask sensitive information in both training and production environments

- Audit Trails: Log LLM interactions for transparency and troubleshooting
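PII masking and audit logging from the list above can be combined so that logs never store raw customer details. The sketch below uses two illustrative regular expressions for emails and phone numbers; they will miss some formats and over-match others (long order numbers, for instance), so treat them as assumptions to replace with a vetted PII detection tool. `mask_pii` and `audit_record` are hypothetical helper names.

```python
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; real deployments need broader, tested coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def audit_record(ticket_id: str, prompt: str, response: str) -> str:
    """Build one JSON log line for an LLM interaction, with PII masked."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "ticket_id": ticket_id,
        "prompt": mask_pii(prompt),
        "response": mask_pii(response),
    })


masked = mask_pii("Email jane.doe@example.com or call +1 (555) 010-9999.")
```

Each record can be appended to a write-once log store so that interactions remain traceable without exposing the original identifiers.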

Advanced LLM Use Cases in Customer Support

Technical Support Automation

LLMs can autonomously resolve technical queries, from troubleshooting steps to advanced diagnostics. By integrating with internal APIs and knowledge bases, LLMs provide actionable solutions, reducing escalation rates. For businesses aiming to offer advanced support, integrating a Video Calling API can facilitate direct visual troubleshooting and collaboration.

Sentiment Analysis & CSAT Improvement

LLMs analyze customer sentiment in real time, enabling proactive responses to dissatisfaction and helping teams identify areas for improvement. This drives higher CSAT (Customer Satisfaction) scores and fosters customer loyalty.
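A sentiment score only helps if something acts on it. The sketch below routes a conversation based on a classifier result in the `[{'label': ..., 'score': ...}]` shape returned by the Hugging Face `sentiment-analysis` pipeline. The `0.9` threshold and the route names are assumptions chosen for illustration.

```python
from typing import Dict, List, Union

SentimentResult = List[Dict[str, Union[str, float]]]


def route_by_sentiment(result: SentimentResult, threshold: float = 0.9) -> str:
    """Escalate to a human when the classifier is confidently negative."""
    top = result[0]
    if top["label"] == "NEGATIVE" and top["score"] >= threshold:
        return "escalate_to_human"
    return "continue_automated"


# Hand-written sample results standing in for real classifier output.
angry = [{"label": "NEGATIVE", "score": 0.98}]
mild = [{"label": "NEGATIVE", "score": 0.61}]
happy = [{"label": "POSITIVE", "score": 0.99}]
routes = [route_by_sentiment(r) for r in (angry, mild, happy)]
```

Tuning the threshold against historical tickets is usually necessary, since an overly sensitive rule floods agents with escalations.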

Proactive Support & Insights Generation

AI-powered help desks can anticipate customer issues based on usage patterns, sending alerts or support before problems are reported. LLMs also generate actionable insights for product and service enhancements.
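A very simple form of anticipating issues from usage patterns is counting error events per customer and flagging anyone above a threshold. The event schema (`customer_id`, `type`) and the threshold of three errors are hypothetical; a production system would likely use time windows and richer signals.

```python
from collections import Counter
from typing import Dict, Iterable, List


def find_at_risk_customers(events: Iterable[Dict[str, str]], error_threshold: int = 3) -> List[str]:
    """Return sorted customer IDs with at least `error_threshold` error events."""
    error_counts = Counter(
        event["customer_id"] for event in events if event["type"] == "error"
    )
    return sorted(cid for cid, count in error_counts.items() if count >= error_threshold)


# Sample events standing in for a real usage log.
events = [
    {"customer_id": "c1", "type": "error"},
    {"customer_id": "c1", "type": "error"},
    {"customer_id": "c1", "type": "error"},
    {"customer_id": "c2", "type": "error"},
    {"customer_id": "c2", "type": "login"},
]
at_risk = find_at_risk_customers(events)
```

Flagged customers could then receive a proactive message or a pre-filled ticket before they contact support.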

Sentiment Analysis Code Example

```python
from transformers import pipeline

# Load a pre-trained sentiment-analysis pipeline (downloads a default model on first use)
sentiment_pipeline = pipeline("sentiment-analysis")

customer_message = "I'm frustrated because my order hasn't arrived yet."
result = sentiment_pipeline(customer_message)
print(result)  # Example output (exact score varies): [{'label': 'NEGATIVE', 'score': 0.98}]
```

Challenges and Best Practices

Common Pitfalls (Hallucinations, Data Quality)

LLMs may generate plausible but incorrect answers (hallucinations) if knowledge bases are outdated or poorly structured. Ensuring high-quality, relevant data is critical.
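One common mitigation is to ground each answer in a retrieved article and decline to answer when nothing relevant is found. The sketch below uses plain keyword overlap (Jaccard similarity) as a stand-in for a real retrieval system; the `0.2` threshold, the sample knowledge base, and the function names are assumptions for illustration only.

```python
import re
from typing import Dict, Optional, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_match(query: str, kb: Dict[str, str]) -> Tuple[Optional[str], float]:
    """Return the ID and Jaccard score of the article that best overlaps the query."""
    query_tokens = tokenize(query)
    best_id, best_score = None, 0.0
    for doc_id, text in kb.items():
        doc_tokens = tokenize(text)
        union = query_tokens | doc_tokens
        score = len(query_tokens & doc_tokens) / len(union) if union else 0.0
        if score > best_score:
            best_id, best_score = doc_id, score
    return best_id, best_score


def grounded_prompt(query: str, kb: Dict[str, str], min_score: float = 0.2) -> Optional[str]:
    """Build a prompt restricted to one article, or None if nothing is relevant."""
    doc_id, score = best_match(query, kb)
    if doc_id is None or score < min_score:
        return None  # caller should escalate rather than let the model guess
    return f"Answer using only this article:\n{kb[doc_id]}\n\nQuestion: {query}"


kb = {
    "reset": "How to reset your password from the login page",
    "refund": "Refund policy for damaged items",
}
prompt = grounded_prompt("How do I reset my password", kb)
off_topic = grounded_prompt("What is the weather today", kb)
```

Returning `None` for off-topic questions gives the surrounding workflow an explicit signal to hand off to a human instead of producing an unsupported answer.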

Ensuring Accuracy and Quality Control

Implement multi-step validation pipelines:

- Human-in-the-loop review for complex queries

- Automated checks against authoritative sources

- Feedback collection from users for continuous tuning
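The validation steps above can be sketched as a small gate that every draft answer passes through before it is sent. The `DraftAnswer` fields, the `0.8` confidence cutoff, and the three outcomes are hypothetical; in particular, how a confidence score is obtained depends on your model and tooling.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class DraftAnswer:
    text: str
    confidence: float          # hypothetical 0-1 score supplied by an upstream step
    cited_sources: List[str]   # IDs of knowledge base articles the draft relied on


def validate(draft: DraftAnswer, approved_sources: Set[str], min_confidence: float = 0.8) -> str:
    """Return 'reject', 'human_review', or 'send' for a draft answer."""
    # Automated check: every citation must come from an authoritative source.
    if not draft.cited_sources or not set(draft.cited_sources) <= approved_sources:
        return "reject"
    # Human-in-the-loop: low-confidence drafts go to an agent for review.
    if draft.confidence < min_confidence:
        return "human_review"
    return "send"


approved = {"kb-1", "kb-2"}
decision = validate(DraftAnswer("Use the reset link.", 0.92, ["kb-1"]), approved)
```

Logging each decision alongside later user feedback makes it possible to check whether the cutoff is set sensibly.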

Continuous Learning and Feedback Loops

Regularly retrain LLMs with recent support transcripts and customer feedback. Feedback loops ensure your AI agent adapts to changing products, policies, and customer expectations, maintaining high accuracy and relevance.
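A feedback loop needs a step that turns raw feedback into training data. The sketch below assumes feedback is stored as JSON lines with `query`, `response`, and `rating` fields (a hypothetical schema) and keeps only highly rated exchanges as prompt and completion pairs.

```python
import json
from typing import Dict, Iterable, List


def select_training_examples(feedback_lines: Iterable[str], min_rating: int = 4) -> List[Dict[str, str]]:
    """Keep exchanges rated at or above `min_rating` as prompt/completion pairs."""
    examples = []
    for line in feedback_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines in the log
        record = json.loads(line)
        if record["rating"] >= min_rating:
            examples.append({"prompt": record["query"], "completion": record["response"]})
    return examples


# Sample log lines standing in for a real feedback store.
feedback_log = [
    '{"query": "How do I reset my password?", "response": "Use the reset link on the login page.", "rating": 5}',
    '{"query": "Where is my refund?", "response": "Please wait.", "rating": 2}',
]
examples = select_training_examples(feedback_log)
```

Low-rated exchanges are equally useful: reviewing them often reveals gaps in the knowledge base rather than in the model itself.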

Future Trends: LLM and the Evolution of Customer Support

The future of customer support in 2025 and beyond will be defined by predictive AI, deeper personalization, and seamless agent augmentation. LLMs will anticipate customer needs, automate increasingly complex tasks, and empower human agents with real-time insights, transforming support from reactive to proactive and strategic.

Conclusion

LLMs are redefining customer support by enabling scalable, efficient, and deeply personalized service. Organizations that leverage generative AI and LLM integration stand to achieve higher customer satisfaction, streamlined workflows, and lasting competitive advantage. If you're ready to enhance your support capabilities with AI-powered communication, try it for free and experience the next generation of customer support solutions.


FAQ