Introduction to LLM Examples for Voice Agents

Large Language Models (LLMs) have become foundational in powering next-generation voice agents and assistants. As we enter 2025, the fusion of conversational AI with voice technology is redefining how users interact with software, devices, and digital services. LLMs bring advanced natural language understanding (NLU), context awareness, and dialogue management to voice-based systems, enabling more human-like and effective conversations.

This article delves into LLM examples for voice agent development, covering the essential components, top frameworks, hands-on code samples, and best practices. Whether you are building a real-time LLM-powered voice bot, exploring open-source voice assistants, or seeking to optimize voice UX, this guide will help you harness the latest advances in voice AI development.

What Is an LLM-Powered Voice Agent?

A voice agent is an intelligent software application that enables interactive voice communication with users. Powered by LLMs, these agents can comprehend, process, and generate human-like responses, going far beyond traditional rule-based bots.

Large Language Models, such as Llama 3 or GPT, are trained on vast amounts of text data, which gives them strong conversational and contextual understanding. When integrated with Speech-to-Text (STT) and Text-to-Speech (TTS) technologies, LLMs can handle natural voice input and output, delivering seamless conversational AI experiences. For developers looking to enable real-time voice features, integrating a Voice SDK can streamline the process of adding live audio capabilities to your applications.

Use Cases:

- Customer Support: Automate natural conversations for troubleshooting, booking, and inquiries.

- Smart Assistants: Power home automation, scheduling, and information retrieval.

- Accessibility Tools: Enable voice-driven navigation and content consumption for diverse users.

With the increasing demand for personalized, efficient, and accessible digital experiences, LLM-powered voice agents are at the forefront of innovation in 2025.

Key Components of LLM-Based Voice Agents

Building effective LLM-powered voice agents requires integrating several core technologies:

Speech-to-Text (STT)

STT engines convert spoken language into text, acting as the bridge between user voice input and LLM processing. Popular STT solutions include Google Speech-to-Text, DeepSpeech, and Whisper. When building telephony-based solutions, leveraging a robust phone call API can help facilitate seamless voice interactions over the phone.

Large Language Model (LLM)

The LLM (e.g., Llama 3, GPT-4) interprets the transcribed text, manages dialogue state, and generates contextually appropriate responses.

Text-to-Speech (TTS)

TTS systems convert the output text from the LLM back into natural-sounding speech. Advanced TTS engines like Amazon Polly or OpenAI's TTS offer diverse voices, accents, and customization.

State Management and Memory

To provide coherent and context-aware conversations, voice agents must retain dialogue state and user context across turns. State management can be handled with in-memory objects or external databases.
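As a minimal sketch of the in-memory option, the class below keeps a bounded window of recent turns plus a few key-value context slots. The class name, method names, and the default window size are assumptions made for illustration; they do not come from any particular framework.

```typescript
// Hypothetical in-memory conversation store; all names are illustrative.
type Role = "user" | "assistant";

interface Turn {
  role: Role;
  text: string;
}

class ConversationMemory {
  private turns: Turn[] = [];
  // Free-form slots for user context, for example a name or an order number.
  private context = new Map<string, string>();
  private readonly maxTurns: number;

  constructor(maxTurns = 10) {
    this.maxTurns = maxTurns;
  }

  addTurn(role: Role, text: string): void {
    this.turns.push({ role, text });
    // Drop the oldest turns once the window is full.
    if (this.turns.length > this.maxTurns) {
      this.turns = this.turns.slice(-this.maxTurns);
    }
  }

  setContext(key: string, value: string): void {
    this.context.set(key, value);
  }

  getContext(key: string): string | undefined {
    return this.context.get(key);
  }

  // Render the retained turns as plain text for inclusion in a prompt.
  toTranscript(): string {
    return this.turns.map((t) => `${t.role}: ${t.text}`).join("\n");
  }
}
```

Bounding the window keeps prompts short, at the cost of forgetting older turns. An external database would be the natural replacement when state has to survive restarts or be shared across servers.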

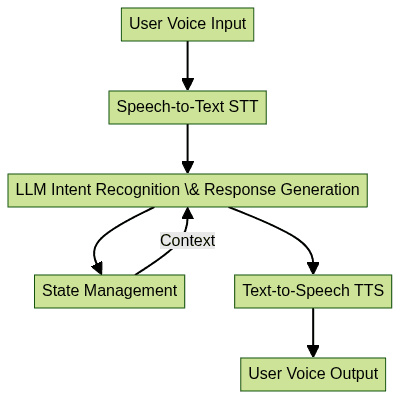

Architecture Diagram

This architecture showcases the pipeline from user speech, through LLM processing, to synthesized voice output, highlighting the interplay of STT, LLM, TTS, and stateful logic in LLM-based voice agent systems. For those looking to add both video and audio calling features, a JavaScript video and audio calling SDK offers a quick way to get started with cross-platform communication.

Popular LLMs and Frameworks for Voice Agents

In 2025, several powerful LLMs and development frameworks are available for building voice agents:

- Llama 3: Open-weight (released under Meta's community license), efficient, and highly customizable for private deployments.

- GPT (OpenAI): Industry-leading conversational capabilities, available via API.

- DiVA Llama 3: A fine-tuned Llama 3 variant designed for dialogue and voice applications.

Open-Source vs Commercial:

- Open-weight and open-source LLMs (like Llama 3) offer transparency, self-hosting, and community-driven improvements.

- Commercial APIs (such as OpenAI's GPT) provide ease of use, scalability, and robust support.

Voice Agent SDKs and Tools:

- Rasa Open Source

- Picovoice

- Coqui AI

- OpenAI Whisper (STT)

- Mozilla TTS

- For rapid deployment of video-enabled voice agents, consider using an embed video calling SDK to integrate prebuilt communication modules directly into your app.

These frameworks, models, and SDKs give developers a practical starting point for LLM-based voice agent projects.

Practical LLM Examples for Voice Agents

Let's explore hands-on LLM examples for voice agent implementation using TypeScript, a popular choice for full-stack and cross-platform voice AI development. If your use case involves mobile platforms, a React Native video and audio calling SDK can help you build rich communication features for iOS and Android apps.

Example 1: Simple Conversational Voice Agent (TypeScript)

This basic example demonstrates how to connect STT, an LLM API, and TTS to create a conversational voice agent.

```typescript
// Import required modules (local wrappers around your STT, LLM, and TTS providers)
import { transcribeAudio } from "./stt";
import { queryLLM } from "./llm";
import { synthesizeSpeech } from "./tts";
import { playAudio } from "./audio"; // assumed platform-specific playback helper

export async function voiceAgent(audioInput: Buffer): Promise<void> {
  // Step 1: Convert speech to text
  const userText = await transcribeAudio(audioInput);

  // Step 2: Send text to LLM for response
  const llmResponse = await queryLLM(userText);

  // Step 3: Convert response to speech
  const speechOutput = await synthesizeSpeech(llmResponse);

  // Step 4: Play synthesized speech (platform-dependent)
  await playAudio(speechOutput);
}
```
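To try the same flow without real services, one option is to pass the three stages in as functions. The sketch below is an illustration under that assumption: the `VoicePipeline` interface, `runTurn`, and the stub stages are hypothetical names, and the stubs treat a plain string as the "audio" so the example runs anywhere.

```typescript
// Stage signatures are assumptions for illustration; real STT, LLM, and TTS
// clients will have their own APIs.
interface VoicePipeline<Audio> {
  transcribe: (audio: Audio) => Promise<string>;
  respond: (text: string) => Promise<string>;
  synthesize: (text: string) => Promise<Audio>;
}

async function runTurn<Audio>(
  pipeline: VoicePipeline<Audio>,
  audio: Audio
): Promise<Audio> {
  const userText = await pipeline.transcribe(audio);
  if (userText.trim() === "") {
    // Nothing intelligible was heard: ask again instead of calling the LLM.
    return pipeline.synthesize("Sorry, I didn't catch that. Could you repeat it?");
  }
  const reply = await pipeline.respond(userText);
  return pipeline.synthesize(reply);
}

// Stub stages that use a string as stand-in audio, so no service is needed.
const stubPipeline: VoicePipeline<string> = {
  transcribe: async (audio) => audio,
  respond: async (text) => `You said: ${text}`,
  synthesize: async (text) => text,
};
```

Injecting the stages this way keeps the turn logic independent of any vendor, so swapping an STT or TTS provider only means supplying a different function.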

Example 2: Stateful RPG Interaction (Pocket Computer, TypeScript)

This advanced pattern demonstrates stateful conversations and voice personalities using prompt engineering:

```typescript
// Reuses transcribeAudio, queryLLM, synthesizeSpeech, and playAudio from Example 1.

// Memory object to track conversation state
const conversationState = {
  character: "AI Dungeon Master",
  quest: "Find the lost artifact",
  inventory: ["map"],
};

// Fold the current state into the prompt so the LLM sees it on every turn
function buildPrompt(userInput: string): string {
  return `You are ${conversationState.character}. The quest is: ${conversationState.quest}. Inventory: ${conversationState.inventory.join(", ")}.\nUser says: ${userInput}\nRespond as an RPG guide.`;
}

async function rpgVoiceAgent(audioInput: Buffer): Promise<void> {
  const userText = await transcribeAudio(audioInput);
  const prompt = buildPrompt(userText);
  const llmResponse = await queryLLM(prompt);
  // The option names below are illustrative; use whatever your TTS wrapper supports
  const speechOutput = await synthesizeSpeech(llmResponse, {
    personality: "mysterious",
    accent: "british",
  });
  await playAudio(speechOutput);
}
```

Deployment Reference: Setting up Your Voice Agent

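Deploying a real-time LLM-powered voice bot can be achieved using cloud functions or edge devices. For server-side or backend integrations, using a Python video and audio calling SDK can simplify the process of adding audio and video communication features to your Python applications.

```typescript
// Example: Express.js endpoint for the voice agent
import express from "express";
import { voiceAgent } from "./voiceAgent"; // assumed module exporting Example 1

const app = express();
app.use(express.json({ limit: "10mb" }));

app.post("/voice-agent", async (req, res) => {
  // Assumes the client posts { audio: "<base64-encoded audio>" } as JSON
  const audioInput = Buffer.from(req.body.audio, "base64");
  await voiceAgent(audioInput);
  res.send({ status: "ok" });
});
```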

Customization:

- Modify the synthesizeSpeech function to select voices, accents, and tones for unique personalities.

- Use prompt engineering to tune your agent's behavior and context retention.

- For voice-enabled group interactions, integrating a Voice SDK can enable scalable live audio rooms and enhance conversational experiences.

These LLM examples for voice agent development offer a robust foundation for building both simple and advanced conversational systems in 2025.

Best Practices for Building LLM Voice Agents

To ensure reliable, efficient, and user-friendly voice agents, consider the following best practices:

Data Privacy

- Use anonymization and encryption for user data.

- Comply with GDPR, CCPA, and other relevant regulations.
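One way to approach the anonymization point above is to redact obvious identifiers from transcripts before they are logged or stored. The sketch below is a rough illustration only: the function name and both regular expressions are assumptions, they will miss many formats, and they are not a substitute for a vetted PII-detection tool or a compliance review.

```typescript
// Illustrative patterns only; real transcripts contain many more PII formats.
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;
// Matches runs of at least nine digits and separators that start and end with a digit.
const PHONE_PATTERN = /\+?\d[\d\s().-]{7,}\d/g;

function redactTranscript(text: string): string {
  return text
    .replace(EMAIL_PATTERN, "[EMAIL]")
    .replace(PHONE_PATTERN, "[PHONE]");
}
```

Redacting before storage means the raw identifiers never reach logs or analytics, which reduces what has to be encrypted and audited later.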

Latency and Efficiency

- Minimize inference time by caching frequent responses and using on-device LLMs when possible.

- Optimize STT and TTS pipeline latency for real-time interactions.

- For applications requiring telephony integration, a phone call API can help you manage calls efficiently and reliably.
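The caching suggestion above could look roughly like the following: a small time-limited cache keyed by a normalized utterance. The class, the `cachedQuery` helper, and the default limits are hypothetical, and this approach only suits replies that do not depend on the individual user or on conversation history.

```typescript
// Hypothetical cache for LLM replies to frequent, non-personalized questions.
class ResponseCache {
  private entries = new Map<string, { reply: string; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly maxEntries: number;

  constructor(ttlMs = 5 * 60 * 1000, maxEntries = 500) {
    this.ttlMs = ttlMs;
    this.maxEntries = maxEntries;
  }

  // Normalize so "What are your hours?" and "what are your hours" share a key.
  private static key(utterance: string): string {
    return utterance.toLowerCase().replace(/[^a-z0-9\s]/g, "").replace(/\s+/g, " ").trim();
  }

  get(utterance: string, now: number = Date.now()): string | undefined {
    const k = ResponseCache.key(utterance);
    const entry = this.entries.get(k);
    if (!entry) return undefined;
    if (entry.expiresAt <= now) {
      this.entries.delete(k); // entry has expired
      return undefined;
    }
    return entry.reply;
  }

  set(utterance: string, reply: string, now: number = Date.now()): void {
    const k = ResponseCache.key(utterance);
    // When full, evict the oldest entry; Map iterates in insertion order.
    if (!this.entries.has(k) && this.entries.size >= this.maxEntries) {
      const oldest = this.entries.keys().next().value;
      if (oldest !== undefined) this.entries.delete(oldest);
    }
    this.entries.set(k, { reply, expiresAt: now + this.ttlMs });
  }
}

// Check the cache first and call the LLM only on a miss.
async function cachedQuery(
  cache: ResponseCache,
  utterance: string,
  queryLLM: (text: string) => Promise<string>
): Promise<string> {
  const hit = cache.get(utterance);
  if (hit !== undefined) return hit;
  const reply = await queryLLM(utterance);
  cache.set(utterance, reply);
  return reply;
}
```

Because STT output varies in casing and punctuation, normalizing the key matters more here than in a typical text cache.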

Prompt Engineering for Voice Agents

Prompt design is critical for maintaining context and ensuring relevant responses. Here's an example:

```typescript
// userInput holds the transcribed user utterance
const prompt = `You are a helpful voice assistant. If the user mentions "order status", ask for the order number.\nUser: ${userInput}\nAssistant:`;
```
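The same instruction can also be expressed in the role-based message format that many chat-style LLM APIs accept, which makes it easier to carry earlier turns along. This is a sketch under assumptions: the type names, the `buildMessages` helper, the extra voice-oriented rules, and the history limit are all illustrative choices.

```typescript
type ChatRole = "system" | "user" | "assistant";

interface ChatMessage {
  role: ChatRole;
  content: string;
}

// Voice-specific rules: spoken replies should be short and free of markup.
const SYSTEM_PROMPT = [
  "You are a helpful voice assistant.",
  'If the user mentions "order status", ask for the order number.',
  "Keep replies to one or two short sentences, since they will be spoken aloud.",
  "Do not use markdown, lists, or emoji.",
].join(" ");

function buildMessages(
  history: ChatMessage[],
  userInput: string,
  maxHistory = 6
): ChatMessage[] {
  // Keep only the most recent turns so the prompt stays within the context window.
  const recent = maxHistory > 0 ? history.slice(-maxHistory) : [];
  return [
    { role: "system", content: SYSTEM_PROMPT },
    ...recent,
    { role: "user", content: userInput.trim() },
  ];
}
```

Keeping the rules in a system message separates standing instructions from the user's words, which tends to make the prompt easier to adjust than a single concatenated string.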

Voice UX and Accessibility

- Offer multiple voice personalities and languages.

- Provide visual feedback for speech input and output.

- Ensure compatibility with assistive technologies.

- For seamless integration of live audio features, consider leveraging a Voice SDK to enhance accessibility and user engagement.

By following these guidelines, developers can create LLM-powered voice bots that are inclusive, fast, and secure.

Challenges and Limitations

While LLM-powered voice agents demonstrate impressive capabilities, several challenges remain:

- Context Limitations: LLMs may lose context over long conversations or complex topics.

- Environmental Impact: Training and running large models require significant computational resources.

- Error Handling: LLMs can occasionally generate hallucinated or irrelevant responses. Implement fallback strategies and monitor outputs.
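A simple fallback strategy for the error-handling point above might wrap the LLM call with a timeout and a basic sanity check. The sketch below is illustrative only: the helper names, the limits, and the fallback wording are assumptions, and it cannot detect hallucinations. It only guards against failures, slow responses, and replies that are empty or too long to speak.

```typescript
const FALLBACK_REPLY = "Sorry, I'm having trouble with that. Could you rephrase?";

// Reject if the work does not settle within the given number of milliseconds.
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  return new Promise<T>((resolve, reject) => {
    const timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
    work.then(
      (value) => { clearTimeout(timer); resolve(value); },
      (err) => { clearTimeout(timer); reject(err); }
    );
  });
}

// Treat replies that are empty or far too long to speak comfortably as unusable.
function isUsableReply(reply: string, maxChars = 600): boolean {
  const trimmed = reply.trim();
  return trimmed.length > 0 && trimmed.length <= maxChars;
}

async function safeReply(
  queryLLM: (text: string) => Promise<string>,
  userText: string,
  timeoutMs = 5000
): Promise<string> {
  try {
    const reply = await withTimeout(queryLLM(userText), timeoutMs);
    return isUsableReply(reply) ? reply.trim() : FALLBACK_REPLY;
  } catch (err) {
    // In a real system, record the error here so outputs can be monitored.
    return FALLBACK_REPLY;
  }
}
```

In a voice setting a short spoken apology is usually preferable to silence, which is why every failure path returns something that can be synthesized.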

Future Trends in LLM Voice Agents

In 2025, expect LLM-powered voice agents to become more real-time, multimodal, and highly personalized. Users will demand adaptive voice assistants that integrate seamlessly across devices and contexts, with evolving standards for accessibility and trust.

Conclusion

LLM-powered voice agents let engineers build highly capable, context-aware conversational AI. With advances in LLMs, STT, TTS, and SDKs, creating voice agents is more accessible than ever. Start experimenting with the provided code and frameworks to unlock the potential of voice UX in 2025 and beyond. If you're ready to build your own voice agent or communication solution, try it for free and explore the possibilities today.
