Introduction

Large Language Models (LLMs) have become foundational to the evolution of conversational AI, powering everything from chatbots to complex voice assistants. These models, trained on enormous datasets, can understand and generate human-like language, making them ideal for natural and dynamic conversations. With the growing adoption of voice-driven interfaces, the demand for the best LLM for voice bot applications has surged in 2025. Selecting the right LLM impacts everything from latency and multilingual support to context retention and scalability. In this guide, we explore what makes an LLM suitable for voice bots, compare leading models, and provide practical insights for developers looking to build next-generation voice AI.

What is an LLM for Voice Bots?

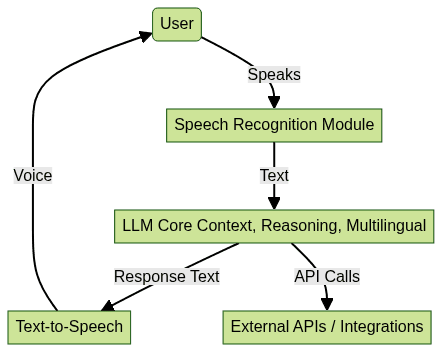

A large language model (LLM) is a deep neural network trained to process, understand, and generate text that mimics human conversation. In a voice bot, the LLM does more than parse text: it drives a conversational system that understands spoken input, maintains context, and responds in real time. The shift from traditional text-based LLMs to models optimized for voice AI is driven by advances in speech recognition, real-time processing, and natural language understanding. Today’s voice bots combine speech-to-text with LLM-driven intent recognition and dynamic dialogue management, enabling context-aware interactions across platforms and devices. For developers adding real-time audio features, integrating a Voice SDK can streamline the process and improve the user experience.

Core Features of the Best LLM for Voice Bot

Selecting the best LLM for a voice bot hinges on several technical features:

- Real-Time Response and Low Latency: Voice bots require near-instant feedback to maintain a natural conversation flow. LLMs optimized for low inference latency are crucial for real-time voice agents.

- Context Retention & Agentic Memory: The ability to remember context across turns (and even sessions) ensures more coherent and personalized interactions.

- Multilingual Support & Speech-to-Speech Reasoning: Leading LLMs support multiple languages, dialects, and even cross-lingual conversations, essential for global deployments. Speech-to-speech reasoning enables seamless voice translation and paraphrasing.

- Integration with APIs & Platforms: Modern LLMs offer robust APIs and SDKs for easy integration with popular voice bot platforms, enabling scalable deployment across enterprise and consumer environments. Leveraging a Video Calling API or a Live Streaming API SDK can further expand your bot’s communication capabilities.
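Context retention is usually implemented by resending recent conversation history with every LLM request, trimmed to fit the model’s context window. The sketch below keeps a rolling history under a fixed budget, dropping the oldest turns first while always sending the system prompt. It assumes a chat-style list of `{"role", "content"}` messages and approximates token counts by counting words; a real deployment would use the target model’s own tokenizer. `ConversationMemory` and its parameters are hypothetical names for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


def approx_tokens(text: str) -> int:
    # Rough stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


@dataclass
class ConversationMemory:
    """Hypothetical rolling context buffer for one voice bot session."""
    system_prompt: str
    max_tokens: int = 2000
    turns: List[Dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})
        self._trim()

    def _trim(self) -> None:
        # Drop the oldest turns until history plus system prompt fits the budget.
        budget = self.max_tokens - approx_tokens(self.system_prompt)
        while self.turns and sum(approx_tokens(t["content"]) for t in self.turns) > budget:
            self.turns.pop(0)

    def messages(self) -> List[Dict[str, str]]:
        # Message list to send with the next LLM request.
        return [{"role": "system", "content": self.system_prompt}] + self.turns


if __name__ == "__main__":
    memory = ConversationMemory("You are a helpful voice assistant.", max_tokens=20)
    memory.add("user", "Book a table for two tomorrow at seven")
    memory.add("assistant", "Sure, which restaurant would you like?")
    memory.add("user", "The Italian place downtown")
    for m in memory.messages():
        print(m["role"], ":", m["content"])
```

With the small 20-word budget in the usage example, the first user turn is evicted once the third turn arrives, so the bot keeps the most recent exchange. Summarizing evicted turns instead of discarding them is a common refinement.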

Top LLMs for Voice Bots in 2025

DiVA Llama 3 V0 8b

DiVA Llama 3 V0 8b is a cutting-edge LLM tailored for speech-based applications. It’s trained on vast multilingual voice datasets and incorporates real-time speech-to-text and text-to-speech modules. DiVA Llama 3’s agentic memory tracks dialogue context across extended conversations, making it ideal for enterprise voice bots, customer support, and voice-enabled devices. Its API allows seamless integration, and its architecture is optimized for low-latency inference, ensuring quick, human-like responses. For those building voice bots that handle phone interactions, integrating a phone call API can be invaluable for connecting with users over traditional telephony networks.

Ultravox

Ultravox introduces a Speech Language Model (SLM) approach, directly ingesting and understanding speech without intermediate text conversion. Its native speech reasoning engine allows for natural, fluid, and fast dialogues. Ultravox excels in speech-to-speech tasks, supports a broad range of languages, and is engineered for low-latency edge deployments. Its API supports both cloud and on-premises scenarios, making it a top choice for privacy-sensitive industries. Developers looking for a robust Voice SDK can leverage such tools to accelerate integration and deployment.

DeepSeek-V3

DeepSeek-V3 leverages a Mixture-of-Experts (MoE) architecture, enabling massive scalability for voice bot applications. Its modular design allows developers to fine-tune components for specific speech recognition, translation, or dialogue tasks. DeepSeek-V3 is known for its high benchmark scores in multilingual and low-resource language scenarios, making it a strong contender for global voice AI solutions. If you’re working with Python, a Python video and audio calling SDK can be a powerful addition to your toolkit for building advanced voice and video features.

Aivah

Aivah is a multimodal LLM designed for easy, no-code deployment of voice bots. It combines speech, text, and visual reasoning for richer conversational experiences. With its visual programming interface, developers and non-developers alike can launch voice bots without writing extensive code. Aivah’s scalable cloud backend ensures robust performance in enterprise settings. To further enhance your bot’s capabilities, consider integrating a Voice SDK for seamless audio room experiences.

Millis AI

Millis AI stands out for its ultra-low latency and straightforward integration process. It’s optimized for edge devices, making it well suited to IoT and embedded voice applications where response time is critical. For projects requiring voice communication over phone lines, a phone call API can be essential for bridging digital and telephony channels.

Comparative Feature Matrix: Best LLM for Voice Bot

| Model | Low Latency | Multilingual | Agentic Memory | Speech-to-Speech | No-Code Deploy | Edge Support |
|---|---|---|---|---|---|---|
| DiVA Llama 3 V0 8b | Yes | Yes | Yes | Partial | No | Yes |
| Ultravox | Yes | Yes | Yes | Yes | No | Yes |
| DeepSeek-V3 | Yes | Yes | Partial | Partial | No | Yes |
| Aivah | Yes | Yes | Yes | Yes | Yes | Partial |
| Millis AI | Ultra-low | Partial | Partial | No | No | Yes |

How to Choose the Best LLM for Your Voice Bot

Choosing the best LLM for a voice bot in 2025 involves careful evaluation of several factors:

- Latency: Low inference times are essential for a seamless voice experience. Evaluate the LLM’s response times under real-world conditions.

- Accuracy: Consider the model’s benchmark scores for speech recognition, intent detection, and conversation quality.

- Cost & Scalability: Assess the model’s pricing (per thousand tokens, per session, or flat rate) and its ability to scale across geographies and workloads.

- Ease of Integration: Look for LLMs with well-documented APIs, SDKs, and support for popular voice bot platforms (Dialogflow, Rasa, Alexa Skills Kit, etc.). Using a Voice SDK can simplify the process of adding real-time voice features to your application.

- Support & Ecosystem: Consider the availability of community and enterprise support, as well as integrations with third-party services.
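When evaluating latency under real-world conditions, it helps to time many end-to-end calls and look at percentiles rather than a single average, since the occasional slow reply is what a caller actually notices. The sketch below times any callable and reports p50, p95, and maximum latency in milliseconds. `call_llm` is a hypothetical placeholder (it just sleeps) for a real request to your provider; for a voice bot you would ideally wrap the whole STT, LLM, and TTS round trip.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Time `call` repeatedly; return p50, p95, and max latency in milliseconds."""
    if runs < 2:
        raise ValueError("need at least 2 runs to compute percentiles")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    # n=100 yields 99 cut points: index 49 is the 50th percentile, 94 the 95th.
    # method="inclusive" keeps estimates within the observed min..max range.
    cuts = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50_ms": cuts[49], "p95_ms": cuts[94], "max_ms": max(samples)}


def call_llm() -> str:
    # Hypothetical placeholder: replace with a real request to your LLM provider.
    time.sleep(0.01)
    return "ok"


if __name__ == "__main__":
    print(measure_latency(call_llm, runs=10))
```

Run the benchmark from the same region and network your production bot will use, since network round trips often dominate model inference time.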

Use Case Matching

- Customer Support Automation: DiVA Llama 3 V0 8b and Ultravox offer strong context retention and speech reasoning.

- Virtual Assistants: Aivah and DeepSeek-V3 bring multimodal capabilities and easy deployment.

- Voice-Enabled Devices: Millis AI is optimal for edge devices due to its ultra-low latency.

Pricing and Cost-Effectiveness

Most providers offer tiered pricing based on usage, with discounts for high-volume or enterprise plans. Open-source options may reduce licensing costs but require investment in infrastructure and maintenance.
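Per-token pricing is easiest to compare across providers by converting it into a cost per conversation and per month. The sketch below does that arithmetic. The token counts, call volume, and the $0.002 / $0.006 per thousand input/output tokens are made-up placeholders, not any provider’s actual rates; substitute figures from your own traffic and the provider’s price list.

```python
def conversation_cost(input_tokens: int, output_tokens: int,
                      price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Cost of one conversation under per-1k-token pricing."""
    return (input_tokens / 1000) * price_in_per_1k + (output_tokens / 1000) * price_out_per_1k


def monthly_cost(conversations_per_day: int, per_conversation: float, days: int = 30) -> float:
    """Projected monthly spend at a steady daily conversation volume."""
    return conversations_per_day * per_conversation * days


if __name__ == "__main__":
    # Placeholder inputs: 1,200 input and 400 output tokens per conversation.
    per_call = conversation_cost(1200, 400, price_in_per_1k=0.002, price_out_per_1k=0.006)
    print(f"Per conversation: ${per_call:.4f}")
    print(f"Per month (5,000/day): ${monthly_cost(5000, per_call):,.2f}")
```

With these placeholder numbers each conversation costs $0.0048, or about $720 per month at 5,000 conversations per day. For a self-hosted open-source model, the equivalent calculation replaces token prices with GPU hours and operations effort.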

Code Integration Example

Here’s a Python snippet showing basic integration with a hypothetical LLM API (e.g., DiVA Llama 3 V0 8b):

```python
import requests

# Hypothetical endpoint and response schema, for illustration only.
API_URL = "https://api.divallama3.com/v1/voicebot"
headers = {"Authorization": "Bearer YOUR_API_KEY"}
payload = {"text": "What's the weather today?", "language": "en"}

# Send the user's transcribed utterance to the LLM endpoint.
response = requests.post(API_URL, headers=headers, json=payload, timeout=10)
if response.ok:
    voice_response = response.json()["voice_output"]
    print(voice_response)
else:
    print("Error:", response.status_code)
```

Implementation Example: Integrating a Leading LLM with a Voice Bot

To deploy an LLM-powered voice bot in production, follow these steps:

- Choose Your LLM Provider: Sign up for access to the LLM (e.g., Ultravox, DiVA Llama 3).

- Configure API Keys and Endpoints: Securely store your credentials and endpoint URLs.

- Integrate Speech-to-Text (STT) and Text-to-Speech (TTS): Use SDKs or third-party APIs for seamless audio processing.

- Implement the LLM Call: Pass user input (converted to text) to the LLM API for conversational logic.
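The steps above form a cascaded STT → LLM → TTS loop. The sketch below wires one conversational turn together and reads the API key from an environment variable instead of hard-coding it. `transcribe`, `generate_reply`, and `synthesize` are hypothetical placeholders, stubbed so the example runs, that you would replace with calls to your chosen STT service, LLM API, and TTS engine; `VOICEBOT_API_KEY` is likewise an assumed variable name.

```python
import os
from typing import Callable


def load_api_key(var_name: str = "VOICEBOT_API_KEY") -> str:
    # Read credentials from the environment instead of hard-coding them.
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable")
    return key


# Hypothetical stubs: swap in real STT, LLM, and TTS client calls.
def transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio is already text


def generate_reply(text: str, api_key: str) -> str:
    return f"You said: {text}"


def synthesize(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the text is rendered audio


def handle_turn(audio: bytes, api_key: str,
                stt: Callable[[bytes], str] = transcribe,
                llm: Callable[[str, str], str] = generate_reply,
                tts: Callable[[str], bytes] = synthesize) -> bytes:
    """One conversational turn: speech in, speech out."""
    user_text = stt(audio)                # speech-to-text
    reply_text = llm(user_text, api_key)  # LLM conversational logic
    return tts(reply_text)                # text-to-speech


if __name__ == "__main__":
    os.environ.setdefault("VOICEBOT_API_KEY", "demo-key")  # demo only
    key = load_api_key()
    print(handle_turn(b"What's the weather today?", key))
```

Passing the three stages in as parameters keeps providers swappable, which makes it easy to benchmark alternatives or replace the STT and LLM stages with a single speech-native model.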

Example: Python Integration with Ultravox

```python
import requests

# Illustrative endpoint and response fields; confirm them against
# Ultravox's current API reference before use.
API_URL = "https://api.ultravox.ai/v1/converse"
headers = {"Authorization": "Bearer YOUR_VOX_API_KEY"}

def send_audio(audio_path):
    """Upload a recorded utterance and print the transcript and LLM reply."""
    with open(audio_path, "rb") as audio_file:
        files = {"audio": audio_file}
        response = requests.post(API_URL, headers=headers, files=files, timeout=30)
    if response.ok:
        result = response.json()
        print("Transcription:", result["transcript"])
        print("LLM Response:", result["response_text"])
    else:
        print("Error:", response.status_code)

send_audio("sample_user_input.wav")
```

Performance Optimization Tips

- Batch Requests: Where possible, batch multiple requests to minimize overhead.

- Context Windows: Use session tokens or conversation history APIs to maintain context.

- Multilingual Support: Set language parameters dynamically based on user profile or input.
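Setting the language parameter dynamically can be as simple as preferring an explicit user-profile setting, then the detected language of the current utterance, then a default, while only passing codes the model supports. The sketch below assumes language tags such as `es-MX` and a hypothetical `SUPPORTED_LANGUAGES` set; substitute the list your provider actually documents.

```python
from typing import Optional

# Hypothetical set of base language codes the chosen model supports.
SUPPORTED_LANGUAGES = {"en", "es", "fr", "de", "hi"}
DEFAULT_LANGUAGE = "en"


def pick_language(profile_lang: Optional[str], detected_lang: Optional[str]) -> str:
    """Choose the `language` request parameter for the next turn."""
    for candidate in (profile_lang, detected_lang):
        if candidate:
            # Normalize regional variants such as "es-MX" to their base code.
            base = candidate.split("-")[0].lower()
            if base in SUPPORTED_LANGUAGES:
                return base
    return DEFAULT_LANGUAGE


if __name__ == "__main__":
    print(pick_language(None, "es-MX"))  # → es (detected language used)
    print(pick_language("fr", "en"))     # → fr (profile setting wins)
    print(pick_language(None, "ja"))     # → en (unsupported, falls back)
```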

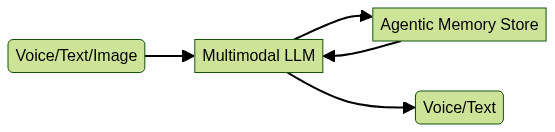

Advanced Capabilities: Multimodal Reasoning & Agentic Memory

Modern voice bots increasingly rely on multimodal LLMs: models that process speech, text, and sometimes images or video. This enables richer, more context-aware conversations. Agentic memory allows bots to remember user preferences, prior topics, and even emotional tone across sessions, enhancing personalization and engagement.
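Remembering users across sessions requires somewhere durable to keep facts about each of them. As a minimal sketch, assuming a single-process bot, the hypothetical `UserMemoryStore` below saves per-user preferences to a JSON file and renders them as a line of context that can be prepended to the next session’s prompt. Production systems would more likely use a database or vector store, with retention limits suited to the data involved.

```python
import json
from pathlib import Path


class UserMemoryStore:
    """Hypothetical cross-session memory backed by a JSON file."""

    def __init__(self, path: str):
        self.path = Path(path)
        self.data = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, user_id: str, key: str, value: str) -> None:
        self.data.setdefault(user_id, {})[key] = value
        # Persist immediately so the fact survives a restart.
        self.path.write_text(json.dumps(self.data, indent=2))

    def context_for(self, user_id: str) -> str:
        """Summarize stored facts as prompt context (empty if none)."""
        facts = self.data.get(user_id, {})
        if not facts:
            return ""
        items = "; ".join(f"{k}: {v}" for k, v in sorted(facts.items()))
        return f"Known about this user: {items}."


if __name__ == "__main__":
    store = UserMemoryStore("memory.json")
    store.remember("user-42", "preferred_language", "es")
    store.remember("user-42", "favorite_topic", "football")
    # A later session (even after a restart) reloads the same facts.
    print(UserMemoryStore("memory.json").context_for("user-42"))
```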

Challenges and Limitations of LLMs for Voice Bots

Despite their power, LLMs for voice bots face notable challenges:

- Context Window Limits: Every LLM has a finite context window, so long conversations must be truncated or summarized to fit, which can cause the bot to lose earlier details.

- Hallucinations: LLMs may generate plausible-sounding but inaccurate responses.

- Hardware/Compute Requirements: Real-time voice LLMs require significant GPU/TPU resources, especially for on-premises deployments.

- Privacy & Compliance: Handling voice data in regulated sectors (healthcare, finance) necessitates strict privacy controls and auditability.
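One common privacy control is redacting personal data from transcripts before they are logged or sent to a third-party model. The sketch below masks email addresses and phone-number-like digit runs using regular expressions. The patterns are simplified assumptions that will miss some formats and over-match others (dates, for example); regulated deployments generally need a dedicated PII-detection service and a review of what rules such as HIPAA or PCI DSS actually require.

```python
import re

# Simplified patterns (assumptions): real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(transcript: str) -> str:
    """Mask emails first, then phone-number-like sequences, before logging."""
    transcript = EMAIL_RE.sub("[EMAIL]", transcript)
    return PHONE_RE.sub("[PHONE]", transcript)


if __name__ == "__main__":
    print(redact("Call me at +1 (555) 010-2030 or write to jane.doe@example.com"))
    # → Call me at [PHONE] or write to [EMAIL]
```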

Conclusion: Future Trends in Best LLM for Voice Bot

The landscape of LLMs for voice bots continues to evolve rapidly. Expect advances in agentic AI, speech-to-speech reasoning, and real-time multilingual support throughout 2025. Developers and enterprises should focus on models that balance latency, scalability, and integration flexibility. Stay tuned as open-source LLMs and low-code platforms democratize access to next-generation voice AI. Ready to build? Start prototyping your voice bot with a leading LLM today!


FAQ