Introduction to AI Voice Agents in Voice Agent API

AI Voice Agents are changing the way people interact with technology. These agents can understand and respond to human speech, which makes them useful in customer service, healthcare, and many other industries.

What is an AI Voice Agent?

An AI Voice Agent is a software application that uses artificial intelligence to process and respond to voice commands. These agents are capable of understanding natural language, processing the information, and delivering a coherent response. They are often integrated into systems to automate tasks, provide customer support, and enhance user experience.

Why are they important for the Voice Agent API industry?

AI Voice Agents are crucial in the voice agent API industry because they streamline interactions between users and systems. They enable hands-free operations, improve accessibility, and can be customized for specific applications, making them a versatile tool for businesses.

Core Components of a Voice Agent

The primary components of a voice agent include:

- Speech-to-Text (STT): Converts spoken language into text.

- Large Language Model (LLM): Processes the text to understand and generate responses.

- Text-to-Speech (TTS): Converts the text response back into speech.
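To make the hand-off between the three components concrete, here is a minimal sketch of one cascaded turn in plain Python. The `transcribe`, `generate_reply`, and `synthesize` functions are hypothetical stand-ins: they work on strings and bytes instead of calling real STT, LLM, or TTS services. Only the order of the stages reflects the description above.

```python
from typing import Callable

# Hypothetical stand-ins for real services. Each stage consumes only the
# previous stage's output, which is what makes the pipeline "cascaded".
def transcribe(audio: bytes) -> str:
    """STT stand-in: treat the audio bytes as UTF-8 encoded speech."""
    return audio.decode("utf-8")

def generate_reply(text: str) -> str:
    """LLM stand-in: a canned rule instead of a language model."""
    if "hello" in text.lower():
        return "Hello! How can I help?"
    return f"You said: {text}"

def synthesize(text: str) -> bytes:
    """TTS stand-in: encode the reply text as bytes instead of audio."""
    return text.encode("utf-8")

def run_turn(
    audio: bytes,
    stt: Callable[[bytes], str] = transcribe,
    llm: Callable[[str], str] = generate_reply,
    tts: Callable[[str], bytes] = synthesize,
) -> bytes:
    """Run one user turn through STT -> LLM -> TTS."""
    text = stt(audio)
    reply = llm(text)
    return tts(reply)

if __name__ == "__main__":
    print(run_turn(b"Hello there"))
```

Because each stage is passed in as a function, replacing one provider does not touch the other stages. The `CascadingPipeline` used later in this tutorial follows the same idea, taking one plugin per stage.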

What You'll Build in This Tutorial

In this tutorial, you'll learn how to build an AI Voice Agent using the VideoSDK framework. We'll guide you through setting up the environment, creating the agent, and testing it in a playground environment.

Architecture and Core Concepts

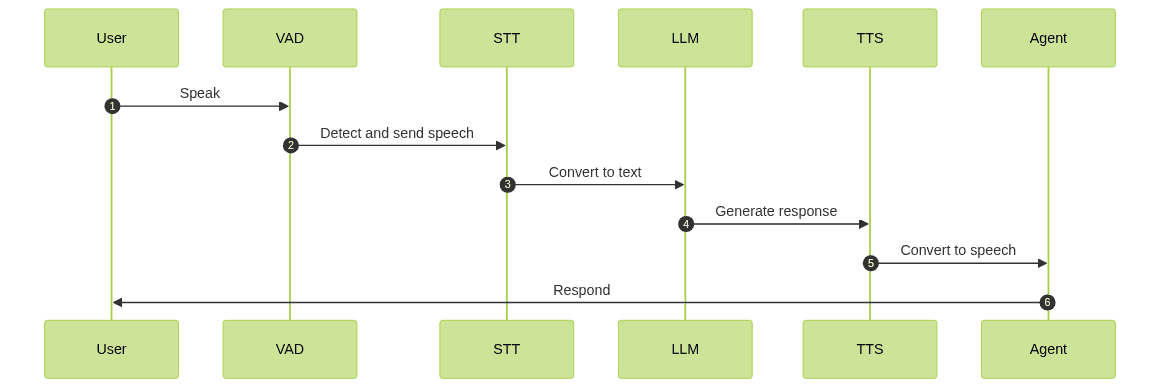

High-Level Architecture Overview

The architecture of an AI Voice Agent involves several stages, from capturing user speech to generating a response. Here's a simplified flow:

- User Speech: The user speaks into the microphone.

- Voice Activity Detection (VAD): Detects when the user starts and stops speaking.

- Speech-to-Text (STT): Converts speech into text.

- Large Language Model (LLM): Processes the text to understand the intent and generate a response.

- Text-to-Speech (TTS): Converts the response text back into speech.

- Agent Response: The agent speaks back to the user.
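The pipeline in this tutorial uses Silero VAD, a neural model that scores audio frames. As a simpler illustration of what voice activity detection does, the sketch below swaps in a classic energy threshold instead: it computes the root-mean-square (RMS) energy of each frame of 16-bit PCM samples and reports the frame ranges where the energy stays above a threshold. The frame size, threshold, and `hangover` values are assumptions chosen for the example, not Silero's parameters.

```python
import math
from typing import List, Optional, Sequence, Tuple

def frame_rms(frame: Sequence[int]) -> float:
    """Root-mean-square energy of one frame of PCM samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def detect_speech(
    samples: Sequence[int],
    frame_size: int = 160,     # 10 ms at an assumed 16 kHz sample rate
    threshold: float = 500.0,  # assumed RMS level separating speech from silence
    hangover: int = 2,         # quiet frames tolerated before a segment ends
) -> List[Tuple[int, int]]:
    """Return (start_frame, end_frame) pairs, end exclusive, for detected speech."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    quiet = 0
    n_frames = len(samples) // frame_size
    for i in range(n_frames):
        frame = samples[i * frame_size:(i + 1) * frame_size]
        if frame_rms(frame) >= threshold:
            if start is None:
                start = i  # speech begins
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet > hangover:
                # The segment ends at the first quiet frame of this run.
                segments.append((start, i - quiet + 1))
                start, quiet = None, 0
    if start is not None:
        # Speech was still active (or only briefly paused) at the end of the input.
        segments.append((start, n_frames - quiet))
    return segments
```

The `hangover` counter keeps short pauses between words from splitting one utterance into several segments. Deciding whether a longer pause means the user has finished their turn is a separate job, handled by the turn detector.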

Understanding Key Concepts in the VideoSDK Framework

- Agent: The core class representing your bot, handling interactions and responses.

- CascadingPipeline: Manages the flow of audio processing through various stages like STT, LLM, and TTS.

- VAD & TurnDetector: Tools to determine when the agent should listen and respond, ensuring seamless interaction.
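One way to picture how these components decide when the agent should listen and when it should respond is a small state machine. The sketch below is a hypothetical model for illustration only, not VideoSDK's internal implementation; the state and event names are invented for this example, and letting user speech interrupt the agent while it is speaking is an assumption of the model.

```python
from enum import Enum, auto

class State(Enum):
    LISTENING = auto()  # waiting for or receiving user speech
    THINKING = auto()   # the user's turn ended; STT and LLM produce a reply
    SPEAKING = auto()   # TTS audio is playing back

# (current state, event) -> next state. Event names are hypothetical.
TRANSITIONS = {
    (State.LISTENING, "end_of_turn"): State.THINKING,    # turn detector fired
    (State.THINKING, "reply_ready"): State.SPEAKING,     # TTS audio available
    (State.SPEAKING, "playback_done"): State.LISTENING,  # agent finished talking
    (State.SPEAKING, "user_speech"): State.LISTENING,    # assumed: VAD heard the user, so stop and listen
}

def step(state: State, event: str) -> State:
    """Return the next state; events without a transition leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)

if __name__ == "__main__":
    state = State.LISTENING
    for event in ["end_of_turn", "reply_ready", "playback_done"]:
        state = step(state, event)
        print(event, "->", state.name)
```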

Setting Up the Development Environment

Prerequisites

Before you begin, ensure you have:

- Python 3.11+ installed on your machine.

- A VideoSDK account. You can sign up at app.videosdk.live.
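If you are unsure which interpreter `python3` resolves to, a quick standard-library check like the following confirms the 3.11+ requirement before you install anything. Run it with the same interpreter you plan to use for the agent.

```python
import sys
from typing import Optional, Sequence, Tuple

REQUIRED: Tuple[int, int] = (3, 11)  # minimum version from the prerequisites

def meets_requirement(version: Optional[Sequence[int]] = None,
                      required: Tuple[int, int] = REQUIRED) -> bool:
    """True if (major, minor) of `version` (default: this interpreter) >= required."""
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) >= required

if __name__ == "__main__":
    found = ".".join(str(part) for part in sys.version_info[:3])
    status = "OK" if meets_requirement() else "too old, 3.11 or newer is required"
    print(f"Python {found}: {status}")
```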

Step 1: Create a Virtual Environment

Creating a virtual environment helps manage dependencies and avoid conflicts. Run the following commands:

```bash
python3 -m venv venv
source venv/bin/activate  # On Windows use `venv\Scripts\activate`
```

Step 2: Install Required Packages

Install the necessary packages using pip:

```bash
pip install videosdk
pip install python-dotenv
```

Step 3: Configure API Keys in a .env File

Create a `.env` file in your project directory and add your VideoSDK API key:

```
VIDEOSDK_API_KEY=your_api_key_here
```
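The pipeline in this tutorial also calls Deepgram, OpenAI, and ElevenLabs, and each of these services needs its own API key. To catch a missing key at startup rather than in the middle of a conversation, you can run a small fail-fast check like the one below right after `load_dotenv()` from the `python-dotenv` package installed in Step 2. The provider variable names are assumptions based on common conventions; confirm the exact names each plugin reads in its documentation.

```python
import os
from typing import Iterable, List, Mapping, Optional

# VIDEOSDK_API_KEY comes from this tutorial. The provider variable names
# below are assumptions; check each plugin's documentation.
REQUIRED_KEYS = (
    "VIDEOSDK_API_KEY",
    "DEEPGRAM_API_KEY",
    "OPENAI_API_KEY",
    "ELEVENLABS_API_KEY",
)

def missing_keys(names: Iterable[str] = REQUIRED_KEYS,
                 env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the names that are unset or blank in `env` (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name, "").strip()]

if __name__ == "__main__":
    # In the real script, call dotenv.load_dotenv() first so that the values
    # in .env are copied into os.environ before this check runs.
    missing = missing_keys()
    if missing:
        print("Missing environment variables:", ", ".join(missing))
    else:
        print("All required keys are set.")
```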

Building the AI Voice Agent: A Step-by-Step Guide

Here is the complete, runnable code for the AI Voice Agent using the VideoSDK framework:

```python
import asyncio

from dotenv import load_dotenv
from videosdk.agents import Agent, AgentSession, CascadingPipeline, JobContext, RoomOptions, WorkerJob, ConversationFlow
from videosdk.plugins.silero import SileroVAD
from videosdk.plugins.turn_detector import TurnDetector, pre_download_model
from videosdk.plugins.deepgram import DeepgramSTT
from videosdk.plugins.openai import OpenAILLM
from videosdk.plugins.elevenlabs import ElevenLabsTTS

# Load the API keys from .env into the process environment
load_dotenv()

# Pre-download the Turn Detector model
pre_download_model()

agent_instructions = "You are a 'Voice Agent API' specialist integrated within the VideoSDK framework. Your persona is that of a knowledgeable and efficient technical assistant. Your primary capabilities include: 1) Providing detailed information about the 'Voice Agent API', including its features, integration steps, and best practices. 2) Assisting developers with troubleshooting common issues related to the API. 3) Offering guidance on optimizing API usage for various applications. However, you must adhere to the following constraints: 1) You are not a substitute for official technical support and should direct users to official documentation or support channels for complex issues. 2) You must not provide any proprietary or confidential information. 3) Always remind users to review the latest API documentation for updates and changes."


class MyVoiceAgent(Agent):
    def __init__(self):
        super().__init__(instructions=agent_instructions)

    async def on_enter(self):
        await self.session.say("Hello! How can I help?")

    async def on_exit(self):
        await self.session.say("Goodbye!")


async def start_session(context: JobContext):
    # Create agent and conversation flow
    agent = MyVoiceAgent()
    conversation_flow = ConversationFlow(agent)

    # Create pipeline
    pipeline = CascadingPipeline(
        stt=DeepgramSTT(model="nova-2", language="en"),
        llm=OpenAILLM(model="gpt-4o"),
        tts=ElevenLabsTTS(model="eleven_flash_v2_5"),
        vad=SileroVAD(threshold=0.35),
        turn_detector=TurnDetector(threshold=0.8),
    )

    session = AgentSession(
        agent=agent,
        pipeline=pipeline,
        conversation_flow=conversation_flow,
    )

    try:
        await context.connect()
        await session.start()
        # Keep the session running until manually terminated
        await asyncio.Event().wait()
    finally:
        # Clean up resources when done
        await session.close()
        await context.shutdown()


def make_context() -> JobContext:
    room_options = RoomOptions(
        # room_id="YOUR_MEETING_ID",  # Set to join a pre-created room; omit to auto-create
        name="VideoSDK Cascaded Agent",
        playground=True,
    )

    return JobContext(room_options=room_options)


if __name__ == "__main__":
    job = WorkerJob(entrypoint=start_session, jobctx=make_context)
    job.start()
```

Step 4.1: Generating a VideoSDK Meeting ID

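To create a meeting ID, use the following curl command:

```bash
curl -X POST "https://api.videosdk.live/v1/meetings" \
  -H "Authorization: Bearer YOUR_API_KEY" \
  -H "Content-Type: application/json"
```

To have the agent join that room, pass the returned ID as `room_id` in `RoomOptions` inside `make_context`. If you omit `room_id`, a room is created automatically.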
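If you prefer to stay in Python, the same request can be made with the standard library. This sketch mirrors the curl command (same URL, same headers, empty POST body) and does not assume any particular field names in the response, so inspect the returned JSON to find the meeting ID. The `Authorization` format is copied from the curl example; if the API rejects it, check the VideoSDK REST API reference for the expected header.

```python
import json
import urllib.request

MEETINGS_URL = "https://api.videosdk.live/v1/meetings"  # endpoint from the curl example

def build_meeting_request(api_key: str) -> urllib.request.Request:
    """Build a POST request equivalent to the curl command above."""
    return urllib.request.Request(
        MEETINGS_URL,
        data=b"",  # empty body, like `curl -X POST` without -d
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )

def create_meeting(api_key: str, timeout: float = 10.0) -> dict:
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(build_meeting_request(api_key), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `create_meeting(os.environ["VIDEOSDK_API_KEY"])` sends the request with the key from your `.env` file, once it has been loaded into the environment.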

Step 4.2: Creating the Custom Agent Class

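The `MyVoiceAgent` class extends the base `Agent` class. It passes the system instructions to the base class and defines what the agent says when it enters and exits a session:

```python
from videosdk.agents import Agent


class MyVoiceAgent(Agent):
    def __init__(self):
        # agent_instructions is the system prompt string defined in the full listing
        super().__init__(instructions=agent_instructions)

    # Greeting spoken when the agent enters the session
    async def on_enter(self):
        await self.session.say("Hello! How can I help?")

    # Farewell spoken when the agent exits the session
    async def on_exit(self):
        await self.session.say("Goodbye!")
```

Step 4.3: Defining the Core Pipeline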

The `CascadingPipeline` is central to the agent because it defines the flow of audio processing:

```python
pipeline = CascadingPipeline(
    stt=DeepgramSTT(model="nova-2", language="en"),  # speech -> text
    llm=OpenAILLM(model="gpt-4o"),                    # text -> reply text
    tts=ElevenLabsTTS(model="eleven_flash_v2_5"),     # reply text -> speech
    vad=SileroVAD(threshold=0.35),                    # detects when the user is speaking
    turn_detector=TurnDetector(threshold=0.8),        # decides when the user's turn ends
)
```

Each plugin in the pipeline serves a specific function:

- STT (Deepgram): Converts speech to text.

- LLM (OpenAI): Processes the text and generates responses.

- TTS (ElevenLabs): Converts the response text back to speech.

- VAD (Silero): Detects when the user is speaking.

- TurnDetector: Determines when to switch between listening and speaking.
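Both `SileroVAD` and `TurnDetector` take a `threshold` argument, which is typically a cut-off on a model score. The sketch below shows one plausible way an end-of-turn decision could combine the two signals: VAD reports that the user has gone quiet, and an end-of-utterance probability (a plain number standing in for the turn detector model's output) must reach the threshold before the agent replies. The `max_silence_ms` fallback is a hypothetical parameter added for the example. This is not the TurnDetector plugin's actual logic; check the plugin documentation for the exact meaning of its `threshold`.

```python
from dataclasses import dataclass

@dataclass
class TurnSignal:
    user_speaking: bool      # latest VAD decision
    silence_ms: int          # time since VAD last detected speech
    end_of_turn_prob: float  # stand-in for a turn-detector score in [0, 1]

def should_respond(sig: TurnSignal,
                   threshold: float = 0.8,     # mirrors TurnDetector(threshold=0.8)
                   max_silence_ms: int = 2000  # hypothetical fallback timeout
                   ) -> bool:
    """Decide whether the user's turn is over and the agent should reply."""
    if sig.user_speaking:
        return False  # never reply while the user is still talking
    if sig.end_of_turn_prob >= threshold:
        return True   # the model is confident the utterance is complete
    return sig.silence_ms >= max_silence_ms  # long pause: reply anyway
```

In this sketch, a higher threshold makes the agent wait for stronger evidence that the user has finished (fewer interruptions, slower replies), while a lower one makes it reply sooner at the risk of cutting the user off mid-thought.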

Step 4.4: Managing the Session and Startup Logic

The `start_session` function initializes the session and manages the lifecycle of the agent:

```python
async def start_session(context: JobContext):
    # Create agent and conversation flow
    agent = MyVoiceAgent()
    conversation_flow = ConversationFlow(agent)

    # Create pipeline
    pipeline = CascadingPipeline(
        stt=DeepgramSTT(model="nova-2", language="en"),
        llm=OpenAILLM(model="gpt-4o"),
        tts=ElevenLabsTTS(model="eleven_flash_v2_5"),
        vad=SileroVAD(threshold=0.35),
        turn_detector=TurnDetector(threshold=0.8),
    )

    session = AgentSession(
        agent=agent,
        pipeline=pipeline,
        conversation_flow=conversation_flow,
    )

    try:
        await context.connect()
        await session.start()
        # Keep the session running until manually terminated
        await asyncio.Event().wait()
    finally:
        # Clean up resources when done
        await session.close()
        await context.shutdown()
```
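The `try`/`finally` structure in `start_session` is what guarantees cleanup. Because it waits on a fresh `asyncio.Event()` that nothing ever sets, the session only ends when the task is cancelled or an exception interrupts the wait. The self-contained sketch below reproduces that pattern with a hypothetical `FakeSession` that just records its lifecycle calls, and shows that the `finally` block still runs when the task is cancelled.

```python
import asyncio
from typing import List

class FakeSession:
    """Hypothetical stand-in for AgentSession that records lifecycle calls."""
    def __init__(self, log: List[str]) -> None:
        self.log = log

    async def start(self) -> None:
        self.log.append("start")

    async def close(self) -> None:
        self.log.append("close")

async def run_session(session: FakeSession, stop: asyncio.Event) -> None:
    try:
        await session.start()
        await stop.wait()      # blocks until stop.set() or cancellation
    finally:
        await session.close()  # runs on normal exit and on cancellation

async def demo() -> List[str]:
    log: List[str] = []
    task = asyncio.create_task(run_session(FakeSession(log), asyncio.Event()))
    await asyncio.sleep(0.01)  # let the session start and begin waiting
    task.cancel()              # simulate the agent being stopped
    try:
        await task
    except asyncio.CancelledError:
        pass
    return log

if __name__ == "__main__":
    print(asyncio.run(demo()))
```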

The `make_context` function configures the room options for the session:

```python
def make_context() -> JobContext:
    room_options = RoomOptions(
        # room_id="YOUR_MEETING_ID",  # Set to join a pre-created room; omit to auto-create
        name="VideoSDK Cascaded Agent",
        playground=True,
    )

    return JobContext(room_options=room_options)
```

Finally, the script's entry point is defined:

```python
if __name__ == "__main__":
    job = WorkerJob(entrypoint=start_session, jobctx=make_context)
    job.start()
```

Running and Testing the Agent

Step 5.1: Running the Python Script

To run your agent, save the complete code as `main.py` and execute the following command:

```bash
python main.py
```

Step 5.2: Interacting with the Agent in the Playground

Once the script is running, you'll find a playground link in the console. Open it in your browser to interact with your agent.

Advanced Features and Customizations

Extending Functionality with Custom Tools

You can extend your agent's functionality by integrating custom tools with the `function_tool` feature, which lets you add new capabilities tailored to your specific needs.

Exploring Other Plugins

The VideoSDK framework supports many STT, LLM, and TTS plugins beyond the ones used here. Review the documentation for the Deepgram STT, OpenAI LLM, and ElevenLabs TTS plugins to tune the current pipeline, and explore alternative providers to optimize your agent's performance.

Troubleshooting Common Issues

API Key and Authentication Errors

Ensure your API keys are correct and properly configured in the `.env` file.

Audio Input/Output Problems

Check your microphone and speaker settings to ensure they are correctly configured, and make sure your browser has permission to use the microphone when you test in the playground.

Dependency and Version Conflicts

Ensure all dependencies are up to date and compatible with your Python version.
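When debugging a version conflict, it helps to print exactly what is installed in the active virtual environment. This standard-library sketch uses `importlib.metadata` to look up distribution versions. The package names are the ones installed in Step 2; extend the list if you installed additional packages.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

PACKAGES = ("videosdk", "python-dotenv")  # from Step 2; extend as needed

def installed_versions(names: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    result: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result

if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'not installed'}")
```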

Conclusion

Summary of What You've Built

In this tutorial, you've built a fully functional AI Voice Agent using the VideoSDK framework. You've learned how to set up the environment, create a custom agent, and test it in a playground.

Next Steps and Further Learning

To further enhance your skills, explore additional features of the VideoSDK framework, experiment with different plugins, and consider integrating your agent into real-world applications. You can also dig deeper into AI voice agent sessions and the turn detector to refine how your agent handles conversations.


FAQ