Introduction to the OpenAI Stream API

The OpenAI API empowers developers to tap into state-of-the-art language models for a variety of applications, from chatbots to content generation. As these models become more prevalent in real-time and interactive systems, the need for faster, more responsive outputs has grown. The OpenAI Stream API (in practice, the `stream` option on endpoints such as Chat Completions rather than a separate service) addresses this demand by enabling streaming responses, allowing data to flow token by token instead of arriving only after the entire completion is finished. This approach is crucial for developers and enterprises building real-time apps, conversational agents, or any system where latency and user experience matter. With the OpenAI Stream API, applications can handle user queries more fluidly and deliver a seamless, interactive experience.

How the OpenAI Stream API Works

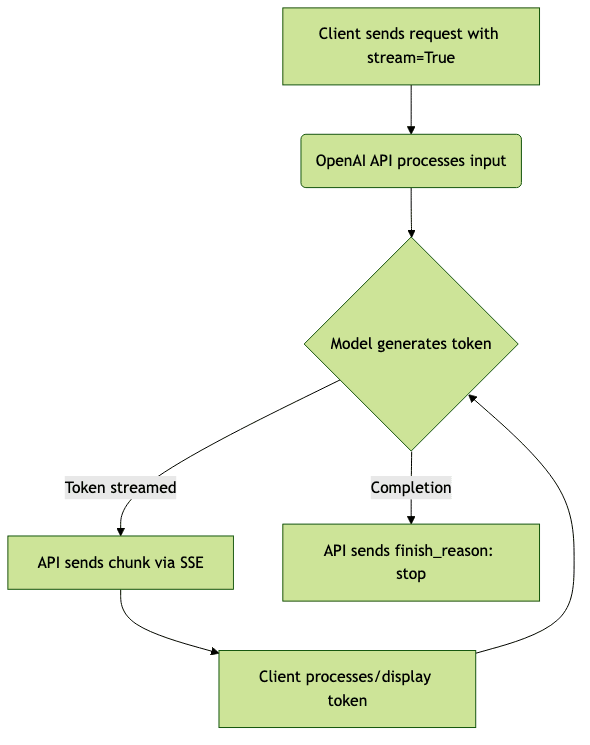

Traditionally, APIs like the OpenAI completions API return a full response only after processing the entire request. While this is straightforward, it can introduce latency—especially with large responses or complex prompts. The OpenAI stream API changes this paradigm by introducing streaming: as soon as the model generates tokens, they're sent to the client incrementally, dramatically reducing perceived wait times.

When you set the `stream=True` parameter, the OpenAI API streams response chunks over an HTTP connection via server-sent events (SSE). Each chunk typically carries a small part of the model's output (usually a single token, i.e. a word or word fragment) in `choices[0].delta`, along with metadata such as `finish_reason`; for Chat Completions, the stream ends with a literal `data: [DONE]` message. This allows applications to display responses as they're generated, which is vital for chatbots, real-time editors, live data processing, and more.

Use Cases:

- Chatbots: Users see AI-generated responses in real time, improving engagement.

- Real-time Apps: Applications like collaborative editors or customer support tools benefit from lower response times.

- Data Processing: Streamed outputs enable on-the-fly transformations or moderation.

Mermaid diagram: Data Flow in OpenAI Stream API
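To make the wire format concrete, here is a minimal Python sketch of what the SDKs do for you: read the SSE `data:` lines of a Chat Completions stream, stop at the `[DONE]` sentinel, and pull the text out of each chunk's `delta`. The sample lines are abridged and illustrative (real chunks also carry fields such as `id`, `model`, and `created`), and the function name `iter_sse_content` is our own, not part of any SDK.

```python
import json
from typing import Iterable, Iterator


def iter_sse_content(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text deltas found in raw SSE lines from a streamed Chat Completions call."""
    for raw in lines:
        line = raw.strip()
        # Blank lines separate events; lines starting with ":" are SSE comments (keep-alives)
        if not line.startswith("data:"):
            continue
        payload = line[len("data:"):].strip()
        # The stream terminates with a literal [DONE] sentinel instead of JSON
        if payload == "[DONE]":
            return
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        # This sketch only follows the first choice (the default n=1 case)
        if choices:
            content = (choices[0].get("delta") or {}).get("content")
            if content:
                yield content


# Abridged, illustrative stream: a role-only first chunk, two text chunks, a final chunk.
sample = [
    'data: {"choices":[{"index":0,"delta":{"role":"assistant","content":""},"finish_reason":null}]}',
    "",
    'data: {"choices":[{"index":0,"delta":{"content":"Hello"},"finish_reason":null}]}',
    "",
    'data: {"choices":[{"index":0,"delta":{"content":" world"},"finish_reason":null}]}',
    "",
    'data: {"choices":[{"index":0,"delta":{},"finish_reason":"stop"}]}',
    "",
    "data: [DONE]",
]

print("".join(iter_sse_content(sample)))  # prints: Hello world
```

In a hand-rolled client you could, for example, decode the lines from `requests`' `iter_lines()` on a streamed POST as UTF-8 and pass them in; the official SDKs already do this parsing, so you rarely need to.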

Setting Up and Authenticating with OpenAI Stream API

Before streaming with the OpenAI API, ensure you have:

- An OpenAI API key

- Appropriate SDKs installed (Python, Node.js, etc.)

- Environment variables set securely to protect credentials

Installing the SDKs:

- Python:

```bash
pip install openai
```

- Node.js:

```bash
npm install openai
```

Authentication Example:

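Python:

```python
import os
from openai import OpenAI

# openai>=1.0: the client reads OPENAI_API_KEY from the environment by default;
# passing it explicitly just makes the dependency visible.
client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
```

Node.js:

```javascript
// openai v4+ for Node.js (the Configuration/OpenAIApi classes were removed in v4)
const OpenAI = require("openai");

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
});
```

Reading the key from an environment variable keeps it out of source control, and every request made through this client, streamed or not, is authenticated with it.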

Making Streamed Requests with OpenAI API

Basic Streaming Request Structure

A streamed request to the OpenAI stream API typically requires:

- `model`: The specific model to use (e.g., `gpt-4`)

- `messages`: Conversation history (for chat completions)

- `stream`: Set to `True` to enable streaming

- Optional: `tools` (or the legacy `functions` parameter) for function-calling workflows

Python Example: Streaming Chat Completion

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": "Tell me a joke."}],
    stream=True,
)

for chunk in response:
    # content is None on chunks that carry only the role or the finish_reason
    print(chunk.choices[0].delta.content or "", end="", flush=True)
```

Handling Streaming Responses

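Streaming responses from the OpenAI API arrive as a sequence of JSON chunks (`chat.completion.chunk` objects). The fields you work with most are:

- `choices`: Array of completion choices; each choice holds the two fields below

- `delta`: The new piece of content (or a fragment of a function/tool call) since the previous chunk

- `finish_reason`: `null` until the final chunk for that choice, then the reason generation stopped (such as `stop`, `length`, `tool_calls`, or `content_filter`)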

To process a streamed response, loop through each chunk, extract the new content, and render or process it incrementally.

Processing Streamed Chunks in Python

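```python
# Continues the previous example: `response` is the stream created with stream=True
full_reply = ""
for chunk in response:
    choice = chunk.choices[0]
    # delta.content is None on chunks that carry only the role or the finish_reason
    if choice.delta.content:
        full_reply += choice.delta.content
    # finish_reason stays None until the last chunk for this choice
    if choice.finish_reason:
        break
print(full_reply)
```

Mermaid Diagram: Streaming Response Lifecycle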
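The lifecycle of a stream (content chunks, then a chunk with a `finish_reason`) also gives you a simple way to detect a stream that was cut off. The sketch below is a hypothetical helper, `collect_reply`, that assumes chunk objects shaped like the v1 Python SDK's (`chunk.choices[0].delta.content`, `chunk.choices[0].finish_reason`). It raises if the stream ends before any `finish_reason` arrives and returns the reason so callers can react to `length` truncation. Stand-in chunks built with `SimpleNamespace` let it run without an API key.

```python
from types import SimpleNamespace
from typing import Any, Iterable, Tuple


class IncompleteStreamError(RuntimeError):
    """Raised when a stream ends before any choice reports a finish_reason."""


def collect_reply(chunks: Iterable[Any]) -> Tuple[str, str]:
    """Accumulate streamed text; return (text, finish_reason) or raise if the stream was cut off."""
    parts = []
    finish_reason = None
    for chunk in chunks:
        if not chunk.choices:  # e.g., a usage-only chunk at the very end
            continue
        choice = chunk.choices[0]
        if choice.delta.content:
            parts.append(choice.delta.content)
        if choice.finish_reason:
            finish_reason = choice.finish_reason
    if finish_reason is None:
        raise IncompleteStreamError(
            f"stream ended after {len(parts)} text chunks without a finish_reason"
        )
    return "".join(parts), finish_reason


def fake_chunk(content=None, finish_reason=None):
    """Stand-in for an SDK chunk object, for offline testing only."""
    delta = SimpleNamespace(content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta, finish_reason=finish_reason)])


text, reason = collect_reply([fake_chunk("Hi"), fake_chunk(" there"), fake_chunk(finish_reason="length")])
print(text, reason)  # prints: Hi there length
if reason == "length":
    print("warning: reply hit the token limit and is truncated")
```

In real code you would pass the SDK stream instead of the fake chunks. A hard network failure usually surfaces as an exception from the SDK itself, so this check complements, rather than replaces, normal exception handling.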

Advanced Streaming Techniques and Patterns

Streaming to the Frontend (React/JS Example)

Often, you want to display streamed responses to users as they arrive. This requires pushing data from your backend (where the OpenAI stream API response is handled) to the frontend in real time—commonly done with WebSockets or server-sent events.

Node.js/Express Backend Streaming Example:

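```javascript
// Assumes the openai v4+ Node.js SDK and Express 4
const express = require("express");
const OpenAI = require("openai");

const app = express();
const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

app.get("/stream", async (req, res) => {
  res.set({
    "Content-Type": "text/event-stream",
    "Cache-Control": "no-cache",
    Connection: "keep-alive",
  });
  res.flushHeaders(); // send headers now so the browser opens the event stream

  try {
    const stream = await openai.chat.completions.create({
      model: "gpt-4",
      messages: [{ role: "user", content: "Stream a fun fact." }],
      stream: true,
    });

    for await (const chunk of stream) {
      const text = chunk.choices[0]?.delta?.content;
      // JSON-encode each piece so newlines inside the text cannot break SSE framing
      if (text) res.write(`data: ${JSON.stringify(text)}\n\n`);
    }
    res.write("data: [DONE]\n\n"); // tell the browser the reply is complete
  } catch (err) {
    console.error("stream failed:", err);
  } finally {
    res.end();
  }
});

app.listen(3000);
```

Frontend (React) Example:

```jsx
import { useEffect, useState } from "react";

export function StreamedReply() {
  const [reply, setReply] = useState("");
  useEffect(() => {
    const eventSource = new EventSource("/stream");
    eventSource.onmessage = (e) => {
      // Close on the sentinel so EventSource does not auto-reconnect and re-run the request
      if (e.data === "[DONE]") return eventSource.close();
      setReply((prev) => prev + JSON.parse(e.data));
    };
    eventSource.onerror = () => eventSource.close();
    return () => eventSource.close();
  }, []);
  return <p>{reply}</p>;
}
```

This approach ensures users see content as soon as it's generated by the OpenAI stream API. Closing the `EventSource` on the `[DONE]` message matters: otherwise the browser treats the server's `res.end()` as a dropped connection and reconnects automatically, starting a new completion.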

Error Handling and Edge Cases

While streaming improves UX, it introduces new challenges:

- Incomplete Streams: Network interruptions can break streams; check for missing finish_reason.

- `finish_reason: stop`: Indicates a natural end; handle other reasons gracefully (for example, `length` means the reply was cut off at the token limit).

- Function/Tool Calls: When using OpenAI function calls, streamed chunks may contain partial function arguments; collect and assemble them correctly before execution.

- Moderation: Streaming exposes partial completions; ensure robust moderation at both the chunk and full-response levels to avoid unsafe content leaks.
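For the function/tool-call case, a minimal sketch of the assembly step might look like this. It assumes the v1 Python SDK chunk shape, where `delta.tool_calls` is a list of fragments that share an `index`, the first fragment usually carries the call `id` and function `name`, and later fragments append pieces of the JSON `arguments` string. The tool name `get_weather` and id `call_1` are made up for the demo.

```python
import json
from types import SimpleNamespace
from typing import Any, Dict, Iterable, List


def assemble_tool_calls(chunks: Iterable[Any]) -> List[Dict[str, Any]]:
    """Merge streamed tool-call fragments into complete calls with parsed arguments."""
    calls: Dict[int, Dict[str, str]] = {}
    for chunk in chunks:
        if not chunk.choices:
            continue
        for tc in chunk.choices[0].delta.tool_calls or []:
            # Fragments of the same call share an index; id and name usually arrive once
            call = calls.setdefault(tc.index, {"id": "", "name": "", "arguments": ""})
            if tc.id:
                call["id"] = tc.id
            if tc.function and tc.function.name:
                call["name"] = tc.function.name
            if tc.function and tc.function.arguments:
                call["arguments"] += tc.function.arguments
    # Parse only after the stream is complete; partial JSON would fail to load
    return [
        {"id": c["id"], "name": c["name"], "arguments": json.loads(c["arguments"] or "{}")}
        for _, c in sorted(calls.items())
    ]


def fake_chunk(tool_calls=None, finish_reason=None):
    """Stand-in for an SDK chunk object, for offline testing only."""
    delta = SimpleNamespace(content=None, tool_calls=tool_calls)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta, finish_reason=finish_reason)])


def frag(index, call_id=None, name=None, arguments=None):
    return SimpleNamespace(index=index, id=call_id, function=SimpleNamespace(name=name, arguments=arguments))


fake = [
    fake_chunk([frag(0, "call_1", "get_weather", "")]),
    fake_chunk([frag(0, arguments='{"city": ')]),
    fake_chunk([frag(0, arguments='"Paris"}')]),
    fake_chunk(finish_reason="tool_calls"),
]
print(assemble_tool_calls(fake))
# [{'id': 'call_1', 'name': 'get_weather', 'arguments': {'city': 'Paris'}}]
```

Keying on `index` rather than on arrival order is what keeps parallel tool calls from being mixed together when their fragments interleave.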

Performance, Reliability, and Cost

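The OpenAI stream API reduces latency where users notice it most: content is delivered as soon as it is available rather than after the whole completion is finished. This is especially important in real-time apps, where even small delays can degrade user experience.

- Latency: Streaming cuts perceived latency (time to first token); users watch the response unfold instead of waiting for the full reply, even though total generation time is roughly the same.

- Cost: Streaming does not change per-token pricing, so token consumption and cost match a non-streamed request for the same output. Usage is not attached to every chunk; for Chat Completions, setting `stream_options={"include_usage": True}` adds one final chunk, with an empty `choices` list, that reports the token totals for the request.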

- Best Practices:

  - Use streaming for chatbots and interactive UIs

  - Monitor for dropped or incomplete streams in production

  - Log token usage per session for cost tracking
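As one way to act on these practices, the sketch below consumes a stream while recording time to first token, total time, and token usage. It assumes the v1 Python SDK chunk shape and that the request was made with `stream_options={"include_usage": True}`, which makes the API append a final chunk with an empty `choices` list and a `usage` object. The helper name `consume_stream` and its return fields are our own; a fake clock and stand-in chunks keep the demo deterministic and offline.

```python
import time
from types import SimpleNamespace
from typing import Any, Callable, Dict, Iterable


def consume_stream(chunks: Iterable[Any], clock: Callable[[], float] = time.monotonic) -> Dict[str, Any]:
    """Read a stream to the end, recording latency and token usage for logging."""
    start = clock()
    time_to_first_token = None
    parts = []
    usage = None
    for chunk in chunks:
        # With include_usage, only the final chunk carries usage; earlier chunks have usage=None
        if getattr(chunk, "usage", None) is not None:
            usage = chunk.usage
        if chunk.choices and chunk.choices[0].delta.content:
            if time_to_first_token is None:
                time_to_first_token = clock() - start
            parts.append(chunk.choices[0].delta.content)
    return {
        "text": "".join(parts),
        "time_to_first_token_s": time_to_first_token,
        "total_time_s": clock() - start,
        # usage stays None if the stream was cut off before the final chunk
        "total_tokens": usage.total_tokens if usage is not None else None,
    }


# Real call (assumes openai>=1.x, an API key, and network access):
# response = client.chat.completions.create(
#     model="gpt-4", messages=[...], stream=True,
#     stream_options={"include_usage": True},
# )
# stats = consume_stream(response)

# Offline demo with stand-in chunks and a fake clock (start, first token, end).
fake_clock = iter([0.0, 0.4, 1.0]).__next__
fake = [
    SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content="Hello"))], usage=None),
    SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content="!"))], usage=None),
    SimpleNamespace(choices=[], usage=SimpleNamespace(prompt_tokens=9, completion_tokens=2, total_tokens=11)),
]
print(consume_stream(fake, clock=fake_clock))
# {'text': 'Hello!', 'time_to_first_token_s': 0.4, 'total_time_s': 1.0, 'total_tokens': 11}
```

A missing `total_tokens` in your logs is itself a useful signal: it usually means the stream never reached its final chunk.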

Real-World Examples and Use Cases

Many enterprises and startups leverage the OpenAI stream API for production workloads:

- MagicSchool AI: Uses streaming for instant educational content delivery

- Zencoder: Integrates streaming completions for faster decision support

- MCP Servers: Combine the OpenAI API with Model Context Protocol (MCP) servers for orchestrating function calls and real-time tool streaming

- OpenAI Responses API: Newer features allow even richer streaming, including typed streaming events (such as `response.output_text.delta`), streamed function-call arguments, and background (asynchronous) responses for more complex workflows

These examples demonstrate how the OpenAI stream API powers real-time, user-centric applications at scale.

Security, Privacy, and Compliance

When using the OpenAI stream API in production, security and privacy are paramount:

- Data Privacy: Never log or expose sensitive prompts or completions; if logs must retain them, use encrypted storage

- Moderation: Always apply content moderation, even for partial (streamed) responses, to prevent unsafe content leaks

- Compliance: Ensure your use of the OpenAI stream API adheres to regulatory requirements (e.g., GDPR); avoid sending PII in prompts or completions
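To moderate partial output without checking every single token, one option is to hold streamed text back until it reaches a sentence boundary, check everything produced so far, and only then release the new segment to the user. The sketch below assumes a hypothetical `is_unsafe` callback; in production this could wrap a call to OpenAI's Moderations endpoint (`client.moderations.create` in the v1 Python SDK) or your own classifier. Splitting on `.`, `!`, and `?` is a rough heuristic (it will also split decimals such as `3.14`).

```python
from typing import Callable, Iterable, Iterator


class ModerationBlocked(Exception):
    """Raised when the checker flags the text produced so far."""


def moderated_stream(deltas: Iterable[str], is_unsafe: Callable[[str], bool]) -> Iterator[str]:
    """Release streamed text one sentence-ending segment at a time, after checking it."""
    released = ""  # text already shown to the user
    pending = ""   # text received but not yet checked
    for delta in deltas:
        pending += delta
        # Find the last sentence-ending character; hold everything if there is none yet
        cut = max(pending.rfind(mark) for mark in ".!?")
        if cut == -1:
            continue
        segment, pending = pending[: cut + 1], pending[cut + 1:]
        # Check the full text so far, so context spanning several segments is considered
        if is_unsafe(released + segment):
            raise ModerationBlocked("stream stopped by moderation check")
        released += segment
        yield segment
    # Flush any trailing text that never reached a sentence boundary
    if pending:
        if is_unsafe(released + pending):
            raise ModerationBlocked("stream stopped by moderation check")
        yield pending


def toy_checker(text: str) -> bool:
    """Hypothetical stand-in for a real moderation call."""
    return "forbidden" in text.lower()


deltas = ["Hello wor", "ld. How a", "re you?", " Bye"]
print(list(moderated_stream(deltas, toy_checker)))
# ['Hello world.', ' How are you?', ' Bye']
```

The trade-off is a small added delay (up to one sentence) in exchange for never showing the user text that has not been checked.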

Conclusion

The OpenAI stream API unlocks high-performance, real-time AI applications for developers and enterprises. By streaming responses token by token, it enhances interactivity, reduces latency, and enables entirely new user experiences. With robust error handling, security, and best practices, you can confidently bring the power of streaming AI to your next project. Start experimenting today and transform your applications with the OpenAI stream API.


FAQ