Introduction to "Rust WebRTC.rs" Technology

What is Rust WebRTC.rs?

Rust WebRTC.rs is an implementation of WebRTC (Web Real-Time Communication) in the Rust programming language. WebRTC is a technology that enables peer-to-peer communication between browsers and mobile applications. It lets audio, video, and data streams flow directly between peers instead of being relayed through a media server, which reduces latency. A signaling channel is still needed to set up each connection, and a TURN relay may be required when no direct network path can be established. By leveraging Rust, a systems programming language known for its performance and safety, WebRTC.rs offers a powerful foundation for building high-performance real-time communication applications.

Importance and Advantages of Using Rust for WebRTC

Rust has gained popularity in recent years due to its focus on safety, concurrency, and performance. These features make Rust an excellent choice for implementing WebRTC, where low latency and high reliability are crucial. Rust's ownership model and strict compile-time checks help prevent common programming errors, such as null pointer dereferences and data races, which matter particularly in long-running real-time communication applications. Additionally, Rust's performance is comparable to that of C and C++, so WebRTC.rs can handle the demands of real-time audio and video processing efficiently.

Overview of the webrtc-rs Library

The webrtc-rs library is a pure-Rust implementation of the WebRTC stack. It provides the components needed to establish peer-to-peer connections, including ICE, DTLS, SRTP, SCTP-based data channels, SDP offer/answer handling, and media track handling. Signaling itself is not part of the WebRTC standard, so the application is responsible for transporting offers, answers, and ICE candidates between peers. The library is modular: its protocol layers are published as separate crates, and developers can customize and extend its functionality to meet their specific needs. With webrtc-rs, developers can build robust real-time communication applications in Rust, leveraging the language's safety and performance features.

By using Rust WebRTC.rs, developers can create secure, efficient, and scalable real-time communication applications. Whether you are building a video conferencing tool, a real-time collaboration platform, or any application that requires peer-to-peer communication, Rust WebRTC.rs provides the tools and capabilities needed to bring your project to life.

Getting Started with the Code

Create a New Rust WebRTC.rs App

Before we dive into the code, ensure you have Rust installed on your system. If not, you can install it from the official Rust website. Once Rust is installed, you can create a new project using Cargo, Rust's package manager:

```sh
cargo new webrtc_app
cd webrtc_app
```

This command creates a new directory named `webrtc_app` with the necessary files for a basic Rust project.

Install

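Next, add the webrtc-rs library (published on crates.io as `webrtc`) as a dependency in your Cargo.toml file. The examples also use the `#[tokio::main]` attribute, so they need the tokio async runtime as well. Open Cargo.toml and add the following lines under [dependencies]:

```toml
webrtc = "0.6" # the examples in this article are written against the 0.6 API
tokio = { version = "1", features = ["full"] }
```

Newer webrtc releases may have changed some signatures, such as whether the `on_*` handler methods must be awaited. Check the documentation for the version you pin before upgrading. After adding the dependencies, run `cargo build` to download and compile them.

Structure of the Project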

A typical Rust project structure for a WebRTC application might look like this:

```text
webrtc_app/
├── Cargo.toml
└── src
    └── main.rs
```

- Cargo.toml: Contains metadata about your project and its dependencies.

- src/main.rs: The main entry point of your application.

App Architecture

- Signaling Server: Relays session control messages (SDP offers and answers, and ICE candidates) between peers. It can be implemented with any server-side technology. This tutorial sketches only a minimal WebSocket server and focuses mainly on the client-side code.

- Peer Connection: Establishes a connection between peers using the WebRTC API.

- Media Streams: Handles the transmission of audio and video data.

Sample Code Structure

In this section, we'll outline the basic setup of a WebRTC application using Rust.

[a] Set Up the Main Function

Open `src/main.rs` and set up the main function:

```rust
use webrtc::api::media_engine::MediaEngine;
use webrtc::api::APIBuilder;
use webrtc::peer_connection::configuration::RTCConfiguration;

#[tokio::main]
async fn main() {
    // Create a MediaEngine and register the default audio/video codecs
    let mut media_engine = MediaEngine::default();
    media_engine.register_default_codecs().unwrap();

    // Build the API object, which is used to create peer connections
    let api = APIBuilder::new()
        .with_media_engine(media_engine)
        .build();

    // Use the default RTCConfiguration (no ICE servers configured)
    let config = RTCConfiguration::default();

    // Create the RTCPeerConnection
    let peer_connection = api.new_peer_connection(config).await.unwrap();

    // Additional setup code here...
}
```

This code initializes the `MediaEngine`, the API object, and an `RTCPeerConnection`, which are the essential components of a WebRTC application. webrtc-rs has no separate `PeerConnectionFactory` type; peer connections are created directly from the API object.

[b] Implement Signaling

While the full implementation of a signaling server is beyond the scope of this tutorial, you can use existing crates such as `tokio` and `warp` to set up a WebSocket server for signaling:

```rust
use warp::Filter;

#[tokio::main]
async fn main() {
    // GET /signaling upgrades the HTTP connection to a WebSocket
    let routes = warp::path("signaling")
        .and(warp::ws())
        .map(|ws: warp::ws::Ws| {
            ws.on_upgrade(|_websocket| async move {
                // Handle WebSocket connection
            })
        });

    // Listen on 127.0.0.1:3030
    warp::serve(routes).run(([127, 0, 0, 1], 3030)).await;
}
```

This example sets up a basic WebSocket server using `warp`.

[c] Handle Media Streams

To handle media streams, you need to configure the peer connection to receive and send media. This involves setting up event handlers for ICE candidates and media tracks.

```rust
// Log each locally gathered ICE candidate
peer_connection.on_ice_candidate(Box::new(|candidate| {
    if let Some(candidate) = candidate {
        println!("ICE candidate: {:?}", candidate);
    }
    Box::pin(async {})
})).await;

// Log each incoming remote media track
peer_connection.on_track(Box::new(|track, _| {
    println!("Received track: {:?}", track);
    Box::pin(async {})
})).await;
```

This code sets up handlers for ICE candidates and incoming media tracks.
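Each ICE candidate reported by these handlers carries a priority, which ICE uses to rank candidate pairs. The standalone sketch below computes that priority with the formula recommended in RFC 8445 (Section 5.1.2.1) and that RFC's recommended type preferences. The names are hypothetical and not part of the webrtc-rs API, and real implementations may choose different local preferences.

```rust
/// Candidate types defined by ICE (RFC 8445).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum CandidateType {
    Host,
    PeerReflexive,
    ServerReflexive,
    Relay,
}

impl CandidateType {
    /// Recommended type preferences (RFC 8445, Section 5.1.2.2).
    pub fn type_preference(self) -> u32 {
        match self {
            CandidateType::Host => 126,
            CandidateType::PeerReflexive => 110,
            CandidateType::ServerReflexive => 100,
            CandidateType::Relay => 0,
        }
    }
}

/// priority = 2^24 * type_preference + 2^8 * local_preference + (256 - component_id)
/// component_id is 1 for RTP and 2 for RTCP (when RTCP is not multiplexed).
pub fn candidate_priority(kind: CandidateType, local_preference: u16, component_id: u8) -> u32 {
    (kind.type_preference() << 24)
        + ((local_preference as u32) << 8)
        + (256 - component_id as u32)
}
```

With a local preference of 65535 and component 1, a host candidate gets 2130706431, a server-reflexive candidate 1694498815, and a relayed candidate 16777215. Host and server-reflexive candidates therefore rank above relayed ones.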

By following these steps, you can set up a basic Rust WebRTC.rs application. In the next sections, we will dive deeper into each component and provide more detailed implementation steps.

Step 1: Get Started with Main.rs

In this section, we will delve into the specifics of creating and configuring the `main.rs` file, which serves as the entry point for our Rust WebRTC.rs application. This file will initialize the WebRTC components and set up the basic infrastructure needed for real-time communication.

Setting Up the Main Function

First, let's start by setting up the main function in `src/main.rs`. This function will initialize the necessary components and prepare our application to handle WebRTC connections.

```rust
use std::sync::Arc;

use webrtc::api::media_engine::MediaEngine;
use webrtc::api::APIBuilder;
use webrtc::ice_transport::ice_candidate::RTCIceCandidate;
use webrtc::peer_connection::configuration::RTCConfiguration;
use webrtc::peer_connection::RTCPeerConnection;

#[tokio::main]
async fn main() {
    // Create a MediaEngine to manage media codecs
    let mut media_engine = MediaEngine::default();
    media_engine.register_default_codecs().unwrap();

    // Create the API object
    let api = APIBuilder::new()
        .with_media_engine(media_engine)
        .build();

    // Create a new RTCConfiguration
    let config = RTCConfiguration::default();

    // Create the peer connection; Arc lets the handlers below share it
    let peer_connection = Arc::new(api.new_peer_connection(config).await.unwrap());

    // Set up signaling (e.g., WebSocket) here...

    // Set up ICE candidate handling
    setup_ice_candidates(peer_connection.clone()).await;

    // Set up track handling
    setup_tracks(peer_connection.clone()).await;

    println!("WebRTC application has started successfully.");

    // Keep the process (and the peer connection) alive until Ctrl+C,
    // then close the connection cleanly
    tokio::signal::ctrl_c().await.unwrap();
    peer_connection.close().await.unwrap();
}

async fn setup_ice_candidates(peer_connection: Arc<RTCPeerConnection>) {
    peer_connection.on_ice_candidate(Box::new(|candidate: Option<RTCIceCandidate>| {
        if let Some(candidate) = candidate {
            println!("New ICE candidate: {:?}", candidate);
            // Send the candidate to the remote peer through the signaling server
        }
        Box::pin(async {})
    })).await;
}

async fn setup_tracks(peer_connection: Arc<RTCPeerConnection>) {
    peer_connection.on_track(Box::new(|track, _| {
        println!("New track received: {:?}", track);
        Box::pin(async {})
    })).await;
}
```

Importing Necessary Crates and Modules

We start by importing the necessary crates and modules. The webrtc-rs imports cover the media engine, the API builder, the peer connection configuration, the peer connection itself, and ICE candidates. `Arc` from the standard library lets several tasks share the peer connection.

```rust
use std::sync::Arc;

use webrtc::api::media_engine::MediaEngine;
use webrtc::api::APIBuilder;
use webrtc::ice_transport::ice_candidate::RTCIceCandidate;
use webrtc::peer_connection::configuration::RTCConfiguration;
use webrtc::peer_connection::RTCPeerConnection;
```

Basic Setup for a WebRTC Server

[a] Create MediaEngine

The `MediaEngine` is responsible for managing media codecs. We register the default codecs so that our application can handle common audio and video formats.

```rust
let mut media_engine = MediaEngine::default();
media_engine.register_default_codecs().unwrap();
```

[b] Create API Object

The API object is created using the `APIBuilder` and configured with the `MediaEngine`. This object will be used to create peer connections.

```rust
let api = APIBuilder::new()
    .with_media_engine(media_engine)
    .build();
```

[c] Configure RTC

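The `RTCConfiguration` object is used to configure WebRTC settings, such as ICE servers. For simplicity, we use the default configuration here. It lists no ICE servers, so only local (host) candidates are gathered. To connect peers behind different NATs, you would add at least a STUN server.

```rust
let config = RTCConfiguration::default();
```

[d] Create Peer Connection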

The API object's `new_peer_connection` method creates a new peer connection from this configuration. The peer connection handles the actual WebRTC communication. We wrap it in an `Arc` so it can be shared with the event handlers.

```rust
let peer_connection = Arc::new(api.new_peer_connection(config).await.unwrap());
```

Handling ICE Candidates

ICE (Interactive Connectivity Establishment) candidates are essential for establishing a connection between peers. We set up a handler to process new ICE candidates and send them to the remote peer through our signaling server.

```rust
async fn setup_ice_candidates(peer_connection: Arc<RTCPeerConnection>) {
    peer_connection.on_ice_candidate(Box::new(|candidate: Option<RTCIceCandidate>| {
        if let Some(candidate) = candidate {
            println!("New ICE candidate: {:?}", candidate);
            // Send the candidate to the remote peer through the signaling server
        }
        Box::pin(async {})
    })).await;
}
```

Handling Media Tracks

To handle incoming media tracks, we set up a handler that processes new tracks as they are received. This handler will print the details of the new track and can be extended to display the media in a UI.

```rust
async fn setup_tracks(peer_connection: Arc<RTCPeerConnection>) {
    peer_connection.on_track(Box::new(|track, _| {
        println!("New track received: {:?}", track);
        Box::pin(async {})
    })).await;
}
```

By following these steps, you have set up the basic infrastructure needed for a Rust WebRTC.rs application. The next sections will guide you through creating the user interface and handling user interactions to build a fully functional WebRTC application.

Step 2: Wireframe All the Components

In this section, we will create a wireframe for our WebRTC application. This wireframe serves as a blueprint for the overall structure and flow of the application, helping us define the core components and their interactions.

Defining the Core Components

To build a functional WebRTC application, we need to identify and define the core components. These components include the signaling server, the peer connection setup, the user interface, and media stream handling.

Signaling Server

- Manages the exchange of signaling messages between peers to establish a connection.

- Handles messages such as offer, answer, and ICE candidates.

Peer Connection Setup

- Establishes the WebRTC peer-to-peer connection.

- Configures media streams and data channels.

User Interface (UI)

- Provides a way for users to join a session and interact with the application.

- Displays local and remote video streams.

Media Stream Handling

- Captures and transmits local media streams.

- Receives and displays remote media streams.

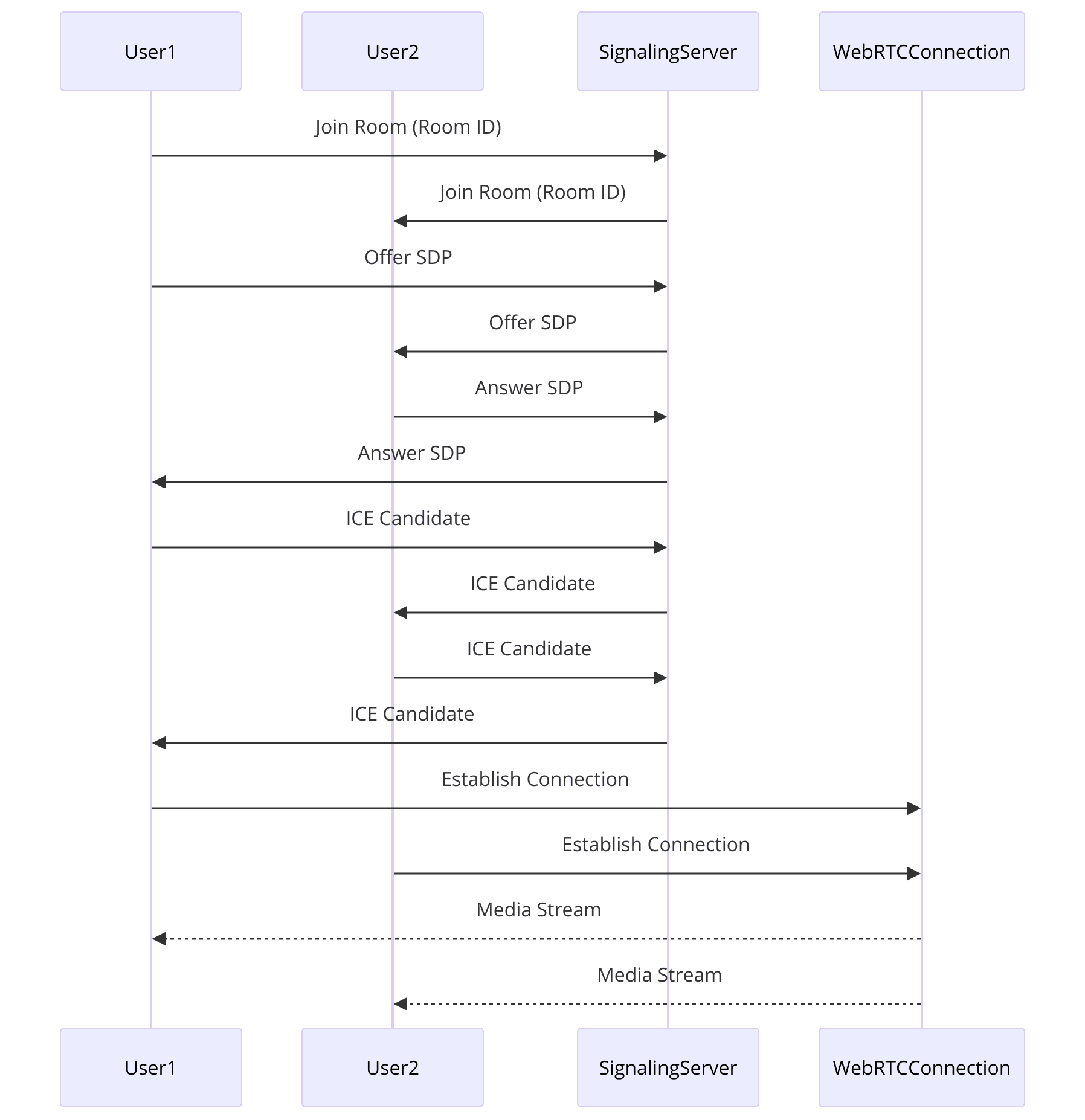

Setting Up the Communication Flow

The communication flow of a WebRTC application involves several key steps:

Signaling Process

- User A joins a room and sends an SDP offer to the signaling server.

- The signaling server relays the offer to User B.

- User B responds with an SDP answer, and the signaling server relays it back to User A.

- Both users exchange ICE candidates through the signaling server.

Peer Connection

- Once the signaling process is complete, a peer-to-peer connection is established.

- Media streams are transmitted directly between peers.
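The offer/answer exchange above can be modeled as a small state machine. The sketch below is a simplified subset of the signaling states defined for RTCPeerConnection (stable, have-local-offer, have-remote-offer). It omits provisional answers, rollback, and renegotiation. The type and function names are hypothetical and are not part of webrtc-rs or the browser API.

```rust
/// Simplified subset of the RTCPeerConnection signaling states.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum SignalingState {
    Stable,
    HaveLocalOffer,
    HaveRemoteOffer,
}

/// Description changes applied during the offer/answer exchange.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum SignalingEvent {
    SetLocalOffer,
    SetRemoteOffer,
    SetLocalAnswer,
    SetRemoteAnswer,
}

/// Returns the next state, or an error for an out-of-order event.
pub fn next_state(state: SignalingState, event: SignalingEvent) -> Result<SignalingState, String> {
    use SignalingEvent::*;
    use SignalingState::*;
    match (state, event) {
        (Stable, SetLocalOffer) => Ok(HaveLocalOffer),    // caller created an offer
        (Stable, SetRemoteOffer) => Ok(HaveRemoteOffer),  // callee received an offer
        (HaveLocalOffer, SetRemoteAnswer) => Ok(Stable),  // caller received the answer
        (HaveRemoteOffer, SetLocalAnswer) => Ok(Stable),  // callee created the answer
        (s, e) => Err(format!("invalid transition: {:?} in state {:?}", e, s)),
    }
}
```

User A (the caller) moves Stable -> HaveLocalOffer -> Stable, while User B (the callee) moves Stable -> HaveRemoteOffer -> Stable. Rejecting out-of-order events, such as an answer arriving while stable, can help surface signaling bugs early.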

Initializing WebRTC Components

Now, let's integrate these components into our Rust WebRTC.rs application.

[a] Setting Up the Signaling Server

We will use the `warp` crate to create a simple WebSocket server for signaling. This server will handle signaling messages and relay them between peers.

Add `warp` to your Cargo.toml file under [dependencies]:

```toml
warp = "0.3"
```

Then, set up the signaling server in `src/main.rs`:

```rust
use warp::Filter;

#[tokio::main]
async fn main() {
    // Create the signaling server routes
    let routes = warp::path("signaling")
        .and(warp::ws())
        .map(|ws: warp::ws::Ws| {
            ws.on_upgrade(handle_signaling)
        });

    // Start the signaling server
    warp::serve(routes).run(([127, 0, 0, 1], 3030)).await;
}

// Handle signaling messages
async fn handle_signaling(ws: warp::ws::WebSocket) {
    // WebSocket handling code here...
}
```

[b] Creating the User Interface

Next, we need to create the user interface for our application. For simplicity, we'll use HTML and JavaScript to create a basic UI that allows users to join a session and view video streams.

Create an `index.html` file in a `static` directory of your project:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Rust WebRTC.rs Application</title>
</head>
<body>
  <h1>Rust WebRTC.rs Application</h1>
  <div>
    <input type="text" id="room" placeholder="Enter room name">
    <button id="join">Join</button>
  </div>
  <div id="videos">
    <video id="localVideo" autoplay muted></video>
    <video id="remoteVideo" autoplay></video>
  </div>
  <script src="app.js"></script>
</body>
</html>
```

This HTML file includes a text input for entering a room name, a join button, and two video elements for displaying local and remote video streams.

[c] Handling User Interactions

Create an `app.js` file in the same `static` directory to handle user interactions and WebRTC functionality:

```javascript
let localStream;
let peerConnection;
const signalingServerUrl = "ws://127.0.0.1:3030/signaling";
let signalingSocket;

document.getElementById("join").addEventListener("click", async () => {
  const room = document.getElementById("room").value;
  if (!room) {
    alert("Please enter a room name");
    return;
  }

  // Capture local media before connecting: createPeerConnection() adds
  // these tracks, and an offer may arrive as soon as the socket opens
  localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
  document.getElementById("localVideo").srcObject = localStream;

  signalingSocket = new WebSocket(signalingServerUrl);
  signalingSocket.onopen = () => {
    signalingSocket.send(JSON.stringify({ type: "join", room }));
  };

  signalingSocket.onmessage = async (message) => {
    const data = JSON.parse(message.data);
    switch (data.type) {
      case "offer":
        await handleOffer(data.offer);
        break;
      case "answer":
        await handleAnswer(data.answer);
        break;
      case "candidate":
        await handleCandidate(data.candidate);
        break;
      default:
        break;
    }
  };
});

// Answer an incoming offer
async function handleOffer(offer) {
  peerConnection = createPeerConnection();
  await peerConnection.setRemoteDescription(new RTCSessionDescription(offer));
  const answer = await peerConnection.createAnswer();
  await peerConnection.setLocalDescription(answer);
  signalingSocket.send(JSON.stringify({ type: "answer", answer }));
}

// Forward local ICE candidates over the signaling socket and show the remote stream
function createPeerConnection() {
  const pc = new RTCPeerConnection();
  pc.onicecandidate = (event) => {
    if (event.candidate) {
      signalingSocket.send(JSON.stringify({ type: "candidate", candidate: event.candidate }));
    }
  };
  pc.ontrack = (event) => {
    document.getElementById("remoteVideo").srcObject = event.streams[0];
  };
  localStream.getTracks().forEach(track => pc.addTrack(track, localStream));
  return pc;
}

async function handleAnswer(answer) {
  await peerConnection.setRemoteDescription(new RTCSessionDescription(answer));
}

async function handleCandidate(candidate) {
  await peerConnection.addIceCandidate(new RTCIceCandidate(candidate));
}
```

This JavaScript code handles user interactions, sets up the WebSocket connection for signaling, and manages WebRTC peer connections and media streams.

By defining and integrating these core components, you have created a basic wireframe for your Rust WebRTC.rs application. The next sections will focus on implementing the join screen, controls, participant view, and running the application.

Step 3: Implement Join Screen

In this section, we will implement the join screen for our WebRTC application. The join screen allows users to enter a room name and join a WebRTC session. This involves creating a user interface, handling user inputs, and establishing the WebRTC connection.

Designing the User Interface

The user interface for the join screen consists of an input field for the room name and a button to join the session. We will use HTML to create this UI.

[a] HTML for the Join Screen

Ensure you have the following HTML in your `index.html` file:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Rust WebRTC.rs Application</title>
</head>
<body>
  <h1>Rust WebRTC.rs Application</h1>
  <div>
    <input type="text" id="room" placeholder="Enter room name">
    <button id="join">Join</button>
  </div>
  <div id="videos">
    <video id="localVideo" autoplay muted></video>
    <video id="remoteVideo" autoplay></video>
  </div>
  <script src="app.js"></script>
</body>
</html>
```

Handling User Input

Next, we need to handle the user input and initiate the WebRTC connection when the user clicks the "Join" button. We will use JavaScript to accomplish this.

[b] JavaScript for Handling User Input

Add the following JavaScript code in your `app.js` file:

```javascript
let localStream;
let peerConnection;
const signalingServerUrl = "ws://127.0.0.1:3030/signaling";
let signalingSocket;

document.getElementById("join").addEventListener("click", async () => {
  const room = document.getElementById("room").value;
  if (!room) {
    alert("Please enter a room name");
    return;
  }

  // Capture local media first, so an early offer can be answered with our tracks
  localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
  document.getElementById("localVideo").srcObject = localStream;

  signalingSocket = new WebSocket(signalingServerUrl);
  signalingSocket.onopen = () => {
    signalingSocket.send(JSON.stringify({ type: "join", room }));
  };

  signalingSocket.onmessage = async (message) => {
    const data = JSON.parse(message.data);
    switch (data.type) {
      case "offer":
        await handleOffer(data.offer);
        break;
      case "answer":
        await handleAnswer(data.answer);
        break;
      case "candidate":
        await handleCandidate(data.candidate);
        break;
      default:
        break;
    }
  };
});
```

This code does the following:

- Listens for a click event on the "Join" button.

- Retrieves the room name entered by the user.

- Captures the local media stream and displays it in the local video element.

- Connects to the signaling server via WebSocket and sends a join message for the room.

- Handles incoming signaling messages.

Connecting to the Signaling Server

The signaling server is responsible for facilitating the exchange of signaling messages between peers. In our case, we are using WebSockets to handle signaling.
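The handle_signaling function in the signaling server is left as a placeholder. Its core job is bookkeeping: remember which room each connection joined, and forward every offer, answer, or candidate to the other members of that room. The sketch below models just that bookkeeping with standard-library collections, so it can be tested without a network. The PeerId type, the Rooms struct, and the assumption that the server assigns each WebSocket a numeric id are all hypothetical and not part of warp.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical id the server assigns to each WebSocket connection.
pub type PeerId = u64;

#[derive(Default)]
pub struct Rooms {
    members: HashMap<String, HashSet<PeerId>>, // room name -> peers in it
    room_of: HashMap<PeerId, String>,          // peer -> room it joined
}

impl Rooms {
    /// Handles {"type":"join","room":...}; a peer is in at most one room.
    pub fn join(&mut self, peer: PeerId, room: &str) {
        self.leave(peer);
        self.members.entry(room.to_string()).or_default().insert(peer);
        self.room_of.insert(peer, room.to_string());
    }

    /// Call when a WebSocket closes; empty rooms are removed.
    pub fn leave(&mut self, peer: PeerId) {
        if let Some(room) = self.room_of.remove(&peer) {
            if let Some(set) = self.members.get_mut(&room) {
                set.remove(&peer);
                if set.is_empty() {
                    self.members.remove(&room);
                }
            }
        }
    }

    /// Peers that should receive an offer/answer/candidate sent by `sender`.
    pub fn relay_targets(&self, sender: PeerId) -> Vec<PeerId> {
        let mut targets: Vec<PeerId> = self
            .room_of
            .get(&sender)
            .and_then(|room| self.members.get(room))
            .map(|set| set.iter().copied().filter(|&p| p != sender).collect())
            .unwrap_or_default();
        targets.sort_unstable(); // deterministic order for forwarding and tests
        targets
    }
}
```

In a warp server you would typically share Rooms behind something like Arc<Mutex<Rooms>> and keep a per-connection sender channel keyed by PeerId. handle_signaling could then forward each incoming message to every id returned by relay_targets. That wiring is not shown here.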

[c] WebSocket Signaling Server Setup

Ensure you have the following code in your `src/main.rs` for the signaling server setup:

```rust
use warp::Filter;

#[tokio::main]
async fn main() {
    // Create the signaling server routes
    let routes = warp::path("signaling")
        .and(warp::ws())
        .map(|ws: warp::ws::Ws| {
            ws.on_upgrade(handle_signaling)
        });

    // Start the signaling server
    warp::serve(routes).run(([127, 0, 0, 1], 3030)).await;
}

// Handle signaling messages
async fn handle_signaling(ws: warp::ws::WebSocket) {
    // WebSocket handling code here...
}
```

[d] Handling Signaling Messages

In your JavaScript code, handle the signaling messages to establish the WebRTC connection:

```javascript
// Answer an incoming offer
async function handleOffer(offer) {
  peerConnection = createPeerConnection();
  await peerConnection.setRemoteDescription(new RTCSessionDescription(offer));
  const answer = await peerConnection.createAnswer();
  await peerConnection.setLocalDescription(answer);
  signalingSocket.send(JSON.stringify({ type: "answer", answer }));
}

// Forward local ICE candidates over the signaling socket and show the remote stream
function createPeerConnection() {
  const pc = new RTCPeerConnection();
  pc.onicecandidate = (event) => {
    if (event.candidate) {
      signalingSocket.send(JSON.stringify({ type: "candidate", candidate: event.candidate }));
    }
  };
  pc.ontrack = (event) => {
    document.getElementById("remoteVideo").srcObject = event.streams[0];
  };
  localStream.getTracks().forEach(track => pc.addTrack(track, localStream));
  return pc;
}

async function handleAnswer(answer) {
  await peerConnection.setRemoteDescription(new RTCSessionDescription(answer));
}

async function handleCandidate(candidate) {
  await peerConnection.addIceCandidate(new RTCIceCandidate(candidate));
}
```

This code handles the following:

- Offer Handling: Sets the remote description and creates an answer to establish the connection.

- Peer Connection Creation: Sets up the peer connection, handles ICE candidates, and adds tracks to the connection.

- Answer Handling: Sets the remote description when an answer is received.

- Candidate Handling: Adds ICE candidates to the peer connection.
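These handlers leave one gap. Candidates can arrive over the signaling socket before the offer has been processed. In that case, handleCandidate runs while peerConnection is still undefined, or before a remote description is set, and addIceCandidate rejects. A common remedy is to queue early candidates and apply them once setRemoteDescription has completed. The sketch below expresses that queueing logic in Rust, and the same pattern translates directly to app.js. The CandidateBuffer name and its methods are hypothetical.

```rust
/// Holds remote ICE candidates until a remote description has been applied.
#[derive(Debug, Default)]
pub struct CandidateBuffer {
    remote_description_set: bool,
    pending: Vec<String>,
}

impl CandidateBuffer {
    /// Called for each incoming candidate; returns the candidates that can
    /// be applied (via addIceCandidate) right now.
    pub fn on_candidate(&mut self, candidate: String) -> Vec<String> {
        if self.remote_description_set {
            vec![candidate]
        } else {
            self.pending.push(candidate); // too early: keep it for later
            Vec::new()
        }
    }

    /// Called after setRemoteDescription succeeds; returns the buffered
    /// candidates in arrival order and stops buffering.
    pub fn on_remote_description(&mut self) -> Vec<String> {
        self.remote_description_set = true;
        std::mem::take(&mut self.pending)
    }
}
```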

By implementing the join screen and handling user interactions, you have set up the initial user interface and the core functionality needed to join a WebRTC session. The next steps will involve implementing controls and enhancing the user experience.

Step 4: Implement Controls

In this section, we will implement the controls for our WebRTC application. These controls allow users to start and stop the WebRTC session and manage their media streams. We will add buttons for these actions and handle the corresponding events.

Adding Control Buttons to the User Interface

First, we need to update our HTML to include buttons for starting and stopping the WebRTC session. These buttons will allow users to control their media streams.

[a] Update HTML with Control Buttons

Modify your `index.html` file to add the control buttons:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Rust WebRTC.rs Application</title>
</head>
<body>
  <h1>Rust WebRTC.rs Application</h1>
  <div>
    <input type="text" id="room" placeholder="Enter room name">
    <button id="join">Join</button>
    <button id="start" disabled>Start</button>
    <button id="stop" disabled>Stop</button>
  </div>
  <div id="videos">
    <video id="localVideo" autoplay muted></video>
    <video id="remoteVideo" autoplay></video>
  </div>
  <script src="app.js"></script>
</body>
</html>
```

This HTML adds "Start" and "Stop" buttons, which are initially disabled. These buttons will be enabled once the user joins a room.

Handling User Interactions with Control Buttons

Next, we need to handle the events triggered by these buttons in our JavaScript code. We will implement functions to start and stop the media streams and manage the WebRTC session accordingly.

[b] JavaScript for Handling Control Buttons

Update your `app.js` file to include the event listeners and functions for the control buttons:

```javascript
let localStream;
let peerConnection;
const signalingServerUrl = "ws://127.0.0.1:3030/signaling";
let signalingSocket;

document.getElementById("join").addEventListener("click", async () => {
  const room = document.getElementById("room").value;
  if (!room) {
    alert("Please enter a room name");
    return;
  }

  // Capture local media first, so an early offer can be answered with our tracks
  localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });
  document.getElementById("localVideo").srcObject = localStream;

  signalingSocket = new WebSocket(signalingServerUrl);
  signalingSocket.onopen = () => {
    signalingSocket.send(JSON.stringify({ type: "join", room }));
  };

  signalingSocket.onmessage = async (message) => {
    const data = JSON.parse(message.data);
    switch (data.type) {
      case "offer":
        await handleOffer(data.offer);
        break;
      case "answer":
        await handleAnswer(data.answer);
        break;
      case "candidate":
        await handleCandidate(data.candidate);
        break;
      default:
        break;
    }
  };

  // Enable control buttons
  document.getElementById("start").disabled = false;
  document.getElementById("stop").disabled = false;
});

document.getElementById("start").addEventListener("click", () => {
  startSession();
});

document.getElementById("stop").addEventListener("click", () => {
  stopSession();
});

// Create (or reuse) the peer connection and send an offer
async function startSession() {
  if (!peerConnection) {
    peerConnection = createPeerConnection();
  }
  const offer = await peerConnection.createOffer();
  await peerConnection.setLocalDescription(offer);
  signalingSocket.send(JSON.stringify({ type: "offer", offer }));
}

// Close the connection, release the camera and microphone, and reset the UI
function stopSession() {
  if (peerConnection) {
    peerConnection.close();
    peerConnection = null;
  }
  if (localStream) {
    localStream.getTracks().forEach(track => track.stop());
  }
  document.getElementById("localVideo").srcObject = null;
  document.getElementById("remoteVideo").srcObject = null;
  // Disable control buttons
  document.getElementById("start").disabled = true;
  document.getElementById("stop").disabled = true;
}

async function handleOffer(offer) {
  peerConnection = createPeerConnection();
  await peerConnection.setRemoteDescription(new RTCSessionDescription(offer));
  const answer = await peerConnection.createAnswer();
  await peerConnection.setLocalDescription(answer);
  signalingSocket.send(JSON.stringify({ type: "answer", answer }));
}

function createPeerConnection() {
  const pc = new RTCPeerConnection();
  pc.onicecandidate = (event) => {
    if (event.candidate) {
      signalingSocket.send(JSON.stringify({ type: "candidate", candidate: event.candidate }));
    }
  };
  pc.ontrack = (event) => {
    document.getElementById("remoteVideo").srcObject = event.streams[0];
  };
  localStream.getTracks().forEach(track => pc.addTrack(track, localStream));
  return pc;
}

async function handleAnswer(answer) {
  await peerConnection.setRemoteDescription(new RTCSessionDescription(answer));
}

async function handleCandidate(candidate) {
  await peerConnection.addIceCandidate(new RTCIceCandidate(candidate));
}
```

This JavaScript code includes the following:

- Join Button: Handles joining a room and enables the control buttons.

- Start Button: Initiates the WebRTC session by creating an offer and sending it to the signaling server.

- Stop Button: Stops the WebRTC session, closes the peer connection, and resets the video elements.
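The enabled and disabled states of the buttons are easier to reason about as an explicit state machine. The sketch below shows one possible mapping that is slightly stricter than the code above: it disables Start while a call is in progress and re-enables Join after Stop. The AppState, Click, and Buttons names are hypothetical, and the Rust code only models the UI logic. In app.js, you would set each button's disabled property from the equivalent of buttons_for(state).

```rust
/// UI states of the page (hypothetical model of the app.js flow).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum AppState {
    Idle,    // before Join
    Joined,  // media captured, signaling socket open
    InCall,  // offer sent, connection in progress or active
    Stopped, // Stop clicked
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Click {
    Join,
    Start,
    Stop,
}

/// Which buttons are enabled in each state.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Buttons {
    pub join: bool,
    pub start: bool,
    pub stop: bool,
}

pub fn buttons_for(state: AppState) -> Buttons {
    match state {
        AppState::Idle | AppState::Stopped => Buttons { join: true, start: false, stop: false },
        AppState::Joined => Buttons { join: false, start: true, stop: true },
        AppState::InCall => Buttons { join: false, start: false, stop: true },
    }
}

/// Every enabled button has a transition; clicks on disabled buttons are ignored.
pub fn on_click(state: AppState, click: Click) -> AppState {
    match (state, click) {
        (AppState::Idle | AppState::Stopped, Click::Join) => AppState::Joined,
        (AppState::Joined, Click::Start) => AppState::InCall,
        (AppState::Joined | AppState::InCall, Click::Stop) => AppState::Stopped,
        (s, _) => s,
    }
}
```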

Implementing the Start and Stop Logic

[a] Start Session

The `startSession` function creates a new peer connection (if one does not already exist), creates an offer, sets the local description, and sends the offer to the signaling server.

```javascript
async function startSession() {
  if (!peerConnection) {
    peerConnection = createPeerConnection();
  }
  const offer = await peerConnection.createOffer();
  await peerConnection.setLocalDescription(offer);
  signalingSocket.send(JSON.stringify({ type: "offer", offer }));
}
```

[b] Stop Session

The `stopSession` function closes the peer connection, stops the local camera and microphone tracks so the devices are released, clears the video elements, and disables the control buttons.

```javascript
function stopSession() {
  if (peerConnection) {
    peerConnection.close();
    peerConnection = null;
  }
  // Stopping the tracks turns off the camera and microphone
  if (localStream) {
    localStream.getTracks().forEach(track => track.stop());
  }
  document.getElementById("localVideo").srcObject = null;
  document.getElementById("remoteVideo").srcObject = null;
  document.getElementById("start").disabled = true;
  document.getElementById("stop").disabled = true;
}
```

By implementing these controls, you give users the ability to start and stop WebRTC sessions, which rounds out the functionality and user experience of your Rust WebRTC.rs application. The next section covers implementing the participant view to manage multiple users in a session.

Step 5: Implement Participant View

In this section, we will implement the participant view for our WebRTC application. This view will manage and display multiple participants in a session, handling the video and audio streams for each user.

Setting Up the Participant View

To support multiple participants, we need to update our HTML and JavaScript to dynamically handle and display multiple video streams.
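Before changing the code, consider what multiple participants imply for a peer-to-peer design. In a full mesh, each participant needs its own RTCPeerConnection to every other participant. With n participants, that means n(n-1)/2 connections in total and n-1 per browser, whereas a single peerConnection variable can serve only one remote peer. The illustrative sketch below lists which pairs need a connection, assuming a hypothetical convention in which a newcomer sends the offer to everyone already in the room.

```rust
/// Lists every (offerer, answerer) pair needed for a full-mesh call.
/// Assumed convention: a participant who joins later sends the offer
/// to each participant who was already in the room.
pub fn mesh_pairs(join_order: &[&str]) -> Vec<(String, String)> {
    let mut pairs = Vec::new();
    for (i, newcomer) in join_order.iter().enumerate() {
        // Everyone before position i was already present when `newcomer` joined
        for existing in &join_order[..i] {
            pairs.push((newcomer.to_string(), existing.to_string()));
        }
    }
    pairs
}
```

For example, with alice, bob, and carol joining in that order, bob offers to alice, and carol offers to alice and bob: three connections in total.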

[a] Update HTML for Participant View

Modify your `index.html` file to include a container for multiple video elements:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Rust WebRTC.rs Application</title>
</head>
<body>
  <h1>Rust WebRTC.rs Application</h1>
  <div>
    <input type="text" id="room" placeholder="Enter room name">
    <button id="join">Join</button>
    <button id="start" disabled>Start</button>
    <button id="stop" disabled>Stop</button>
  </div>
  <div id="videos"></div>
  <script src="app.js"></script>
</body>
</html>
```

This HTML update replaces the individual video elements with a single `div` container that will hold multiple video elements dynamically.

Handling Multiple Participants

Next, we will update our JavaScript code to manage multiple participants and their respective video streams.
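The ontrack event fires once per incoming track, so a participant who sends both audio and video triggers it twice. Keying participant tiles by the stream id (event.streams[0].id) rather than the track id groups both tracks into one tile. The sketch below models that bookkeeping in Rust. ParticipantView, Tile, and their methods are hypothetical names, and the kind strings "audio" and "video" mirror MediaStreamTrack.kind.

```rust
use std::collections::BTreeMap;

/// One tile in the participant view: which kinds of tracks its stream carries.
#[derive(Debug, Default, PartialEq)]
pub struct Tile {
    pub has_audio: bool,
    pub has_video: bool,
}

/// Tiles keyed by stream id, so audio and video of one participant share a tile.
#[derive(Debug, Default)]
pub struct ParticipantView {
    tiles: BTreeMap<String, Tile>,
}

impl ParticipantView {
    /// Called once per incoming track; returns true if a new tile was created.
    pub fn on_track(&mut self, stream_id: &str, kind: &str) -> bool {
        let is_new = !self.tiles.contains_key(stream_id);
        let tile = self.tiles.entry(stream_id.to_string()).or_default();
        match kind {
            "audio" => tile.has_audio = true,
            "video" => tile.has_video = true,
            _ => {} // ignore unknown kinds
        }
        is_new
    }

    /// Removes a participant's tile, e.g. when their connection closes.
    pub fn remove(&mut self, stream_id: &str) -> Option<Tile> {
        self.tiles.remove(stream_id)
    }

    pub fn get(&self, stream_id: &str) -> Option<&Tile> {
        self.tiles.get(stream_id)
    }

    pub fn tile_count(&self) -> usize {
        self.tiles.len()
    }
}
```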

[b] JavaScript for Managing Participants

Update your `app.js` file to include logic for handling multiple participants:

JavaScript

1let localStream;

2let peerConnection;

3const signalingServerUrl = "ws://127.0.0.1:3030/signaling";

4let signalingSocket;

5const remoteStreams = {};

6

7document.getElementById("join").addEventListener("click", async () => {

8 const room = document.getElementById("room").value;

9 if (!room) {

10 alert("Please enter a room name");

11 return;

12 }

13

14 signalingSocket = new WebSocket(signalingServerUrl);

15 signalingSocket.onopen = () => {

16 signalingSocket.send(JSON.stringify({ type: "join", room }));

17 };

18

19 signalingSocket.onmessage = async (message) => {

20 const data = JSON.parse(message.data);

21 switch (data.type) {

22 case "offer":

23 await handleOffer(data.offer, data.sender);

24 break;

25 case "answer":

26 await handleAnswer(data.answer);

27 break;

28 case "candidate":

29 await handleCandidate(data.candidate);

30 break;

31 default:

32 break;

33 }

34 };

35

36 localStream = await navigator.mediaDevices.getUserMedia({ video: true, audio: true });

37 addVideoStream("localVideo", localStream, true);

38

39 // Enable control buttons

40 document.getElementById("start").disabled = false;

41 document.getElementById("stop").disabled = false;

42});

43

44document.getElementById("start").addEventListener("click", () => {

45 startSession();

46});

47

48document.getElementById("stop").addEventListener("click", () => {

49 stopSession();

50});

51

52async function startSession() {

53 if (!peerConnection) {

54 peerConnection = createPeerConnection();

55 }

56 const offer = await peerConnection.createOffer();

57 await peerConnection.setLocalDescription(offer);

58 signalingSocket.send(JSON.stringify({ type: "offer", offer }));

59}

60

61function stopSession() {

62 if (peerConnection) {

63 peerConnection.close();

64 peerConnection = null;

65 }

66 localStream.getTracks().forEach(track => track.stop());

67 clearVideoStreams();

68 document.getElementById("start").disabled = true;

69 document.getElementById("stop").disabled = true;

70}

71

72async function handleOffer(offer, sender) {

73 peerConnection = createPeerConnection();

74 await peerConnection.setRemoteDescription(new RTCSessionDescription(offer));

75 const answer = await peerConnection.createAnswer();

76 await peerConnection.setLocalDescription(answer);

77 signalingSocket.send(JSON.stringify({ type: "answer", answer }));

78}

79

80function createPeerConnection() {

81 const pc = new RTCPeerConnection();

82 pc.onicecandidate = (event) => {

83 if (event.candidate) {

84 signalingSocket.send(JSON.stringify({ type: "candidate", candidate: event.candidate }));

85 }

86 };

87 pc.ontrack = (event) => {

88 const stream = event.streams[0];

89 const streamId = event.track.id;

90 if (!remoteStreams[streamId]) {

91 remoteStreams[streamId] = stream;

92 addVideoStream(streamId, stream);

93 }

94 };

95 localStream.getTracks().forEach(track => pc.addTrack(track, localStream));

96 return pc;

97}

98

99async function handleAnswer(answer) {

100 await peerConnection.setRemoteDescription(new RTCSessionDescription(answer));

101}

102

103async function handleCandidate(candidate) {

104 await peerConnection.addIceCandidate(new RTCIceCandidate(candidate));

105}

106

107function addVideoStream(id, stream, isLocal = false) {

108 const videoContainer = document.getElementById("videos");

109 const videoElement = document.createElement("video");

110 videoElement.id = id;

111 videoElement.srcObject = stream;

112 videoElement.autoplay = true;

113 if (isLocal) {

114 videoElement.muted = true;

115 }

116 videoContainer.appendChild(videoElement);

117}

118

119function clearVideoStreams() {

120 const videoContainer = document.getElementById("videos");

121 videoContainer.innerHTML = "";

122 Object.keys(remoteStreams).forEach(streamId => {

123 remoteStreams[streamId].getTracks().forEach(track => track.stop());

124 });

125 remoteStreams = {};

126}

This JavaScript code includes the following updates:

- Remote Streams Management: A remoteStreams object manages multiple remote streams.
- Handling Multiple Tracks: The ontrack event handler now creates a new video element for each remote stream and adds it to the DOM.
- Dynamic Video Elements: The addVideoStream function dynamically creates and adds video elements to the videos container.
- Clear Video Streams: The clearVideoStreams function clears all video elements and stops their tracks when the session is stopped.
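The de-duplication that ontrack performs with remoteStreams can be illustrated outside the browser. The sketch below is a minimal Rust model, assuming hypothetical `RemoteStreamRegistry` and `on_track` names that are not part of app.js or webrtc-rs. Each incoming track reports the id of the stream it belongs to, and a new video element is needed only the first time that stream id is seen; keying by track id instead would produce one element per track, so two per participant (audio and video).

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical model of the remoteStreams bookkeeping in app.js:
/// remote stream id -> ids of the tracks received for that stream.
#[derive(Default)]
pub struct RemoteStreamRegistry {
    streams: HashMap<String, HashSet<String>>,
}

impl RemoteStreamRegistry {
    /// Records one received track. Returns true only the first time its
    /// stream id is seen, i.e. when a new video element should be created.
    pub fn on_track(&mut self, stream_id: &str, track_id: &str) -> bool {
        let is_new = !self.streams.contains_key(stream_id);
        self.streams
            .entry(stream_id.to_string())
            .or_default()
            .insert(track_id.to_string());
        is_new
    }

    /// Number of remote video elements that should be on screen.
    pub fn tile_count(&self) -> usize {
        self.streams.len()
    }

    /// Mirrors clearVideoStreams(): forget every remote stream.
    pub fn clear(&mut self) {
        self.streams.clear();
    }
}
```

An audio track and a video track from the same participant yield `true` and then `false`, so only one video element is created for that participant.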

Connecting and Managing Participants

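With these changes, our application dynamically creates a video element for each remote stream it receives, and the peer connection setup and signaling handling take care of exchanging each participant's video and audio. Note that app.js still keeps a single RTCPeerConnection, so in a pure peer-to-peer setup it connects to one remote participant at a time; a full mesh with three or more participants needs one RTCPeerConnection per remote participant, keyed by an identifier such as the sender value passed to handleOffer.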
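In a peer-to-peer mesh, a newcomer has to know who is already in the room so it can open one connection to each of them, and the number of connections grows quickly with room size. A minimal sketch of that server-side bookkeeping, assuming hypothetical `Rooms`, `join`, `leave` and `mesh_connections` names and string participant ids (the signaling handler in this article is a placeholder and defines none of these):

```rust
use std::collections::{BTreeSet, HashMap};

/// Hypothetical room registry for a signaling server:
/// room name -> ids of the participants currently in it.
#[derive(Default)]
pub struct Rooms {
    rooms: HashMap<String, BTreeSet<String>>,
}

impl Rooms {
    /// Adds `peer` to `room` and returns the participants already there.
    /// In a mesh, the newcomer would open one RTCPeerConnection to each.
    pub fn join(&mut self, room: &str, peer: &str) -> Vec<String> {
        let members = self.rooms.entry(room.to_string()).or_default();
        let existing: Vec<String> = members.iter().cloned().collect();
        members.insert(peer.to_string());
        existing
    }

    /// Removes `peer` from `room`, dropping the room once it is empty.
    pub fn leave(&mut self, room: &str, peer: &str) {
        if let Some(members) = self.rooms.get_mut(room) {
            members.remove(peer);
            if members.is_empty() {
                self.rooms.remove(room);
            }
        }
    }

    /// Peer connections a full mesh of this room needs: n(n-1)/2.
    pub fn mesh_connections(&self, room: &str) -> usize {
        let n = self.rooms.get(room).map_or(0, |members| members.len());
        n * n.saturating_sub(1) / 2
    }
}
```

With three participants the mesh already needs three connections, and each browser encodes and uploads its stream twice, which is why larger rooms usually move to a media server instead of a mesh.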

By implementing the participant view, you have enhanced the functionality of your WebRTC application to support multiple users in a session. The next step will cover running the application and troubleshooting common issues.

Step 6: Run Your Code Now

In this section, we will go through the steps required to run your Rust WebRTC.rs application. We will also cover troubleshooting common issues you might encounter during the setup and execution process.

Running the Application

[a] Start the Signaling Server

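First, you need to start the signaling server. The signaling server relays messages between peers and is essential for establishing WebRTC connections. Make sure your src/main.rs includes the signaling server setup:

```rust
// Requires the warp and tokio crates in Cargo.toml; #[tokio::main] needs
// tokio's "macros" and "rt-multi-thread" features (or features = ["full"]).
use warp::Filter;

#[tokio::main]
async fn main() {
    // Create the signaling server route: /signaling, upgraded to a WebSocket
    let routes = warp::path("signaling")
        .and(warp::ws())
        .map(|ws: warp::ws::Ws| {
            // Pass each upgraded connection to handle_signaling
            ws.on_upgrade(handle_signaling)
        });

    // Start the signaling server on 127.0.0.1:3030
    warp::serve(routes).run(([127, 0, 0, 1], 3030)).await;
}

// Handle signaling messages for one WebSocket connection
async fn handle_signaling(ws: warp::ws::WebSocket) {
    // WebSocket handling code here...
    // Placeholder: for app.js to work, this must relay the "join", "offer",
    // "answer" and "candidate" messages between clients in the same room.
    // As written, the socket is dropped (closed) when this function returns.
}
```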
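The handle_signaling body above is left as a placeholder. Its core job is to forward each text message from one client, unchanged, to the other clients in the same room. The following is a minimal sketch of that fan-out using only the standard library, assuming each connected client is represented by a hypothetical `Client` holding a `std::sync::mpsc::Sender<String>`; in the warp server this role could be played, for example, by a tokio channel feeding each WebSocket's sending half. `Relay`, `connect`, `disconnect` and `forward` are illustrative names, not warp APIs.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{self, Receiver, Sender};

/// Hypothetical connected client: the room it joined and its outbound queue.
pub struct Client {
    pub room: String,
    pub tx: Sender<String>,
}

/// Hypothetical relay state shared by all connections: client id -> client.
#[derive(Default)]
pub struct Relay {
    clients: HashMap<u64, Client>,
}

impl Relay {
    /// Registers a client after its "join" message and returns the receiver
    /// that the connection's writer would drain into the WebSocket.
    pub fn connect(&mut self, id: u64, room: &str) -> Receiver<String> {
        let (tx, rx) = mpsc::channel();
        self.clients.insert(id, Client { room: room.to_string(), tx });
        rx
    }

    /// Removes a client when its socket closes.
    pub fn disconnect(&mut self, id: u64) {
        self.clients.remove(&id);
    }

    /// Forwards a signaling message (offer, answer or candidate JSON text)
    /// to every other client in the sender's room.
    /// Returns the number of clients it was delivered to.
    pub fn forward(&self, from: u64, msg: &str) -> usize {
        let room = match self.clients.get(&from) {
            Some(client) => &client.room,
            None => return 0,
        };
        let mut delivered = 0;
        for (id, client) in &self.clients {
            if *id != from && client.room == *room && client.tx.send(msg.to_string()).is_ok() {
                delivered += 1;
            }
        }
        delivered
    }
}
```

Because the relay never inspects the payload, the browser-side message format stays entirely in app.js; the server only needs to know which room each socket joined.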

Run the signaling server using Cargo:

```sh
cargo run
```

This command compiles and runs your Rust application, starting the signaling server on 127.0.0.1:3030 with its WebSocket endpoint at ws://127.0.0.1:3030/signaling.

[b] Serve the HTML and JavaScript Files

You need to serve the index.html and app.js files to access the user interface. A simple way to do this is to use a static file server, such as http-server (Node.js) or Python's built-in HTTP server. Using Python's built-in HTTP server, navigate to the directory containing your index.html file and run:

```sh
python3 -m http.server 8000
```

This command starts a server that serves files from the current directory at http://localhost:8000.

[c] Open the Application in a Browser

Open your browser and navigate to http://localhost:8000. You should see the user interface of your WebRTC application.

Testing the Application

Join a Room

- Enter a room name in the input field and click the "Join" button.

- Allow the browser to access your camera and microphone. Your local video stream should appear on the screen, and the app connects to the signaling server and enables the "Start" and "Stop" buttons.

Open Another Browser Window

- Open another browser window or tab and navigate to http://localhost:8000.

- Join the same room name as the first participant.

Start the Session

- Once both windows have joined the room, click the "Start" button in one of them to send the offer and initiate the WebRTC session.

- Both participants should see each other's video streams.

Stop the Session

- Click the "Stop" button to end the WebRTC session.

- This should stop the video streams and clear the video elements.

Troubleshooting Common Issues

Here are some common issues you might encounter and how to troubleshoot them:

WebSocket Connection Errors

- Ensure the signaling server is running and accessible at ws://127.0.0.1:3030/signaling.

- Check that signalingServerUrl in your app.js file matches that address.
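Before digging into the browser console, it can help to confirm that something is listening on the signaling port at all. A small diagnostic sketch using only Rust's standard library; the `port_is_open` helper is hypothetical, and a successful TCP connection only shows that the port accepts connections, not that the WebSocket upgrade on /signaling works.

```rust
use std::net::{SocketAddr, TcpStream};
use std::time::Duration;

/// Returns true if a TCP connection to `addr` succeeds within `timeout`.
/// The connection is closed again immediately when the stream is dropped.
pub fn port_is_open(addr: SocketAddr, timeout: Duration) -> bool {
    TcpStream::connect_timeout(&addr, timeout).is_ok()
}
```

For the setup in this article you would call it as `port_is_open("127.0.0.1:3030".parse().unwrap(), Duration::from_secs(1))`; `false` usually means `cargo run` is not running or is bound to a different address.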

No Video or Audio Stream

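- Ensure that your browser has permission to access the camera and microphone.

- getUserMedia is only available in secure contexts, so open the page via http://localhost (or over HTTPS) rather than a plain-HTTP network address.

- Check the console for any errors related to media devices.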

ICE Candidate Errors

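- app.js creates its RTCPeerConnection without any iceServers, so only host candidates are gathered. This works for two tabs on the same machine or the same LAN; peers behind different NATs need a STUN server (and often a TURN server) in the RTCPeerConnection configuration.

- Check the console for errors related to ICE candidates and ensure they are being exchanged properly.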
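A quick way to see which kinds of candidates are being exchanged is to look at the `typ` field of each candidate string (logging `event.candidate.candidate` in onicecandidate shows them). The sketch below assumes the standard candidate attribute layout from the ICE specifications (foundation, component, transport, priority, address, port, then `typ` and the type); the `candidate_type` helper is hypothetical. Seeing only `host` candidates is consistent with no STUN server being configured, `srflx` means a STUN server answered, and `relay` means a TURN server is in use.

```rust
/// Returns the candidate type ("host", "srflx", "prflx" or "relay") from an
/// ICE candidate attribute string, i.e. the token following "typ".
/// Returns None if the string has no "typ" field.
pub fn candidate_type(candidate: &str) -> Option<&str> {
    let mut tokens = candidate.split_whitespace();
    // Advance past the "typ" keyword; `?` returns None if it is missing.
    tokens.by_ref().find(|token| *token == "typ")?;
    // The next token is the candidate type itself.
    tokens.next()
}
```

For example, a candidate string ending in `typ srflx raddr 192.168.1.2 rport 54400` yields `Some("srflx")`.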

Peer Connection Not Established

- Verify that the signaling messages (offer, answer, and ICE candidates) are being sent and received correctly.

- Check the order of signaling events: the offerer sends an offer, the answerer applies it and replies with an answer, and ICE candidates can only be added once the remote description has been set.
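These ordering rules can be summarized as a small state machine. The sketch below is a simplified, hypothetical model of the offer/answer states described by JSEP (rollback and provisional answers are left out), not the webrtc-rs or browser API. It rejects an answer that arrives without an outstanding offer, and it queues ICE candidates that arrive before the remote description; browsers reject addIceCandidate while no remote description is set, so queuing is a common way to tolerate early candidates.

```rust
/// Simplified offer/answer states for one connection (rollback and
/// provisional answers are not modelled).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum SignalingState {
    Stable,
    HaveLocalOffer,
}

/// Hypothetical signaling bookkeeping for one peer connection.
#[derive(Debug)]
pub struct Signaling {
    pub state: SignalingState,
    remote_description_set: bool,
    /// Candidates that arrived before any remote description.
    pending: Vec<String>,
    /// Candidates that would have been passed to addIceCandidate.
    pub applied: Vec<String>,
}

impl Signaling {
    pub fn new() -> Self {
        Signaling {
            state: SignalingState::Stable,
            remote_description_set: false,
            pending: Vec::new(),
            applied: Vec::new(),
        }
    }

    /// Offerer (startSession): createOffer + setLocalDescription.
    pub fn send_offer(&mut self) -> Result<(), &'static str> {
        if self.state != SignalingState::Stable {
            return Err("an offer is already outstanding");
        }
        self.state = SignalingState::HaveLocalOffer;
        Ok(())
    }

    /// Answerer (handleOffer): setRemoteDescription(offer), then
    /// createAnswer + setLocalDescription, which ends back in Stable.
    pub fn receive_offer(&mut self) -> Result<(), &'static str> {
        if self.state != SignalingState::Stable {
            return Err("offer collision: we already sent an offer");
        }
        self.apply_remote_description();
        Ok(())
    }

    /// Offerer (handleAnswer): setRemoteDescription(answer).
    pub fn receive_answer(&mut self) -> Result<(), &'static str> {
        if self.state != SignalingState::HaveLocalOffer {
            return Err("answer received without an outstanding offer");
        }
        self.state = SignalingState::Stable;
        self.apply_remote_description();
        Ok(())
    }

    /// handleCandidate: apply now, or queue until a remote description exists.
    pub fn receive_candidate(&mut self, candidate: &str) {
        if self.remote_description_set {
            self.applied.push(candidate.to_string());
        } else {
            self.pending.push(candidate.to_string());
        }
    }

    fn apply_remote_description(&mut self) {
        self.remote_description_set = true;
        // Flush candidates that arrived too early, in arrival order.
        self.applied.append(&mut self.pending);
    }
}
```

Walking a failing session through this model (which side sent the offer, whether an answer came back, when candidates arrived) often points directly at the missing or out-of-order message.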

By following these steps, you should be able to run and test your Rust WebRTC.rs application successfully. The next section will cover the conclusion and provide answers to frequently asked questions (FAQs) to help you further understand and troubleshoot your WebRTC application.

Conclusion

In this comprehensive guide, we've walked through the process of building a real-time communication application using Rust and the WebRTC.rs library. By following the steps outlined in this article, you have learned how to set up your Rust environment, create a new WebRTC application, handle signaling, manage peer connections, and implement essential features such as join screens, control buttons, and participant views.

Rust WebRTC.rs offers a robust and efficient way to implement real-time communication applications, leveraging Rust's performance and safety features. Whether you are developing a video conferencing tool, a live streaming application, or any other peer-to-peer communication platform, Rust WebRTC.rs provides the tools you need to build a high-performance and reliable solution.


FAQ