Introduction to LLM Voice Model

In the realm of artificial intelligence, the integration of speech and language understanding has seen transformative advancements in recent years. The rise of Large Language Models (LLMs) has not only changed the way machines process text, but also how they generate and interpret human-like speech. At the forefront of this evolution is the LLM voice model—a new paradigm that brings together the power of foundation models with sophisticated speech synthesis and recognition capabilities.

LLM voice models enable machines to understand, generate, and respond with highly natural, emotive, and context-aware speech. Their significance spans AI research, accessibility, real-time human-computer interaction, and the development of intelligent virtual assistants. In 2025, these models are poised to redefine the boundaries of voice-based applications, offering unprecedented realism and interactivity.

What is an LLM Voice Model?

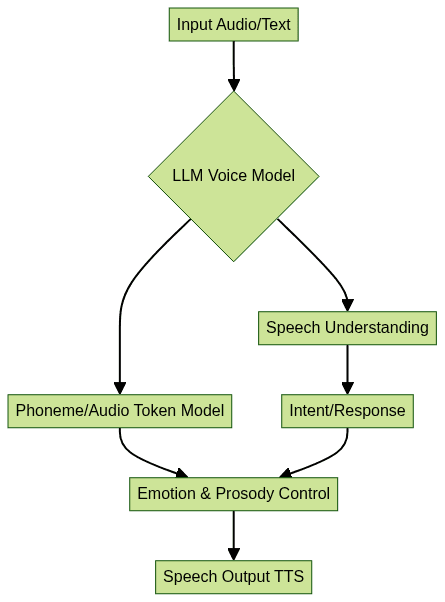

An LLM voice model is an advanced artificial intelligence system that leverages large language models for both the generation and comprehension of human speech. Unlike conventional Text-to-Speech (TTS) systems, which typically rely on hand-crafted rules or separately trained, task-specific neural networks, LLM-based voice models harness deep contextual understanding and multi-modal learning.

These models evolved from traditional TTS pipelines to embrace architectures capable of processing audio, text, and even visual cues. This leap allows for features like emotional control, persona-awareness, and end-to-end streaming. LLM voice models can synthesize speech with nuanced intonation, prosody, and emotion, enabling applications that require human-like interaction.

For developers looking to integrate advanced voice capabilities into their products, leveraging a Voice SDK can provide a robust foundation for building real-time, interactive audio experiences powered by LLM voice models.

Key features of LLM voice models include:

- Emotional and prosody control: Infuse speech with a wide range of emotions and expressive styles.

- Real-time, low-latency streaming: Support seamless, interactive conversations.

- Multi-modal input/output: Combine text, phoneme, and audio token processing for richer outputs.

- Contextual and persona-aware synthesis: Adapt speech to user roles, contexts, or even specific personas.
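The "audio token" idea in the multi-modal bullet is easier to see with a concrete quantizer. Production voice LLMs generally use learned neural audio codecs to turn waveforms into discrete tokens; the minimal sketch below swaps in a much simpler classical technique, 8-bit μ-law companding (the scheme WaveNet used), purely to show how continuous samples become integer token IDs that a language model can predict. The function names are illustrative, not part of any library.

```python
import math
from typing import List

def mu_law_encode(x: float, mu: int = 255) -> int:
    """Map one audio sample in [-1, 1] to an integer token in [0, mu]."""
    x = max(-1.0, min(1.0, x))  # clip out-of-range samples
    # Logarithmic companding: finer resolution for quiet samples than for loud ones
    y = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    return int(round((y + 1) / 2 * mu))

def mu_law_decode(token: int, mu: int = 255) -> float:
    """Invert mu_law_encode, up to quantization error."""
    y = 2 * token / mu - 1
    return math.copysign(((1 + mu) ** abs(y) - 1) / mu, y)

def tokenize(samples: List[float]) -> List[int]:
    """Turn a waveform into a sequence of discrete token IDs."""
    return [mu_law_encode(s) for s in samples]

# 10 ms of a 440 Hz tone at 16 kHz becomes 160 discrete tokens
sr = 16000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sr) for n in range(160)]
tokens = tokenize(tone)
print(tokens[:8])
```

A learned codec compresses far more aggressively than one token per sample, which is why real voice LLMs use them, but the interface is the same: waveform in, integer sequence out, and a decoder that maps the sequence back to audio.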

The shift towards LLM-driven voice models signals a new era in speech AI—one where the boundary between human and machine communication is increasingly blurred.

Key Innovations in LLM Voice Models

Emotional and Prosody Control

Traditional TTS systems struggled to deliver convincing emotional speech. With LLM voice models, deep learning architectures can interpret context and generate speech with fine-grained control over emotion, tone, and prosody. By conditioning output on explicit emotion tokens or context, these models enable expressive, empathetic, and dynamic interactions.
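One common way to realize this kind of conditioning is to prepend control tokens to the model's input. The sketch below assumes a hypothetical `<|emotion:...|>` / `<|persona:...|>` token format and uses whitespace splitting in place of a real tokenizer; actual models define their own control vocabularies, so treat every name and token string here as a placeholder.

```python
from typing import List, Optional

# Hypothetical control vocabulary; real models define their own.
EMOTIONS = {"neutral", "happy", "sad", "angry", "surprised"}

def build_prompt(text: str, emotion: str = "neutral", persona: Optional[str] = None) -> List[str]:
    """Prefix text tokens with control tokens the model is assumed to be trained on."""
    if emotion not in EMOTIONS:
        raise ValueError(f"unsupported emotion: {emotion!r}")
    tokens: List[str] = []
    if persona is not None:
        tokens.append(f"<|persona:{persona}|>")
    tokens.append(f"<|emotion:{emotion}|>")
    tokens.extend(text.split())  # stand-in for a real subword tokenizer
    tokens.append("<|speech_start|>")  # marks where audio tokens should begin
    return tokens

print(build_prompt("I'm so glad you called!", emotion="happy", persona="support_agent"))
```

Because the control tokens are ordinary sequence elements, the same pattern can carry other controls, such as speaking style or speaker identity, provided the model was trained with them.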

For businesses aiming to provide emotionally intelligent customer interactions, integrating a phone call API can help deliver seamless and context-aware voice communications powered by LLM voice models.

Multi-Token Prediction and Speed

A core innovation is the move from next-token prediction (NTP) to multi-token prediction (MTP). Rather than generating one audio token per decoding step, the model predicts several tokens at once, which cuts the number of sequential forward passes and speeds up synthesis.
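To make the speed argument concrete, here is a toy decoding loop rather than a real model: `toy_model` is a hypothetical stand-in for a network that returns k tokens per forward pass. Generating N tokens then takes about ceil(N / k) sequential steps instead of N.

```python
import math
from typing import Callable, List, Tuple

# A "model" takes the tokens generated so far and how many to emit, and returns new tokens.
Model = Callable[[List[int], int], List[int]]

def decode(model: Model, length: int, k: int = 1) -> Tuple[List[int], int]:
    """Generate `length` tokens, asking for up to k per step; returns (tokens, steps)."""
    tokens: List[int] = []
    steps = 0
    while len(tokens) < length:
        n = min(k, length - len(tokens))  # don't overshoot on the last step
        tokens.extend(model(tokens, n))
        steps += 1  # one sequential forward pass
    return tokens, steps

def toy_model(context: List[int], n: int) -> List[int]:
    # Hypothetical stand-in: a real model would predict n codec tokens from context.
    start = len(context)
    return [start + i for i in range(n)]

for k in (1, 8):
    tokens, steps = decode(toy_model, length=75, k=k)
    print(f"k={k}: {len(tokens)} tokens in {steps} steps (ceil = {math.ceil(75 / k)})")
```

In real systems the extra tokens typically come from additional prediction heads, and output quality depends on how well those heads are trained; the loop only captures the step-count arithmetic that makes MTP faster.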

```python
# Example: multi-token prediction for a voice LLM.
# `llm_voice_model`, `VoiceLLM`, and `multi_token_steps` are illustrative
# names, not a specific published API.
from llm_voice_model import VoiceLLM

model = VoiceLLM()
input_text = "Hello, how can I help you today?"
# Generate 8 tokens at each decoding step for faster synthesis
audio_tokens = model.generate(input_text, multi_token_steps=8)
```

This technique enables real-time voice interaction, supporting applications like live translation or voice role-play AI. For those building interactive platforms, a Live Streaming API SDK can facilitate scalable, low-latency voice streaming experiences that leverage the speed of LLM voice models.

End-to-End and Streaming Architectures

Modern LLM voice models are designed for end-to-end, full-duplex operation, meaning they can listen and speak at the same time with minimal latency. These architectures fold Automatic Speech Recognition (ASR), Text-to-Speech (TTS), and speech understanding into a single unified pipeline.
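The latency benefit of streaming comes from not waiting for a complete reply before speaking. A minimal sketch of that idea, assuming a cascaded pipeline in which an LLM streams text tokens and a TTS stage consumes them: the text is cut into clause-sized chunks at punctuation, and each chunk is synthesized as soon as it is complete. `fake_tts` is a placeholder, and end-to-end models fuse these stages into one network, but the principle of emitting audio before the full response exists is the same.

```python
from typing import Iterable, Iterator, List

CLAUSE_END = (".", "?", "!", ",")

def chunk_for_tts(text_stream: Iterable[str]) -> Iterator[str]:
    """Group streamed LLM text tokens into clause-sized chunks for TTS."""
    buffer: List[str] = []
    for token in text_stream:
        buffer.append(token)
        if token.endswith(CLAUSE_END):
            yield "".join(buffer).strip()
            buffer.clear()
    if buffer:  # flush trailing text that has no closing punctuation
        yield "".join(buffer).strip()

def fake_tts(chunk: str) -> bytes:
    # Placeholder: a real synthesizer would return audio frames for the chunk.
    return chunk.encode("utf-8")

def speak(text_stream: Iterable[str]) -> Iterator[bytes]:
    """Yield audio for each chunk as soon as it is ready."""
    for chunk in chunk_for_tts(text_stream):
        yield fake_tts(chunk)

llm_tokens = ["Sure", ",", " I", " can", " help", ".", " What", " time", "?"]
for audio in speak(llm_tokens):
    print(audio)
```

Because everything is a lazy generator, the first audio chunk is produced after only two text tokens have arrived. Splitting at commas lowers time-to-first-audio but gives the synthesizer less context for prosody; splitting only at sentence ends is the more conservative choice.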

This end-to-end, full-duplex approach enables natural back-and-forth conversation, which is essential for next-generation virtual assistants and collaborative AI agents. Developers can further enhance their applications by using an embed video calling SDK to add seamless video and audio communication features alongside LLM-powered voice synthesis.

Leading LLM Voice Model Architectures

EmoVoice, VocalNet, LLaMA-Omni2, Voila, SPIRIT LM

A variety of LLM voice model architectures have emerged, each with unique design philosophies and capabilities.

| Model | Unique Features | Emotional TTS | Streaming | Open Source | Persona-Aware |
|---|---|---|---|---|---|
| EmoVoice | Advanced emotional control, prosody tokens | Yes | Yes | No | Yes |
| VocalNet | Multi-modal, integrates ASR and TTS | Yes | Yes | Yes | No |
| LLaMA-Omni2 | Large-scale, multi-language, multi-token support | Yes | Partial | Yes | Yes |
| Voila | Real-time streaming, low-latency | Limited | Yes | No | No |
| SPIRIT LM | Persona-aware, open-source, role-play AI | Yes | Partial | Yes | Yes |

These architectures share a focus on flexibility, scalability, and realism. Some, like VocalNet and LLaMA-Omni2, are available as open-source projects—accelerating community-driven innovation. Others, like EmoVoice and SPIRIT LM, specialize in emotional and persona-aware synthesis, enabling highly engaging interactive experiences.

For those seeking to implement high-quality video and audio communication, leveraging a Video Calling API can complement LLM voice models by providing reliable, scalable conferencing capabilities.

Open Source Ecosystem

The open-source ecosystem is rapidly expanding. Projects like LLM-Voice provide codebases for training and deploying custom voice LLMs:

```python
# Example: loading a pre-trained open-source LLM voice model.
# `llm_voice` and the checkpoint name are illustrative placeholders.
from llm_voice import load_pretrained_model

model = load_pretrained_model("llm-voice-base-2025")
```

Developers can fine-tune these models on domain-specific speech datasets for tailored applications. If you're working in Python, a Python video and audio calling SDK can be integrated to enable robust, cross-platform communication features alongside your LLM voice solutions.

Applications and Use Cases for LLM Voice Models

LLM voice models have unlocked a broad spectrum of applications:

- Virtual assistants: Delivering empathetic, conversational interactions in real time.

- Customer service bots: Handling calls and chats with emotional nuance and contextual awareness.

- Voice role-play AI: Powering interactive games, simulations, and training environments.

- Accessibility: Generating natural speech for screen readers, augmentative communication devices, and accessible interfaces.

- Real-time translation: Bridging language barriers with instant, expressive cross-lingual speech.

- Content creation: Assisting podcasters, YouTubers, and education platforms with lifelike voiceovers and narration.

For enterprises and developers, LLM voice models represent a leap forward in building engaging, personalized, and intelligent voice applications. To accelerate development, consider using a Voice SDK to quickly add advanced voice features to your products.

Challenges and Considerations

Despite their promise, LLM voice models face several challenges:

- Latency: Achieving ultra-low response times for real-time use cases remains technically demanding.

- Data requirements: Training high-quality models requires vast, well-annotated voice datasets across languages and emotions.

- Emotion accuracy: Natural-sounding, contextually appropriate emotion is still a work in progress.

- Cross-lingual performance: Seamless voice generation and understanding across multiple languages is a complex frontier.

- Ethical issues: Risks include deepfake misuse, privacy violations, and bias in voice outputs, necessitating robust safeguards and transparency.
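Two simple numbers help reason about the latency challenge. The real-time factor (RTF) is processing time divided by the duration of audio produced; an RTF below 1 means synthesis outpaces playback. And if a model emits audio as codec tokens at a fixed rate, dividing that rate by the tokens produced per decoding step gives the step rate the decoder must sustain. The timings and the 50 tokens-per-second rate below are assumed example values, not properties of any model named in this article.

```python
def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """Processing time per second of generated audio; below 1.0 keeps up with playback."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds

def required_steps_per_second(token_rate_hz: float, tokens_per_step: int) -> float:
    """Decoding steps per second needed to generate tokens as fast as they play."""
    if tokens_per_step < 1:
        raise ValueError("tokens_per_step must be at least 1")
    return token_rate_hz / tokens_per_step

# Assumed example: 0.6 s of compute produced 2.0 s of speech.
print(real_time_factor(0.6, 2.0))
# Assumed 50 Hz codec: next-token vs. 8-token-per-step decoding.
print(required_steps_per_second(50, 1), required_steps_per_second(50, 8))
```

Under these assumptions, decoding 8 tokens per step means the model needs only 6.25 sequential steps per second instead of 50 to keep pace with playback, which is the arithmetic behind the speed-ups attributed to multi-token prediction.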

Ongoing research and community collaboration are critical to addressing these challenges as the technology matures. For teams aiming to overcome these hurdles, a Voice SDK can provide essential tools and infrastructure for building resilient voice AI applications.

Getting Started: Building Your Own LLM Voice Model

Aspiring developers and researchers can experiment with LLM voice models using a range of open-source libraries and datasets. Notable resources include LLM-Voice, ESPnet, and datasets like LibriTTS, VCTK, and Common Voice.

Here's a simple example of running an LLM voice model:

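```python
# `llm_voice`, `VoiceLLM`, and `load_tokenizer` are illustrative names;
# substitute the equivalents from the library you actually use.
from llm_voice import VoiceLLM, load_tokenizer

model = VoiceLLM.from_pretrained("llm-voice-base-2025")
tokenizer = load_tokenizer("llm-voice-base-2025")

text = "This is an example of LLM-based voice synthesis."
# Condition synthesis on an emotion label
audio = model.synthesize(tokenizer.encode(text), emotion="happy")

# Assumes synthesize() returns complete WAV-encoded bytes
with open("output.wav", "wb") as f:
    f.write(audio)
```

Tips: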

- Fine-tune on your target domain for better results.

- Use diverse datasets to improve emotion and accent coverage.

- Experiment with different persona and emotion tokens for richer synthesis.
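The Getting Started example writes the result of `synthesize()` straight to `output.wav`, which only produces a playable file if that call already returns WAV-encoded bytes. If a model hands back raw samples instead, Python's standard-library `wave` module can add the header. The sketch below assumes mono float samples in [-1, 1] and a 24 kHz sample rate; both are placeholder values, so match them to your model's actual output format.

```python
import io
import math
import struct
import wave
from typing import Iterable

def floats_to_pcm16(samples: Iterable[float]) -> bytes:
    """Convert floats in [-1, 1] to little-endian signed 16-bit PCM."""
    clipped = (max(-1.0, min(1.0, s)) for s in samples)
    return b"".join(struct.pack("<h", int(s * 32767)) for s in clipped)

def pcm16_to_wav(pcm: bytes, sample_rate: int = 24000, channels: int = 1) -> bytes:
    """Wrap raw 16-bit PCM in a WAV container and return the file bytes."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(channels)
        w.setsampwidth(2)  # 2 bytes per sample = 16-bit
        w.setframerate(sample_rate)
        w.writeframes(pcm)
    return buf.getvalue()

# Half a second of a 440 Hz tone standing in for model output
rate = 24000
tone = [0.3 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate // 2)]
wav_bytes = pcm16_to_wav(floats_to_pcm16(tone), sample_rate=rate)
```

Writing `wav_bytes` to `output.wav` in binary mode then yields a file that standard audio players can open. Returning bytes rather than writing to disk keeps the helper usable for streaming responses or uploads as well.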

To get hands-on experience and explore the full potential of LLM voice models, try it for free and start building your own intelligent voice applications today.

Future Directions and Trends

Looking ahead to 2025 and beyond, LLM voice models will continue to evolve:

- Next-gen foundation models will unify text, speech, and vision for seamless multimodal interaction.

- Personalization and context-awareness will enable bespoke, emotionally intelligent voice agents.

- Ethical and privacy-focused designs will ensure responsible deployment at scale.

As hardware gets faster and datasets grow, expect even more lifelike, adaptive, and intelligent voice AI experiences. For those interested in staying at the forefront, integrating a Voice SDK will be key to unlocking new voice-driven possibilities.

Conclusion

LLM voice models are redefining what's possible in speech AI, powering expressive, real-time, and context-aware interactions. As open-source tools and research advance, developers have unprecedented opportunities to build, customize, and deploy next-generation voice applications. The impact of LLM-based voice technology in 2025 will be profound—shaping the way we interact with machines and each other.


FAQ