Introduction to AI Voice Agents

AI voice agents are transforming the way we interact with technology. By leveraging advances in speech recognition, natural language processing, and real-time audio streaming, these agents can understand spoken queries and respond intelligently. In this tutorial, you will learn how to build a production-ready AI voice agent using Python and the VideoSDK AI Agents framework.

What is an AI Voice Agent?

An AI voice agent is a software system that can engage in spoken conversations with users. It listens to audio input, transcribes it to text, processes the text using a language model, generates a response, and then speaks the response back to the user. This creates a seamless, conversational experience.
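To make the loop concrete, here is a minimal, self-contained sketch of one conversational turn: listen, transcribe, generate a reply, and speak it. Every stage is a hypothetical stub (`transcribe`, `generate_reply`, `synthesize`) standing in for a real STT, LLM, or TTS service. It only shows the order in which data moves and is not how VideoSDK implements it.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """Artifacts produced while handling one user utterance."""
    transcript: str
    reply_text: str
    reply_audio: bytes


def transcribe(audio: bytes) -> str:
    # Hypothetical STT stub: pretend the "audio" bytes are UTF-8 text.
    return audio.decode("utf-8")


def generate_reply(text: str) -> str:
    # Hypothetical LLM stub: a canned response based on the transcript.
    if "hello" in text.lower():
        return "Hi there! How can I help?"
    return f"You said: {text}"


def synthesize(text: str) -> bytes:
    # Hypothetical TTS stub: encode the reply text as "audio" bytes.
    return text.encode("utf-8")


def handle_turn(audio: bytes) -> Turn:
    """Run one turn: audio in, transcript, reply text, audio out."""
    transcript = transcribe(audio)
    reply_text = generate_reply(transcript)
    return Turn(transcript, reply_text, synthesize(reply_text))


if __name__ == "__main__":
    turn = handle_turn(b"Hello, agent")
    print(turn.transcript)  # Hello, agent
    print(turn.reply_text)  # Hi there! How can I help?
```

In a real agent each stub is a network call or model inference, which is why latency at every stage matters for a natural conversation.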

Why are AI voice agents important?

AI voice agents are crucial in industries such as customer support, healthcare, education, and smart devices. They automate repetitive tasks, provide instant assistance, and make technology more accessible. Their ability to handle natural language makes them invaluable for improving user engagement and satisfaction.

Core Components of a Voice Agent

A typical AI voice agent consists of:

- Speech-to-Text (STT): Converts spoken words into text.

- Voice Activity Detection (VAD): Detects when the user is speaking.

- Turn Detection: Determines when it's the agent's turn to respond.

- Large Language Model (LLM): Processes the transcribed text and generates a response.

- Text-to-Speech (TTS): Converts the response text back to audio.

For a more detailed explanation of each part, check out the AI voice Agent core components overview.

What You'll Build in This Tutorial

You will build a fully functional AI voice agent using Python and VideoSDK. The agent will handle real-time conversations, using a dedicated plugin for each component. By the end, you'll have a working agent you can test and extend. If you're eager to get started quickly, refer to the Voice Agent Quick Start Guide for a streamlined setup.

Architecture and Core Concepts

High-Level Architecture Overview

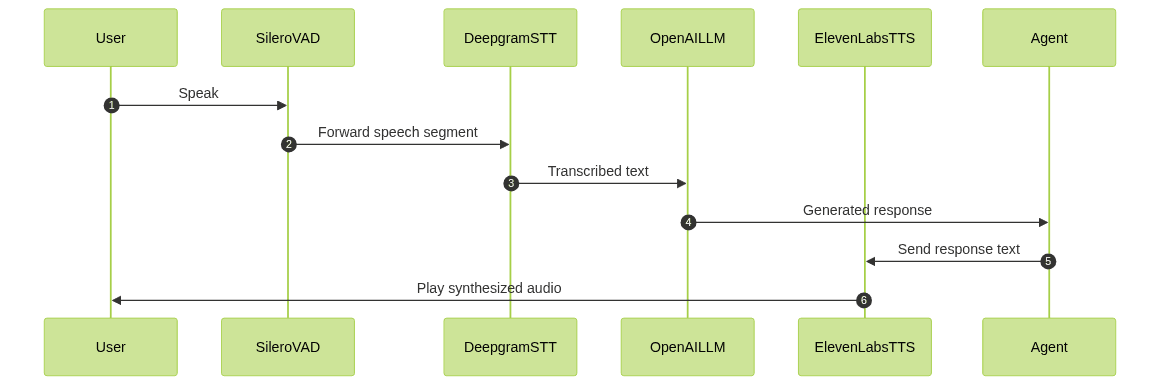

Let's visualize the data flow in our AI voice agent using a UML sequence diagram:

This diagram shows how user speech is processed through various components before a spoken response is delivered. The cascading pipeline in AI voice Agents is central to this process, as it orchestrates the flow between STT, LLM, TTS, VAD, and turn detection.

Understanding Key Concepts in the VideoSDK Framework

- Agent: The core logic that determines how the agent responds. You'll subclass this to define your agent's behavior.

- CascadingPipeline: Chains together STT, VAD, Turn Detection, LLM, and TTS plugins for seamless processing.

- VAD (Voice Activity Detection): Detects when the user starts and stops speaking. Here, we use SileroVAD.

- TurnDetector: Determines conversational turns, ensuring the agent responds at the right moment. Learn more about the Turn detector for AI voice Agents and how it improves conversational flow.

- AgentSession: Manages the lifecycle of the conversation. For details on session management, see AI voice Agent Sessions.

- ConversationFlow: Orchestrates the dialogue between the user and the agent. Explore how conversation flow in AI voice Agents is structured for dynamic interactions.
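A cascading pipeline is easiest to picture as a chain of stages connected by queues, where each stage consumes the previous stage's output. The sketch below uses plain `asyncio` with hypothetical stub stages (`fake_stt`, `fake_llm`, `fake_tts`) to illustrate that hand-off. It is not the `CascadingPipeline` implementation; real pipelines also stream partial results and handle interruptions, which are not modeled here.

```python
import asyncio
from typing import Awaitable, Callable, Optional

Stage = Callable[[str], Awaitable[str]]
DONE = None  # sentinel marking the end of the input stream


async def run_stage(stage: Stage, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Pull items from inbox, transform them with stage, push results to outbox."""
    while True:
        item: Optional[str] = await inbox.get()
        if item is DONE:
            await outbox.put(DONE)  # propagate shutdown to the next stage
            return
        await outbox.put(await stage(item))


async def fake_stt(audio: str) -> str:
    return audio.removeprefix("audio:")  # hypothetical STT stub


async def fake_llm(text: str) -> str:
    return f"reply to '{text}'"  # hypothetical LLM stub


async def fake_tts(text: str) -> str:
    return f"audio:{text}"  # hypothetical TTS stub


async def run_pipeline(utterances: list[str]) -> list[str]:
    stages = [fake_stt, fake_llm, fake_tts]
    # queues[i] feeds stage i; the last queue collects the final output
    queues = [asyncio.Queue() for _ in range(len(stages) + 1)]
    workers = [
        asyncio.create_task(run_stage(stage, queues[i], queues[i + 1]))
        for i, stage in enumerate(stages)
    ]
    for utterance in utterances:
        await queues[0].put(utterance)
    await queues[0].put(DONE)

    outputs = []
    while (item := await queues[-1].get()) is not DONE:
        outputs.append(item)
    await asyncio.gather(*workers)
    return outputs


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(["audio:hello", "audio:what time is it"])))
    # ["audio:reply to 'hello'", "audio:reply to 'what time is it'"]
```

Because each stage runs as its own task, a second utterance can be transcribed while the first is still being answered, which is the main reason to connect stages through queues rather than calling them one after another.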

Setting Up the Development Environment

Before diving into code, let's set up everything you need.

Prerequisites (Python 3.11+, VideoSDK Account)

- Python 3.11+ is required for compatibility with the VideoSDK AI Agents framework.

- VideoSDK Account: Sign up for a free account to access API keys and the dashboard.
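A quick way to confirm the Python requirement (and, once you complete Step 1, that a virtual environment is active) is a small standard-library check. The function name `check_prerequisites` is illustrative; the 3.11 minimum comes from the requirement above.

```python
import sys


def check_prerequisites(min_version: tuple[int, int] = (3, 11)) -> list[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info[:2] < min_version:
        have = ".".join(map(str, sys.version_info[:2]))
        want = ".".join(map(str, min_version))
        problems.append(f"Python {want}+ required, found {have}")
    # Inside a venv, sys.prefix points at the venv directory while
    # sys.base_prefix still points at the base interpreter.
    if sys.prefix == sys.base_prefix:
        problems.append("not running inside a virtual environment")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("WARNING:", problem)
```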

Step 1: Create a Virtual Environment

It's best practice to use a virtual environment to manage dependencies.

```bash
python3.11 -m venv venv
source venv/bin/activate
```

Step 2: Install Required Packages

Install the VideoSDK AI Agents SDK and plugin dependencies:

```bash
pip install videosdk-agents videosdk-plugins-silero videosdk-plugins-turn-detector videosdk-plugins-deepgram videosdk-plugins-openai videosdk-plugins-elevenlabs
```

Step 3: Configure API Keys in a .env file

Create a .env file in your project directory and add your API keys:

```
VIDEOSDK_API_KEY=YOUR_VIDEOSDK_API_KEY
DEEPGRAM_API_KEY=YOUR_DEEPGRAM_API_KEY
OPENAI_API_KEY=YOUR_OPENAI_API_KEY
ELEVENLABS_API_KEY=YOUR_ELEVENLABS_API_KEY
```

Replace the placeholders with your actual keys from the respective dashboards.
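To catch missing or placeholder keys before the agent starts, a small standard-library check can read the .env file and flag problems. This is a simplified parser written for illustration (no quoting, escaping, or `export` support); in practice you might prefer a dedicated library such as `python-dotenv`. The key names are the four listed above, and the helper names `parse_env` and `find_problems` are hypothetical.

```python
from pathlib import Path

REQUIRED_KEYS = (
    "VIDEOSDK_API_KEY",
    "DEEPGRAM_API_KEY",
    "OPENAI_API_KEY",
    "ELEVENLABS_API_KEY",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def find_problems(values: dict[str, str]) -> list[str]:
    """Report keys that are missing, empty, or still set to a YOUR_... placeholder."""
    problems = []
    for key in REQUIRED_KEYS:
        value = values.get(key, "")
        if not value:
            problems.append(f"{key} is missing or empty")
        elif value.startswith("YOUR_"):
            problems.append(f"{key} still has its placeholder value")
    return problems


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.exists():
        for problem in find_problems(parse_env(env_file.read_text())):
            print("WARNING:", problem)
    else:
        print("WARNING: no .env file in the current directory")
```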

Building the AI Voice Agent: A Step-by-Step Guide

Let's walk through the process of building your AI voice agent.

First, here is the complete, runnable code for your agent:

```python
import asyncio

from videosdk.agents import (
    Agent,
    AgentSession,
    CascadingPipeline,
    ConversationFlow,
    JobContext,
    RoomOptions,
    WorkerJob,
)
from videosdk.plugins.deepgram import DeepgramSTT
from videosdk.plugins.elevenlabs import ElevenLabsTTS
from videosdk.plugins.openai import OpenAILLM
from videosdk.plugins.silero import SileroVAD
from videosdk.plugins.turn_detector import TurnDetector, pre_download_model

# Pre-download the Turn Detector model before any session starts
pre_download_model()

# Triple quotes are required because the prompt spans multiple lines
agent_instructions = """You are an AI Voice Agent designed to assist users with a wide range of general inquiries and tasks in a friendly, professional, and efficient manner. Your persona is that of a knowledgeable and approachable digital assistant, always eager to help and provide clear, concise information.

Capabilities:
- Answer general questions about AI voice agents, their features, and use cases.
- Guide users through basic troubleshooting steps for common voice agent issues.
- Provide information about integrating AI voice agents into various platforms and workflows.
- Offer tips on optimizing user experience with AI voice agents.
- Assist with scheduling, reminders, and simple task management if requested.

Constraints and Limitations:
- Do not provide personal opinions or make unsupported claims about AI technology.
- Do not offer legal, medical, or financial advice; always recommend consulting a qualified professional for such matters.
- Do not collect or store any personal or sensitive user data.
- If unsure about an answer, politely acknowledge the limitation and suggest seeking further assistance from official documentation or support channels.
- Always maintain user privacy and adhere to ethical guidelines in all interactions."""


class MyVoiceAgent(Agent):
    def __init__(self):
        super().__init__(instructions=agent_instructions)

    async def on_enter(self):
        await self.session.say("Hello! How can I help?")

    async def on_exit(self):
        await self.session.say("Goodbye!")


async def start_session(context: JobContext):
    # Create agent and conversation flow
    agent = MyVoiceAgent()
    conversation_flow = ConversationFlow(agent)

    # Create pipeline
    pipeline = CascadingPipeline(
        stt=DeepgramSTT(model="nova-2", language="en"),
        llm=OpenAILLM(model="gpt-4o"),
        tts=ElevenLabsTTS(model="eleven_flash_v2_5"),
        vad=SileroVAD(threshold=0.35),
        turn_detector=TurnDetector(threshold=0.8),
    )

    session = AgentSession(
        agent=agent,
        pipeline=pipeline,
        conversation_flow=conversation_flow,
    )

    try:
        await context.connect()
        await session.start()
        # Keep the session running until manually terminated
        await asyncio.Event().wait()
    finally:
        # Clean up resources when done
        await session.close()
        await context.shutdown()


def make_context() -> JobContext:
    room_options = RoomOptions(
        # room_id="YOUR_MEETING_ID",  # Set to join a pre-created room; omit to auto-create
        name="VideoSDK Cascaded Agent",
        playground=True,
    )
    return JobContext(room_options=room_options)


if __name__ == "__main__":
    job = WorkerJob(entrypoint=start_session, jobctx=make_context)
    job.start()
```

Now, let's break down the code step by step.

Step 4.1: Generating a VideoSDK Meeting ID (curl example)

Before running your agent, you'll need a meeting ID. You can generate one using the VideoSDK API:

```bash
curl -X POST \
  -H "Authorization: YOUR_VIDEOSDK_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{"region": "sg001"}' \
  https://api.videosdk.live/v2/rooms
```
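The same request can be sent from Python with only the standard library. This sketch mirrors the curl command: same URL, headers, and `{"region": "sg001"}` body, and it assumes the response JSON carries the room ID under a `roomId` field. The Authorization value is whatever credential you use with curl; the helper names are hypothetical.

```python
import json
import urllib.request

ROOMS_URL = "https://api.videosdk.live/v2/rooms"


def build_room_request(token: str, region: str = "sg001") -> urllib.request.Request:
    """Build the same POST request as the curl command."""
    body = json.dumps({"region": region}).encode("utf-8")
    return urllib.request.Request(
        ROOMS_URL,
        data=body,
        headers={"Authorization": token, "Content-Type": "application/json"},
        method="POST",
    )


def extract_room_id(response_body: str) -> str:
    """Pull the roomId field out of the JSON response."""
    return json.loads(response_body)["roomId"]


def create_room(token: str, region: str = "sg001") -> str:
    """Send the request and return the new room's ID (performs a network call)."""
    with urllib.request.urlopen(build_room_request(token, region), timeout=10) as resp:
        return extract_room_id(resp.read().decode("utf-8"))
```

Calling `create_room(...)` with your credential returns the ID; keeping request construction and response parsing in separate functions lets you check both without touching the network.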

Replace YOUR_VIDEOSDK_API_KEY with your actual key. The response will include a roomId, which you can pass as room_id in RoomOptions to have the agent join that room instead of auto-creating one.

Step 4.2: Creating the Custom Agent Class

The agent's behavior is defined in a custom class that inherits from Agent. Let's look at that part of the code:

```python
class MyVoiceAgent(Agent):
    def __init__(self):
        super().__init__(instructions=agent_instructions)

    async def on_enter(self):
        await self.session.say("Hello! How can I help?")

    async def on_exit(self):
        await self.session.say("Goodbye!")
```

- The agent is initialized with a detailed instruction prompt.

- on_enter and on_exit provide greetings and farewells to the user.
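Long prompts like `agent_instructions` are easy to break when written as one string literal, since a plain `"..."` string cannot span multiple lines. One option, sketched here with a hypothetical helper `build_instructions`, is to assemble the prompt from lists so that capabilities and constraints can be edited independently. The section titles match the prompt in the full code; you would pass the result to `super().__init__(instructions=...)` as before.

```python
def build_instructions(persona: str, capabilities: list[str], constraints: list[str]) -> str:
    """Assemble a system prompt from a persona line plus bulleted sections."""
    sections = [persona.strip()]
    if capabilities:
        sections.append("Capabilities:\n" + "\n".join(f"- {c}" for c in capabilities))
    if constraints:
        sections.append(
            "Constraints and Limitations:\n" + "\n".join(f"- {c}" for c in constraints)
        )
    return "\n\n".join(sections)


agent_instructions = build_instructions(
    persona="You are an AI Voice Agent designed to assist users with general inquiries.",
    capabilities=["Answer general questions about AI voice agents, their features, and use cases."],
    constraints=["Do not collect or store any personal or sensitive user data."],
)
```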

Step 4.3: Defining the Core Pipeline

The pipeline chains together all the plugins for speech, language, and audio processing:

```python
pipeline = CascadingPipeline(
    stt=DeepgramSTT(model="nova-2", language="en"),
    llm=OpenAILLM(model="gpt-4o"),
    tts=ElevenLabsTTS(model="eleven_flash_v2_5"),
    vad=SileroVAD(threshold=0.35),
    turn_detector=TurnDetector(threshold=0.8),
)
```

- STT: Deepgram's Nova-2 for accurate English transcription. For more on integrating Deepgram, see the Deepgram STT Plugin for voice agent.

- LLM: OpenAI's GPT-4o for intelligent responses. Learn about the OpenAI LLM Plugin for voice agent to unlock advanced conversational abilities.

- TTS: ElevenLabs for natural-sounding speech; see the ElevenLabs TTS Plugin for voice agent for more details.

- VAD: SileroVAD detects when the user is speaking.

- TurnDetector: Ensures the agent responds at the right time.
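The two `threshold` arguments are easier to reason about with a toy model. Assuming, as with Silero-style VAD models, that each audio frame receives a speech probability, and that the turn detector scores how likely it is that the user has finished speaking, the sketch below shows how a lower VAD threshold (0.35) marks more frames as speech while a higher turn threshold (0.8) makes the agent wait for stronger evidence before replying. The frame probabilities and the simple `>=` comparisons are illustrative assumptions, not the plugins' documented internals.

```python
def speech_segments(probs: list[float], threshold: float = 0.35) -> list[tuple[int, int]]:
    """Group consecutive frames whose speech probability meets the threshold.

    Returns (start, end) frame indices with end exclusive.
    """
    segments = []
    start = None
    for i, p in enumerate(probs):
        if p >= threshold and start is None:
            start = i  # speech begins
        elif p < threshold and start is not None:
            segments.append((start, i))  # speech ends
            start = None
    if start is not None:
        segments.append((start, len(probs)))  # speech runs to the last frame
    return segments


def should_respond(end_of_turn_prob: float, threshold: float = 0.8) -> bool:
    """Reply only when the model is confident the user has finished."""
    return end_of_turn_prob >= threshold


frames = [0.1, 0.4, 0.9, 0.8, 0.2, 0.3, 0.6, 0.1]
print(speech_segments(frames))       # [(1, 4), (6, 7)]
print(speech_segments(frames, 0.5))  # [(2, 4), (6, 7)]
print(should_respond(0.65), should_respond(0.85))  # False True
```

Production VAD typically adds smoothing and minimum speech/silence durations on top of a raw threshold, so treat this as intuition for tuning rather than a model of SileroVAD's exact behavior.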

Step 4.4: Managing the Session and Startup Logic

Session management ensures the agent joins the meeting, starts the conversation, and handles cleanup:

```python
async def start_session(context: JobContext):
    agent = MyVoiceAgent()
    conversation_flow = ConversationFlow(agent)
    pipeline = CascadingPipeline(
        stt=DeepgramSTT(model="nova-2", language="en"),
        llm=OpenAILLM(model="gpt-4o"),
        tts=ElevenLabsTTS(model="eleven_flash_v2_5"),
        vad=SileroVAD(threshold=0.35),
        turn_detector=TurnDetector(threshold=0.8),
    )
    session = AgentSession(
        agent=agent,
        pipeline=pipeline,
        conversation_flow=conversation_flow,
    )
    try:
        await context.connect()
        await session.start()
        await asyncio.Event().wait()
    finally:
        await session.close()
        await context.shutdown()
```

- The session is started and kept alive until you manually stop it (Ctrl+C).

- Graceful shutdown: the finally block closes the session and shuts down the context, so resources are released even when the session is interrupted.
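Why does `await asyncio.Event().wait()` inside `try`/`finally` give a clean shutdown? When the waiting task is cancelled, `wait()` raises `CancelledError` and the `finally` block still runs. In a plain asyncio program started with `asyncio.run` on Python 3.11+, Ctrl+C cancels the main task in exactly this way; whether `WorkerJob` propagates Ctrl+C identically is an assumption here. The sketch demonstrates the pattern with a hypothetical `FakeSession` in place of the real session and context.

```python
import asyncio


class FakeSession:
    """Hypothetical stand-in that records lifecycle calls."""

    def __init__(self) -> None:
        self.events: list[str] = []

    async def start(self) -> None:
        self.events.append("start")

    async def close(self) -> None:
        self.events.append("close")


async def run_forever(session: FakeSession) -> None:
    try:
        await session.start()
        await asyncio.Event().wait()  # blocks until the task is cancelled
    finally:
        await session.close()  # still runs after cancellation


async def main() -> list[str]:
    session = FakeSession()
    task = asyncio.create_task(run_forever(session))
    await asyncio.sleep(0.01)  # give the session time to start
    task.cancel()  # simulates Ctrl+C cancelling the running task
    try:
        await task
    except asyncio.CancelledError:
        pass
    return session.events


print(asyncio.run(main()))  # ['start', 'close']
```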

The make_context() function sets up the meeting room options, including the playground flag for easy testing:

```python
def make_context() -> JobContext:
    room_options = RoomOptions(
        name="VideoSDK Cascaded Agent",
        playground=True,
    )
    return JobContext(room_options=room_options)
```
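To switch between joining a pre-created room (for example, one created with the request in Step 4.1) and letting the framework create one, you could build the `RoomOptions` keyword arguments from an environment variable. The helper `room_options_kwargs` and the `VIDEOSDK_ROOM_ID` variable name are hypothetical; the keyword names `room_id`, `name`, and `playground` are the ones used in the tutorial's code.

```python
import os
from typing import Any, Mapping, Optional


def room_options_kwargs(env: Optional[Mapping[str, str]] = None) -> dict[str, Any]:
    """Build keyword arguments for RoomOptions.

    If VIDEOSDK_ROOM_ID (a hypothetical variable name) is set, join that room;
    otherwise omit room_id so a room is created automatically.
    """
    env = os.environ if env is None else env
    kwargs: dict[str, Any] = {"name": "VideoSDK Cascaded Agent", "playground": True}
    room_id = env.get("VIDEOSDK_ROOM_ID", "").strip()
    if room_id:
        kwargs["room_id"] = room_id
    return kwargs
```

Inside `make_context()` you would then write `RoomOptions(**room_options_kwargs())`.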

Running and Testing the Agent

Step 5.1: Running the Python Script

Start your agent with:

```bash
python main.py
```

This assumes you saved the code above as main.py. The script will print a "playground" URL in the console, which lets you join the meeting as a user and test the agent in real time.

Step 5.2: Interacting with the Agent in the Playground

- Open the playground URL in your browser.

- Join the meeting room.

- Speak into your microphone; the agent will listen, process your query, and respond aloud.

- To stop the agent, press Ctrl+C in your terminal. This triggers a graceful shutdown.

Advanced Features and Customizations

Extending Functionality with Custom Tools

You can add custom tools (function_tool) to extend your agent's capabilities, such as integrating with external APIs or databases. Define new methods in your agent class and register them as tools.
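Conceptually, a tool is a named function whose description and parameters are shown to the LLM; when the model asks for it, the framework calls the function and feeds the result back into the conversation. The framework-independent sketch below illustrates that idea with a hypothetical `tool` decorator, `describe_tools`, and `dispatch` helper. It is not VideoSDK's `function_tool`; consult the framework documentation for its actual decorator and registration mechanism.

```python
import inspect
import json
from typing import Any, Callable

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register fn under its own name (hypothetical, for illustration)."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_business_hours(day: str) -> str:
    """Return opening hours for a given weekday."""
    hours = {"saturday": "10:00-14:00", "sunday": "closed"}
    return hours.get(day.lower(), "09:00-17:00")


def describe_tools() -> list[dict[str, Any]]:
    """Summaries an LLM could be shown: name, docstring, parameter names."""
    return [
        {
            "name": name,
            "description": inspect.getdoc(fn) or "",
            "parameters": list(inspect.signature(fn).parameters),
        }
        for name, fn in TOOLS.items()
    ]


def dispatch(name: str, arguments_json: str) -> Any:
    """Execute a tool call given the tool name and JSON-encoded arguments."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**json.loads(arguments_json))


print(dispatch("get_business_hours", '{"day": "Sunday"}'))  # closed
```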

Exploring Other Plugins (STT/LLM/TTS options)

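- STT: Swap Deepgram for another supported speech-to-text provider and compare accuracy on your own audio.

- TTS: ElevenLabs offers high-quality voices; Cartesia, Rime, and Deepgram TTS are alternatives worth comparing on cost and latency.

- LLM: Use Google Gemini as an alternative to OpenAI for conversational AI.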

Swap out plugins in the pipeline to experiment with different providers and models.

Troubleshooting Common Issues

API Key and Authentication Errors

- Double-check your .env file for typos.

- Ensure all required API keys are present and valid.

Audio Input/Output Problems

- Verify your microphone and speakers are working.

- Check browser permissions if using the playground.

Dependency and Version Conflicts

- Use Python 3.11+ and install packages in a fresh virtual environment.

- Run pip freeze to check installed versions.
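You can also check the packages from Python itself. This sketch uses `importlib.metadata` from the standard library to report the installed version of each distribution, or `None` if it is missing; the package names are taken from the `pip install` command in Step 2.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional

PACKAGES = (
    "videosdk-agents",
    "videosdk-plugins-silero",
    "videosdk-plugins-turn-detector",
    "videosdk-plugins-deepgram",
    "videosdk-plugins-openai",
    "videosdk-plugins-elevenlabs",
)


def installed_versions(packages: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name:35} {ver or 'NOT INSTALLED'}")
```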

Conclusion

You've built a fully functional, production-ready AI voice agent using Python and VideoSDK. This agent can handle real-time conversations and is easily extensible with custom tools and plugins. Explore the VideoSDK documentation to unlock more advanced features, and consider deploying your agent to serve real users.

Happy building!


FAQ