Build with VideoSDK’s AI Agents and Get $20 Free Balance!

Integrate voice into your apps with VideoSDK's AI Agents. Connect your chosen LLMs & TTS. Build once, deploy across all platforms.

Overview

Hugging Face is a premier open-source platform for artificial intelligence (AI) and machine learning (ML), with a strong focus on natural language processing (NLP). Its ecosystem comprises pre-trained models, user-friendly APIs, and a collaborative hub where developers, researchers, and organisations can build, deploy, and share ML models across modalities. Hugging Face hosts over 100,000 models and powers thousands of production AI systems. Key offerings include open-source libraries, model repositories, tools for building interactive web apps, and secure infrastructure, all aimed at democratising cutting-edge AI.

How It Works

- Models: Access and fine-tune a wide range of pre-trained models for NLP, computer vision, audio, and more, each with detailed model cards.

- Datasets: Utilise the Datasets Library to load, stream, and manage petabyte-scale datasets efficiently, or upload your own data.

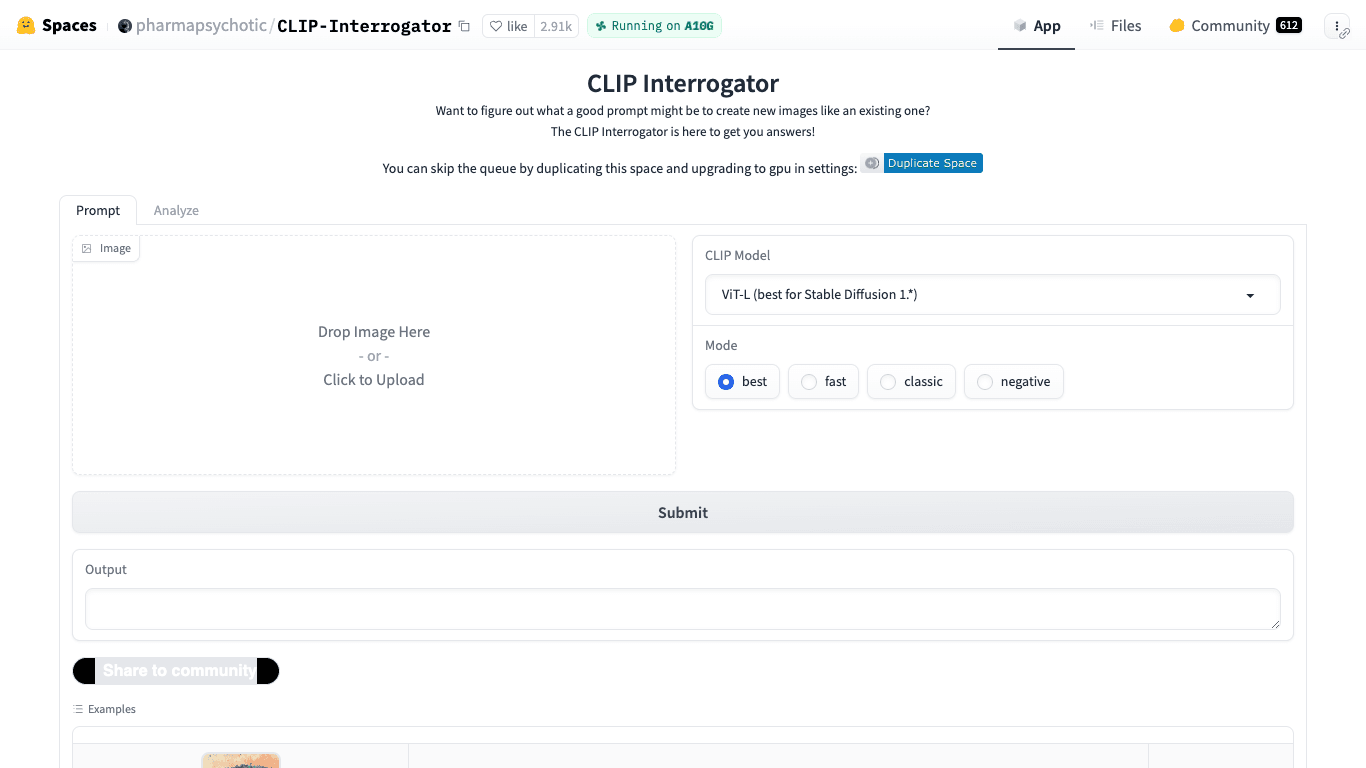

- Spaces: Launch interactive web apps (Gradio, Streamlit, Docker) directly from Git, with built-in version control, GPU support, and collaborative features.

- Transformers Library: Unified APIs for leading models (BERT, LLaMA, Mixtral) supporting PyTorch, TensorFlow, and JAX.

- Hugging Face Hub: Central registry for models, datasets, and Spaces, with deep integrations and a Git-based workflow for repository management.

- Inference Endpoints: Deploy models on secure, dedicated infrastructure, enabling autoscaling production ML deployments.
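As a rough illustration of how the Models, Datasets, and Transformers pieces fit together, the sketch below streams a few records from a Hub dataset and classifies them with a pre-trained model. It assumes the `transformers` and `datasets` packages are installed; the dataset ID `stanfordnlp/imdb` and the model ID are example choices, not recommendations. The Hub-dependent imports are deferred into `main()` so the small `take_texts` helper works on any iterable of records.

```python
from itertools import islice
from typing import Iterable, Mapping


def take_texts(records: Iterable[Mapping], n: int, field: str = "text") -> list[str]:
    """Return the `field` value from the first `n` records of any iterable.

    Behaves the same on a streamed (lazy) Hugging Face dataset or a plain list.
    """
    return [record[field] for record in islice(records, n)]


def main() -> None:
    # Deferred imports: both packages are only needed when talking to the Hub.
    from datasets import load_dataset
    from transformers import pipeline

    # streaming=True iterates over the remote data without downloading it all first.
    stream = load_dataset("stanfordnlp/imdb", split="test", streaming=True)
    texts = take_texts(stream, n=3)

    # Example model ID (assumption); any text-classification checkpoint would do.
    classifier = pipeline(
        "text-classification",
        model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
    )
    # truncation=True keeps long reviews within the model's maximum input length.
    for text, result in zip(texts, classifier(texts, truncation=True)):
        print(f"{result['label']:>8} {result['score']:.3f}  {text[:60]!r}")


if __name__ == "__main__":
    main()
```

Swapping the model ID for a fine-tuned checkpoint from the Hub requires no other code changes, which is the main convenience of the `pipeline` API.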

Use Cases

Customer Service Automation

Automate filtering and classification of customer messages with NLP, enabling targeted responses for urgent or unsatisfied customers.
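A minimal sketch of the filtering step, assuming an off-the-shelf sentiment model is an acceptable proxy for "unsatisfied": messages labelled `NEGATIVE` above a confidence threshold go to an urgent queue. The model ID and the 0.9 threshold are illustrative assumptions. The classifier is passed in as a parameter, so a fine-tuned model (or a stub in tests) can be swapped in.

```python
from typing import Callable, Sequence

# A classifier maps a batch of texts to one {"label": ..., "score": ...} dict per text,
# the shape returned by a transformers text-classification pipeline.
Classifier = Callable[[Sequence[str]], list]


def triage(messages: Sequence[str], classify: Classifier,
           threshold: float = 0.9) -> dict:
    """Split messages into 'urgent' and 'normal' queues by predicted sentiment."""
    queues: dict = {"urgent": [], "normal": []}
    for message, prediction in zip(messages, classify(list(messages))):
        is_unhappy = prediction["label"] == "NEGATIVE" and prediction["score"] >= threshold
        queues["urgent" if is_unhappy else "normal"].append(message)
    return queues


if __name__ == "__main__":
    from transformers import pipeline

    # Example sentiment model (assumption) whose labels are "POSITIVE" / "NEGATIVE".
    sentiment = pipeline(
        "sentiment-analysis",
        model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
    )
    inbox = ["My order arrived broken and nobody replies.", "Thanks, all sorted!"]
    print(triage(inbox, sentiment))
```

A production system would more likely fine-tune a classifier on labelled support tickets; the routing logic stays the same.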

Conversational AI & Chatbots

Build advanced chatbots and voice assistants capable of dynamic, human-like interactions for enhanced customer experiences.
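For the chatbot case, a multi-turn conversation can be kept as a list of role/content messages and sent to a hosted model on each turn. The sketch below assumes the `huggingface_hub` package (a version providing `InferenceClient.chat_completion`), a token available via `HF_TOKEN` or a prior login, and a placeholder model ID that is actually served for your account. `InferenceClient` also accepts the URL of a dedicated Inference Endpoint in place of a model ID. The `complete` callable is injected so the history logic can be exercised without network access.

```python
from typing import Callable

Message = dict  # {"role": ..., "content": ...}


class ChatSession:
    """Keeps the running conversation and asks `complete` for each reply."""

    def __init__(self, complete: Callable[[list], str], system_prompt: str):
        self.complete = complete
        self.history: list = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = self.complete(self.history)
        # Storing the reply lets the model see earlier turns on the next call.
        self.history.append({"role": "assistant", "content": reply})
        return reply


def hf_completer(model: str, max_tokens: int = 256) -> Callable[[list], str]:
    """Build a `complete` function backed by huggingface_hub's InferenceClient."""
    from huggingface_hub import InferenceClient

    client = InferenceClient(model=model)  # picks up HF_TOKEN / saved login if present

    def complete(messages: list) -> str:
        response = client.chat_completion(messages, max_tokens=max_tokens)
        return response.choices[0].message.content

    return complete


if __name__ == "__main__":
    # Placeholder model ID: replace with a chat model available to your account.
    session = ChatSession(hf_completer("HuggingFaceH4/zephyr-7b-beta"),
                          "You are a concise customer-support assistant.")
    print(session.ask("Where can I track my order?"))
```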

Healthcare & Life Sciences AI

Apply generative AI to the analysis of medical and genomics data, supporting research and model development in healthcare.

Features & Benefits

- Extensive Model Hub: Over 100,000 pre-trained models for diverse use cases (summarisation, translation, sentiment analysis, etc.).

- Open-Source Ecosystem: Powerful libraries (Transformers, Datasets) and community collaboration.

- Multi-Modality Support: Work with text, images, video, audio, and 3D data.

- Collaborative Platform: Host and contribute to public models, datasets, and applications.

- Persistent Storage: Add persistent disk storage to Spaces in several size tiers.

- AI Democratisation: Tools and resources accessible to everyone, from beginners to experts.

- Hugging Face Spaces: Easily share and launch interactive ML applications with customisable hardware.

- Inference Providers: Unified access to models via serverless inference partners for reliable deployment.

- Enterprise Solutions: Security, access control, and dedicated support for organisations.

- Flexible Compute Options: On-demand CPUs and GPUs, from basic to high-performance hardware.

Target Audience

- Developers: Building and deploying AI/ML-powered applications.

- AI Researchers: Conducting research in NLP, ML, and computer vision.

- Data Scientists: Focused on data analysis and predictive modelling.

- Organisations: Including enterprises, universities, and non-profits (Apple, Meta, Microsoft, Google, NASA, NVIDIA, Bloomberg).

- Beginners & Experts: Accessible for newcomers yet robust for advanced users.

Pricing

- HF Hub Plan:
  - Unlimited models, datasets, Spaces, organisations, private repos, and access to open-source resources.

- Spaces Hardware:
  - CPU Basic: free (2 vCPU, 16 GB memory)
  - CPU Upgrade: $0.03/hour (8 vCPU, 32 GB memory)
  - GPU: from NVIDIA T4 small ($0.40/hour) to NVIDIA A100 large ($2.50/hour), with many configurations in between.
  - Custom hardware options available on demand.

- Spaces Persistent Storage:
  - Small (20 GB): $5/month
  - Medium (150 GB): $25/month
  - Large (1 TB): $100/month

- Inference Endpoints:
  - Dedicated endpoints starting at $0.033/hour, on secure, autoscaling infrastructure.

- Inference Providers (API):
  - Free users: monthly credits (<$0.10)
  - PRO users: $2.00 of credits per month, then pay-as-you-go (PAYG) at provider rates once credits are used
  - Enterprise: $2.00 of credits per seat, shared across the organisation

- Pro Account Plan: PRO badge, higher free API and AutoTrain usage, and early access to new features.

- Community GPU Grants: Available for side projects.
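To turn the hourly rates above into a monthly estimate, multiply the hardware rate by the hours a Space is running and add any storage tier. The sketch below uses the figures listed in this section, which may change, and assumes an always-on Space at 730 hours per month (24 × 365 / 12); details such as sleep time are not modelled. The dictionary keys are hypothetical labels chosen for this example.

```python
from typing import Optional

# Rates copied from the pricing list above (USD); check current pricing before relying on them.
HARDWARE_PER_HOUR = {
    "cpu-basic": 0.00,
    "cpu-upgrade": 0.03,
    "t4-small": 0.40,
    "a100-large": 2.50,
}
STORAGE_PER_MONTH = {None: 0.0, "small": 5.0, "medium": 25.0, "large": 100.0}
HOURS_PER_MONTH = 24 * 365 / 12  # 730 hours for an always-on Space


def monthly_space_cost(hardware: str, storage: Optional[str] = None,
                       hours: float = HOURS_PER_MONTH) -> float:
    """Estimated monthly cost: hourly hardware rate x hours running + storage tier."""
    return round(HARDWARE_PER_HOUR[hardware] * hours + STORAGE_PER_MONTH[storage], 2)


if __name__ == "__main__":
    print(monthly_space_cost("cpu-upgrade", "small"))    # 0.03 * 730 + 5
    print(monthly_space_cost("t4-small", hours=8 * 22))  # 8 h/day for 22 working days
```

For example, an always-on CPU Upgrade Space with Small storage works out to 0.03 × 730 + 5 = $26.90 per month.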

FAQs

How can I see what dataset was used to train a model?

The model uploader specifies the training dataset. If available on the Hugging Face Hub, datasets may appear as a linked card in the model card’s metadata and on the right side of the model page.
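The same metadata can be read programmatically. A minimal sketch, assuming the `huggingface_hub` package and its `ModelCard.load` helper: the card's YAML `datasets:` field may be missing, a single ID, or a list, so a small helper normalises it. An empty result only means the uploader did not declare a dataset, not that none was used.

```python
from typing import Optional, Union


def normalise_datasets(value: Optional[Union[str, list]]) -> list:
    """Model-card `datasets` metadata may be absent, a single ID, or a list of IDs."""
    if value is None:
        return []
    if isinstance(value, str):
        return [value]
    return list(value)


def training_datasets(repo_id: str) -> list:
    """Read the dataset IDs declared in a model card's YAML metadata."""
    from huggingface_hub import ModelCard

    card = ModelCard.load(repo_id)
    # ModelCardData exposes the `datasets:` metadata key as an attribute.
    return normalise_datasets(card.data.datasets)


if __name__ == "__main__":
    # Example model ID (assumption).
    print(training_datasets("distilbert/distilbert-base-uncased-finetuned-sst-2-english"))
```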

How can I see an example of a model in action?

Most models include inference widgets for in-browser testing. Also, Hugging Face Spaces feature interactive demos, which are typically linked on the model page.

How do I upload an update or new version of a model?

To update a published model, push a new commit to your model’s repository, just like the initial upload. Old versions remain in commit history.
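Besides plain `git push`, a new version can be pushed from Python. The sketch below assumes `huggingface_hub`'s `HfApi.upload_folder` and `HfApi.list_repo_commits`, write access to the repo, and a hypothetical repo ID and folder path. The API object is passed in so the function can be tested with a stub.

```python
def publish_new_version(api, repo_id: str, folder: str, message: str) -> list:
    """Upload a local folder as a new commit and return the repo's commit titles.

    `api` is an HfApi instance, or any object exposing the same two methods.
    """
    # Each upload creates a new commit; earlier versions stay in the history.
    api.upload_folder(folder_path=folder, repo_id=repo_id, commit_message=message)
    return [commit.title for commit in api.list_repo_commits(repo_id)]


if __name__ == "__main__":
    from huggingface_hub import HfApi

    # Hypothetical repo and folder names; requires a token with write access.
    titles = publish_new_version(HfApi(), "your-username/your-model",
                                 "./checkpoint-v2", "Upload v2 weights")
    print(titles[:3])
```

An earlier version can still be loaded by passing its commit hash as `revision=` to `from_pretrained`.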

What if I have a different checkpoint of the model trained on a different dataset?

Upload new checkpoints trained on other datasets as separate model repositories. Use model card metadata, Collections, or card links to reference related models.

What are Hugging Face Generative AI Services (HUGS)?

HUGS are optimised inference microservices built on open-source Hugging Face technology, simplifying and accelerating AI applications with open models through industry-standard APIs.
