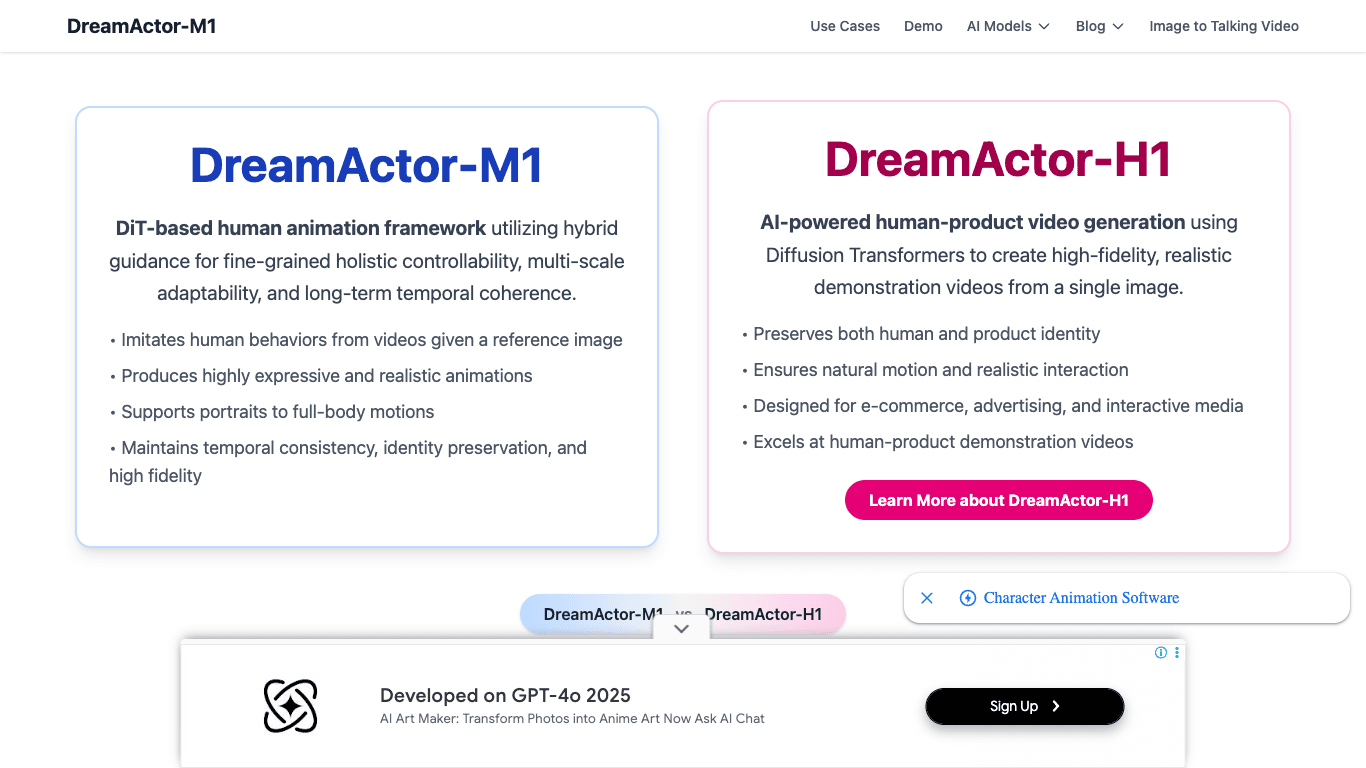

DreamActor-M1

AI Human Animation: Precise Control, Multi-Scale Adaptability, Temporal Coherence.



Overview

DreamActor-M1 is a Diffusion Transformer (DiT) based framework for human image animation. It addresses key limitations of existing image-based human animation methods by offering fine-grained holistic controllability, multi-scale adaptability, and long-term temporal coherence. Given a single reference image, DreamActor-M1 imitates human behaviours from driving videos, producing expressive and realistic animations. The framework handles scales from detailed portraits to full-body motion while aiming for temporal consistency, identity preservation, and high fidelity in the generated videos. Its approach combines hybrid motion guidance, progressive multi-scale adaptation, and enhanced temporal coherence through integrated sequential motion patterns.

How It Works

- Reference Image Processing: The framework interpolates the initial reference image and extracts essential body skeletons and head spheres from the driving video frames.

- Pose Encoding: The extracted skeletal data is encoded into a compact pose latent vector using a dedicated pose encoder.

- Latent Combination: This pose latent vector is combined with a noised video latent, obtained via 3D VAE encoding.

- Facial Expression Encoding: A separate face motion encoder captures implicit facial representations, ensuring detailed facial movements.

- Multi-Scale Training: Reference images sampled from videos provide additional appearance details, enhancing realism and robustness.

- DiT Processing: The core Diffusion Transformer (DiT) model refines the video latent through Face Attention, Self-Attention, and Reference Attention.

- Supervision & Refinement: During training, the denoised video latent is supervised against the original encoded video latent, which pushes the model toward high-quality, consistent outputs.
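The data flow in the steps above (noise the video latent, combine it with the pose latent, denoise, compare against the clean latent) can be sketched in a few lines. This is a toy illustration only, not DreamActor-M1's implementation: the DDPM-style noising formula, the channel-wise concatenation, the mean-squared-error loss, and every name (`add_noise`, `concat_channels`, `passthrough_denoiser`, `training_step`) are assumptions made for the example, and latents are plain nested lists rather than real tensors.

```python
import math
import random
from typing import Callable, List

Latent = List[List[float]]  # toy layout: channels x flattened positions


def add_noise(latent: Latent, noise: Latent, alpha_bar: float) -> Latent:
    """Assumed DDPM-style noising: sqrt(a) * x + sqrt(1 - a) * eps."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [[a * x + b * e for x, e in zip(xc, ec)]
            for xc, ec in zip(latent, noise)]


def concat_channels(video_latent: Latent, pose_latent: Latent) -> Latent:
    """Append the pose channels after the video channels (assumed layout)."""
    if len(video_latent[0]) != len(pose_latent[0]):
        raise ValueError("video and pose latents must share positions")
    return video_latent + pose_latent


def mse(pred: Latent, target: Latent) -> float:
    """Mean squared error over all elements (assumed supervision loss)."""
    diffs = [(p - t) ** 2
             for pc, tc in zip(pred, target)
             for p, t in zip(pc, tc)]
    return sum(diffs) / len(diffs)


def passthrough_denoiser(model_input: Latent, n_video_channels: int) -> Latent:
    """Hypothetical stand-in for the DiT: returns the video channels as-is."""
    return model_input[:n_video_channels]


def training_step(clean_latent: Latent, pose_latent: Latent, alpha_bar: float,
                  denoiser: Callable[[Latent, int], Latent],
                  rng: random.Random) -> float:
    """One toy step: noise, combine with pose, denoise, score against clean."""
    noise = [[rng.gauss(0.0, 1.0) for _ in ch] for ch in clean_latent]
    noised = add_noise(clean_latent, noise, alpha_bar)
    model_input = concat_channels(noised, pose_latent)
    denoised = denoiser(model_input, len(clean_latent))
    return mse(denoised, clean_latent)
```

In a real system the stand-in denoiser would be the DiT with its face, self, and reference attention layers, and the loss would drive its parameter updates; the sketch only shows which tensors meet where.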

Use Cases

Portrait Animation

Create expressive facial animations with precise emotion control for close-up character interactions.

Full Body Motion

Generate seamless full-body movement transfers while preserving identity for dynamic scenes.

Audio Sync

Achieve realistic multi-language lip-sync in animated characters for dialogue and performances.

Filmmaking & Social Content

Transform static images into lively, animated clips for film production and engaging social media posts.

Features & Benefits

- Hybrid motion guidance with implicit facial and skeletal data

- Audio-driven facial animation for precise lip-sync

- Partial motion transfer (face/head/body)

- Shape-aware adaptation with intelligent bone length adjustments

- Multi-scale adaptability from portraits to full-body

- Enhanced temporal coherence for long-term consistency

- High fidelity and identity preservation in results

- Multi-angle support for diverse viewing perspectives

- Supports diverse character and motion styles
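The "bone length adjustment" idea in the list above can be illustrated with a simple skeleton retargeting routine: keep each bone's direction from the driving pose, but rescale it to the length measured on the reference character. This is a minimal sketch under stated assumptions, not the method DreamActor-M1 uses; the joint names, the `BONES` hierarchy, and the functions `bone_lengths` and `retarget` are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Pose = Dict[str, Tuple[float, ...]]
Bone = Tuple[str, str]  # (parent joint, child joint)

# Hypothetical hierarchy, listed parent-first so parents are placed before children.
BONES: List[Bone] = [("hip", "spine"), ("spine", "neck"), ("neck", "head")]


def bone_lengths(pose: Pose, bones: Sequence[Bone]) -> Dict[Bone, float]:
    """Measure the Euclidean length of every bone in a pose."""
    return {(p, c): math.dist(pose[p], pose[c]) for p, c in bones}


def retarget(driving: Pose, target_lengths: Dict[Bone, float],
             bones: Sequence[Bone], root: str) -> Pose:
    """Keep the driving pose's bone directions, apply the target bone lengths."""
    out: Pose = {root: tuple(driving[root])}
    for parent, child in bones:
        direction = [c - p for p, c in zip(driving[parent], driving[child])]
        norm = math.hypot(*direction)
        # A zero-length driving bone has no direction; collapse it onto the parent.
        scale = target_lengths[(parent, child)] / norm if norm > 0 else 0.0
        out[child] = tuple(o + d * scale for o, d in zip(out[parent], direction))
    return out
```

A typical use would be to call `bone_lengths` once on a skeleton estimated from the reference image, then call `retarget` on every driving frame so the motion fits the reference character's proportions.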

Target Audience

- Animators: Advanced tools for realistic character animation

- Content Creators: Video content for social media and marketing

- Filmmakers: Effortless character animation from single images

- Game Developers: High-fidelity in-game character motion

- Educators & VR Developers: Immersive avatars for virtual environments

- AI Enthusiasts: Exploring generative AI and human motion synthesis

Pricing

Information not available.

FAQs

What is DreamActor-M1?

DreamActor-M1 is a Diffusion Transformer (DiT) based framework designed for holistic, expressive, and robust human image animation using hybrid guidance. It can generate realistic videos from a reference image, mimicking human behaviours from driving videos across various scales.

How Does DreamActor-M1 Work?

It uses a DiT model with hybrid control signals, including implicit facial representations, 3D head spheres, and 3D body skeletons, for precise motion guidance. A progressive training strategy handles different scales, and integrated appearance guidance ensures long-term temporal coherence.
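One way to picture the three control signals named above, and how partial motion transfer (face, head, or body only) might select among them, is a small container with a selection helper. The sketch below is purely illustrative: the `ControlSignals` class, its field shapes, the `select_parts` helper, and the choice to represent a disabled signal as `None` are all assumptions, not DreamActor-M1's actual interface.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence


@dataclass(frozen=True)
class ControlSignals:
    """Hypothetical per-frame bundle of the three hybrid control signals."""
    face: Optional[Sequence[float]]                     # implicit facial representation
    head_sphere: Optional[Sequence[float]]              # 3D head sphere parameters
    body_skeleton: Optional[Sequence[Sequence[float]]]  # 3D joint positions


_PARTS = {"face", "head", "body"}


def select_parts(signals: ControlSignals, parts: Iterable[str]) -> ControlSignals:
    """Keep only the requested signals; the others become None (assumed convention)."""
    wanted = set(parts)
    unknown = wanted - _PARTS
    if unknown:
        raise ValueError(f"unknown parts: {sorted(unknown)}")
    return ControlSignals(
        face=signals.face if "face" in wanted else None,
        head_sphere=signals.head_sphere if "head" in wanted else None,
        body_skeleton=signals.body_skeleton if "body" in wanted else None,
    )
```

For example, `select_parts(signals, {"face", "head"})` would pass through facial expression and head motion from the driving video while leaving the body signal unset.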

What are the Key Features of DreamActor-M1?

Key features include fine-grained holistic controllability, multi-scale adaptability (from portrait to full-body), long-term temporal coherence, identity preservation, high fidelity, audio-driven animation support, partial motion transfer, and shape-aware adaptation.

Can I See Examples of DreamActor-M1's Capabilities?

Yes, please check the Video Demonstration and the examples provided in the Features and Comparison sections on our website.
