Build with VideoSDK’s AI Agents and Get $20 Free Balance!

Integrate voice into your apps with VideoSDK's AI Agents. Connect your chosen LLMs & TTS. Build once, deploy across all platforms.

Start Building

Overview

Avian provides AI solutions focused on speed, privacy, and enterprise-grade performance. Through its fast AI inference API and its Generative BI platform, Avian enables enterprises to deploy any HuggingFace LLM with 3-10x faster inference, process large datasets without storing the data, and extract actionable insights efficiently. The service runs on optimised NVIDIA B200 and H200 GPUs hosted on secure Microsoft Azure infrastructure, exposes OpenAI-compatible endpoints, complies with major standards (GDPR, CCPA, SOC 2), and is trusted by industry leaders such as Google, Salesforce, and Boeing.

How It Works

- For AI Inference API:

- Change the `base_url` in your OpenAI client to `https://api.avian.io/v1`.

- Select your preferred open-source model, such as DeepSeek-R1 or one of the Meta Llama models.

- Avian's optimised infrastructure automatically handles model optimisation and scaling for high-performance inference.

- For Generative BI:

- Private LLMs (Crimson MoE 70b, Mirage 34b) are trained securely on Azure.

- Each platform is supported by expert LLM agents.

- Live queries analyse your data, extracting thousands of metrics and dimensions without storing any data.

- Use natural language to explore and analyse data.

- General:

- Runs on NVIDIA H200 SXM and B200 GPUs and uses speculative decoding to deliver industry-leading speed and low latency.

- Offers dedicated GPU instances for deploying custom HuggingFace models.
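Speculative decoding, mentioned above as one source of Avian's speed, is a general technique: a small draft model cheaply proposes several tokens, and the large target model verifies them, keeping the longest prefix it agrees with. The sketch below is a minimal greedy variant using hypothetical toy integer "models" (`toy_target`, `toy_draft`); it is not Avian's implementation. Production systems typically use a sampling-based acceptance rule and verify all draft tokens in one batched forward pass rather than a loop.

```python
from typing import Callable, List

# A "model" here is any function mapping a token sequence to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(target: NextToken, prompt: List[int], n: int) -> List[int]:
    """Baseline: ask the target model for one token at a time."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(target(seq))
    return seq[len(prompt):]


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], n: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding: the draft proposes k tokens, the target verifies them."""
    seq = list(prompt)
    while len(seq) - len(prompt) < n:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target checks each proposed position (batched in real systems).
        for i in range(k):
            expected = target(seq + proposal[:i])
            if expected != proposal[i]:
                # First disagreement: keep the accepted prefix plus the target's own token.
                seq += proposal[:i] + [expected]
                break
        else:
            # All k draft tokens were accepted; the target adds one bonus token.
            seq += proposal + [target(seq + proposal)]
    # The last step may overshoot n, so trim to exactly n new tokens.
    return seq[len(prompt):len(prompt) + n]


def toy_target(seq: List[int]) -> int:
    """Hypothetical stand-in for a large model: a deterministic next-token rule."""
    return (sum(seq) * 7 + len(seq)) % 10


def toy_draft(seq: List[int]) -> int:
    """Hypothetical draft model: agrees with the target except when len(seq) % 3 == 0."""
    guess = toy_target(seq)
    return guess if len(seq) % 3 else (guess + 1) % 10
```

Because every kept token is one the target would have chosen itself, the greedy variant returns exactly what `greedy_decode(toy_target, ...)` returns; the speed-up in practice comes from the target checking up to `k` tokens per step instead of generating one.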

Use Cases

High-Performance AI Applications

Empower real-time chatbots, content generation platforms, and demanding AI services with Avian's ultra-fast inference API.

Business Intelligence & Data Analytics

Leverage Generative BI to extract actionable insights and create in-depth reports by analysing large datasets with natural language queries.

Large Language Model Deployment

Transform any HuggingFace model into a reliable, scalable API endpoint for diverse enterprise and developer applications.

Features & Benefits

- Fastest AI Inference (e.g., 351 tokens/sec on DeepSeek R1)

- Optimised LLM Deployment (3-10x faster inference)

- OpenAI-Compatible API with seamless integration

- No Rate Limits for unrestricted API access

- Lowest Latency (0.27s time to first token on Llama 405B)

- Scalability for billions of requests via NVIDIA GPUs

- Enterprise-Grade Performance & Privacy on SOC 2 Azure infrastructure

- Comprehensive Compliance (GDPR, CCPA, SOC 2)

- Fine-tuning Capabilities for custom AI models

- Native Tool Calling to enhance model abilities and connect APIs

- Generative BI for Enterprise insights and actions

- Rapid Insights (up to 92% faster time-to-insight)

- Extensive Data Source Integration (Spreadsheets, Shopify, Google Analytics, Google Ads, Facebook, etc.)
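Because the endpoints are OpenAI-compatible, a request that uses native tool calling can be expected to follow the OpenAI chat-completions shape: a POST to `{base_url}/chat/completions` with a bearer token, a `messages` list, and an optional `tools` array. The sketch below builds (but does not send) such a request with Python's standard library, using the `https://api.avian.io/v1` base URL given in How It Works. It assumes Avian mirrors OpenAI's request format and `tools` schema; the model identifier, API key, and `get_weather` tool are hypothetical placeholders.

```python
import json
import urllib.request

# Base URL from the "How It Works" steps; the path layout mirrors OpenAI's API (assumption).
AVIAN_BASE_URL = "https://api.avian.io/v1"


def build_chat_request(api_key: str, model: str, user_message: str,
                       tools=None, base_url: str = AVIAN_BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions request without sending it."""
    payload = {
        "model": model,  # placeholder: the exact model id Avian expects is not given here
        "messages": [{"role": "user", "content": user_message}],
    }
    if tools:
        payload["tools"] = tools  # OpenAI-style tool definitions (support assumed)
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical tool described with a JSON Schema, as in OpenAI function calling.
GET_WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}
```

Sending the request is then `urllib.request.urlopen(req)` with a valid key and network access. With the official OpenAI Python SDK, the equivalent change is passing `base_url="https://api.avian.io/v1"` when constructing the `OpenAI(...)` client.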

Target Audience

- Enterprise Organisations:

- Companies needing generative BI platforms and fast AI inference (e.g., Google, Salesforce, Boeing, Bank of America, eBay, Intel, General Motors, Omnicom Group).

- Data Analysts:

- Professionals extracting actionable insights from large datasets.

- Business Executives:

- Leaders making decisions from AI-driven insights.

- IT Professionals:

- Teams deploying and securing AI infrastructure.

- Content Creators:

- Users enhancing marketing by analysing audience data.

- Financial Analysts:

- Experts predicting market trends using AI.

- Developers & AI Engineers:

- Those integrating scalable, fast, OpenAI-compatible APIs.

Pricing

- API Model Pricing (per million tokens):

- Meta Llama 3.1 405B Instruct (Enterprise, ~130 tok/s): £1.50 Input / £1.50 Output

- Meta Llama 3.3 70B Instruct (Professional, ~200 tok/s): £0.45 Input / £0.45 Output

- Meta Llama 3.1 8B Instruct (Starter, ~450 tok/s): £0.10 Input / £0.10 Output

- Dedicated GPU Instances (billed by the second):

- H200 SXM (141GB HBM3e): From £0.00208 per second

- H100 SXM (80GB HBM3): From £0.00139 per second

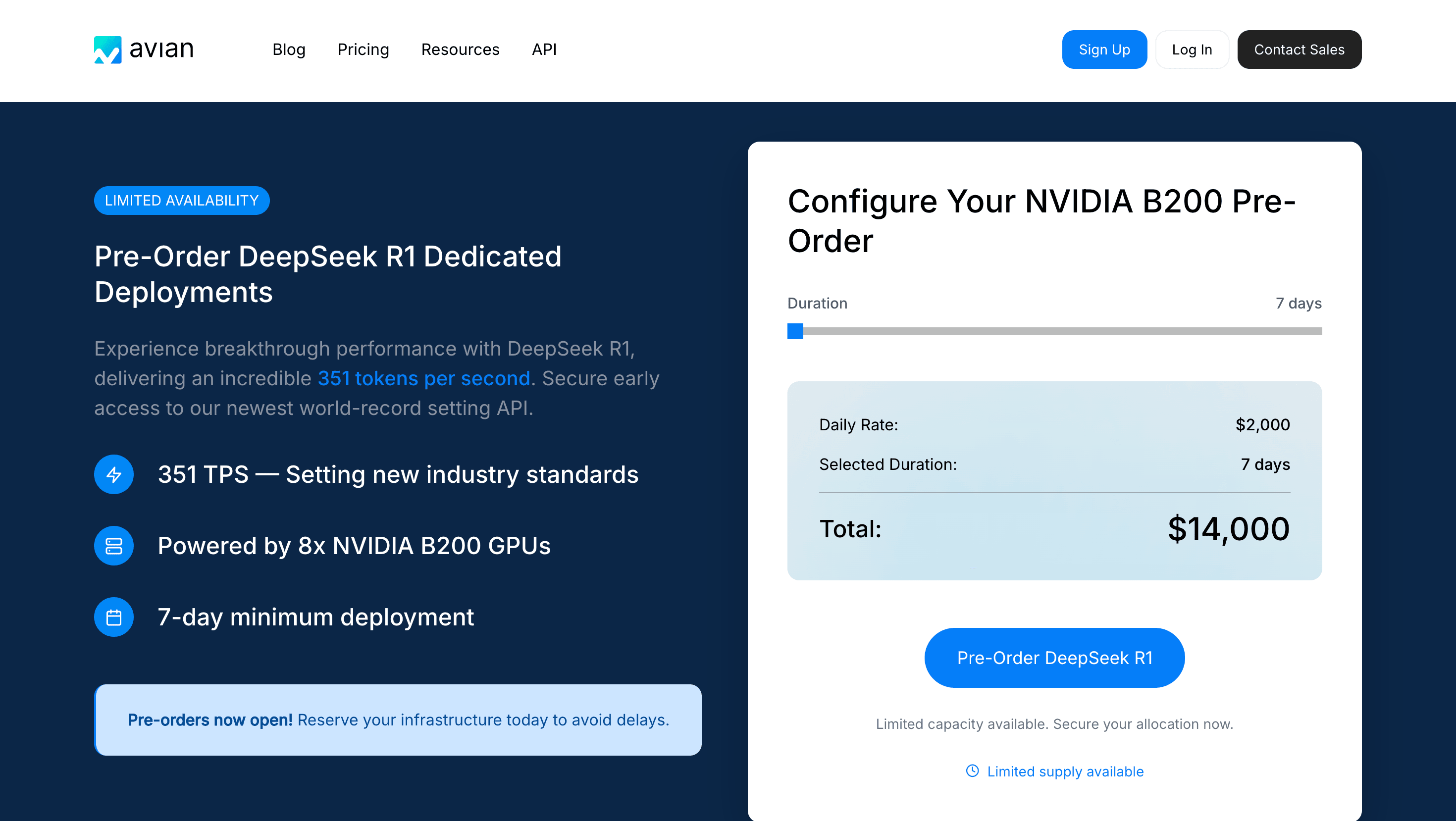

- DeepSeek R1 Dedicated Deployments:

- Powered by 8x NVIDIA B200 GPUs, 351 tokens/sec

- Daily Rate: £2,000; 7-day minimum: £14,000

- Trial Offers:

- £1 free credit for API sign-up

- 7-day free trial for Generative BI (no credit card required)
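The pricing figures above can be turned into a quick cost estimate. The sketch below hard-codes the listed prices, assumes the dedicated-GPU "From" figures are per instance per second (the list states only that instances are billed by the second), and applies the 7-day minimum to DeepSeek R1 dedicated deployments. The function names are hypothetical.

```python
# Per-million-token prices in GBP (input, output), from the pricing list above.
API_PRICES_GBP_PER_MILLION = {
    "Meta Llama 3.1 405B Instruct": (1.50, 1.50),
    "Meta Llama 3.3 70B Instruct": (0.45, 0.45),
    "Meta Llama 3.1 8B Instruct": (0.10, 0.10),
}

# Starting rates for dedicated GPUs, assumed to be GBP per instance per second.
GPU_RATE_GBP_PER_SECOND = {"H200 SXM": 0.00208, "H100 SXM": 0.00139}

DEEPSEEK_DAILY_RATE_GBP = 2000
DEEPSEEK_MIN_DAYS = 7


def api_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in GBP of one API call, priced per million input and output tokens."""
    in_price, out_price = API_PRICES_GBP_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def gpu_cost(gpu: str, seconds: float) -> float:
    """Cost in GBP of running one dedicated instance for the given number of seconds."""
    return GPU_RATE_GBP_PER_SECOND[gpu] * seconds


def deepseek_dedicated_cost(days: int) -> int:
    """Cost in GBP of a DeepSeek R1 dedicated deployment; shorter runs bill the 7-day minimum."""
    return DEEPSEEK_DAILY_RATE_GBP * max(days, DEEPSEEK_MIN_DAYS)
```

For example, a Llama 3.1 405B call with 2,000 input and 500 output tokens costs (2,000 + 500) × £1.50 / 1,000,000 = £0.00375, one hour on an H200 SXM comes to about £7.49 at the listed starting rate, and a 3-day DeepSeek R1 deployment is still billed at the £14,000 minimum.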
