Introduction to OpenAI Realtime Agents

OpenAI realtime agents are advanced artificial intelligence agents designed to operate, learn, and make decisions in dynamic, fast-changing environments. Unlike traditional AI systems that process data in batches or static settings, OpenAI realtime agents interact continuously with their environment, making split-second decisions that adapt to new information as it arrives. These agents are foundational to modern AI applications such as gaming, robotics, autonomous vehicles, and large-scale simulations, where real-time responsiveness and adaptability are critical.

The importance of OpenAI realtime agents is evident in the growing demand for AI-powered systems capable of managing complex, unpredictable scenarios. Their ability to handle real-world uncertainties and perform under stringent latency constraints has enabled breakthroughs in reinforcement learning, multi-agent systems, and self-play environments. As AI continues to evolve, OpenAI realtime agents are setting new standards for intelligence in dynamic domains.

Fundamentals of Realtime Agent Environments

What sets a 'realtime' environment apart is its requirement for immediate, context-sensitive responses. OpenAI realtime agents must continually perceive, reason, and act without significant delay, often in environments where the state keeps changing while the agent is still deciding. This stands in stark contrast to classical agent settings, where decision cycles may be slower and environments are often turn-based or static.

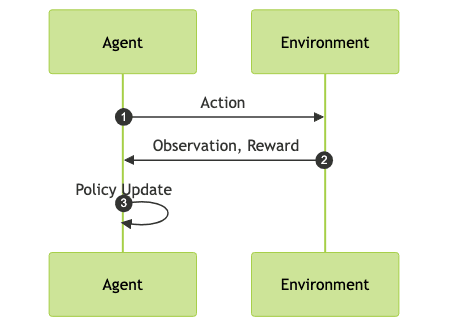

A key underpinning of these environments is the Markov Decision Process (MDP), which provides a mathematical framework for modeling decision-making under uncertainty. In an MDP, an agent perceives a state, takes an action, receives a reward, and transitions to a new state. For OpenAI realtime agents, this loop occurs at a high frequency, with each step potentially impacting the future trajectory of the environment and the agent’s long-term success.
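To make the reward part of this loop concrete, the sketch below computes the discounted return, the quantity an MDP agent typically tries to maximize. The function name `discounted_returns` and the default discount factor `gamma=0.99` are illustrative assumptions, not values taken from any OpenAI project.

```python
from typing import List, Sequence


def discounted_returns(rewards: Sequence[float], gamma: float = 0.99) -> List[float]:
    """Return G_t = r_t + gamma * G_{t+1} for every step t of one episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return of the step after it.
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns
```

A smaller `gamma` makes the agent weigh immediate rewards more heavily, which is one of the knobs that matters when every step has long-term consequences.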

Realtime AI agents also face challenges unique to dynamic settings, such as partial observability, non-stationary dynamics, and the need for robust policy optimization. The balance between exploration and exploitation becomes more delicate, as every action can have immediate and far-reaching consequences. This makes the design and training of OpenAI realtime agents both an art and a science.
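One common way to manage the exploration and exploitation trade-off is an epsilon-greedy rule. The sketch below is a generic illustration, assuming the agent has a list of estimated action values; the name `epsilon_greedy` and its parameters are hypothetical and not tied to any specific OpenAI agent.

```python
import random
from typing import Optional, Sequence


def epsilon_greedy(q_values: Sequence[float], epsilon: float,
                   rng: Optional[random.Random] = None) -> int:
    """Pick a random action with probability epsilon, else the best-valued one."""
    rng = rng or random.Random()
    if rng.random() < epsilon:
        # Explore: choose uniformly among all actions.
        return rng.randrange(len(q_values))
    # Exploit: choose the action with the highest estimated value.
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

In practice `epsilon` is often decayed over time, so the agent explores broadly early on and exploits more as its estimates improve.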

OpenAI’s Approach to Realtime Agents

OpenAI has pioneered the development of sophisticated realtime agents through landmark projects such as OpenAI Five (competitive Dota 2 bots), Hide-and-Seek (emergent tool use in simulated environments), and Neural MMO (massively multi-agent online environments). Each of these showcases how OpenAI realtime agents can achieve coordination, adaptability, and emergent behavior at scale.

A central theme in OpenAI’s approach is the use of self-play and autocurricula. Agents learn by competing or cooperating with copies of themselves or other agents, continuously generating new challenges and driving emergent complexity. This method allows OpenAI realtime agents to surpass human-designed curricula, discovering innovative strategies that would be difficult to engineer manually.
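The self-play idea can be sketched as a learner that repeatedly plays against frozen snapshots of itself. Everything here is a toy assumption made for illustration: `ToyPolicy`, its scalar `skill`, the Elo-style win probability, and the snapshot schedule are hypothetical and do not reproduce how OpenAI Five or Hide-and-Seek were trained.

```python
import copy
import random


class ToyPolicy:
    """Hypothetical policy reduced to a single 'skill' number."""

    def __init__(self, skill=0.0):
        self.skill = skill

    def improve(self, won):
        # Placeholder update; a real agent would take a gradient step here.
        self.skill += 0.1 if won else 0.05


def play_match(a, b, rng):
    """Return True if policy `a` beats `b`; higher skill wins more often."""
    p_win = 1.0 / (1.0 + 10 ** (b.skill - a.skill))  # Elo-style win probability
    return rng.random() < p_win


def self_play(iterations=100, snapshot_every=10, seed=0):
    rng = random.Random(seed)
    learner = ToyPolicy()
    pool = [copy.deepcopy(learner)]  # frozen past versions of the learner
    for i in range(1, iterations + 1):
        opponent = rng.choice(pool)  # sample an opponent from past selves
        won = play_match(learner, opponent, rng)
        learner.improve(won)
        if i % snapshot_every == 0:
            # Freeze the current learner so later versions must beat it too.
            pool.append(copy.deepcopy(learner))
    return learner, pool
```

Because the opponent pool grows with the learner, the difficulty of the task rises automatically, which is the essence of an autocurriculum.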

Scalability is achieved through distributed training infrastructure, where large batch sizes and powerful compute clusters enable agents to process vast numbers of environment interactions per second. By leveraging asynchronous inference and distributed rollout workers, OpenAI realtime agents can efficiently explore the environment, collect experience, and optimize their policies on the fly.
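As a rough, single-machine sketch of the rollout-worker pattern described above, the example below runs several worker threads that push transitions into a shared queue, which a learner-side loop drains. The names `rollout_worker` and `collect_experience` are hypothetical, and the random "transitions" stand in for real environment steps; a production system would use separate processes or machines rather than threads.

```python
import queue
import random
import threading


def rollout_worker(worker_id, steps, out_queue):
    """Generate fake transitions and push them to the shared queue."""
    rng = random.Random(worker_id)
    for step in range(steps):
        # Stand-in for env.step(): a (worker, step, reward) tuple.
        out_queue.put((worker_id, step, rng.random()))
    out_queue.put(None)  # sentinel: this worker is finished


def collect_experience(num_workers=4, steps_per_worker=50):
    """Run workers concurrently and gather everything they produce."""
    experience = queue.Queue()
    workers = [
        threading.Thread(target=rollout_worker, args=(i, steps_per_worker, experience))
        for i in range(num_workers)
    ]
    for worker in workers:
        worker.start()
    transitions, finished = [], 0
    while finished < num_workers:
        item = experience.get()
        if item is None:
            finished += 1
        else:
            transitions.append(item)
    for worker in workers:
        worker.join()
    return transitions
```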

Core Algorithms for OpenAI Realtime Agents

Reinforcement learning (RL) algorithms form the backbone of OpenAI realtime agents. In RL, agents learn to maximize cumulative rewards by interacting with an environment. Several algorithms have proven effective in real-time settings, each with unique trade-offs for scalability, latency, and stability.

The Real-Time Actor-Critic (RTAC) algorithm, proposed by Ramstedt and Pal in their 2019 work on real-time reinforcement learning rather than by OpenAI, targets low-latency settings in which the environment keeps evolving while the agent is still selecting its action. It builds on Soft Actor-Critic, which makes it relevant for realtime agents where response time is paramount. The Soft Actor-Critic (SAC) algorithm itself introduces entropy regularization, encouraging exploration and robustness in environments with complex dynamics.
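Entropy regularization can be illustrated for a small discrete action set: the policy is scored by its expected value plus a bonus proportional to its entropy. This is a simplified sketch of the idea, not a SAC implementation; the function names and the temperature `alpha=0.2` are assumptions chosen for the example.

```python
import math


def softmax(logits):
    """Convert logits to probabilities, shifting by the max for stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; zero-probability actions contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def soft_objective(logits, q_values, alpha=0.2):
    """Expected Q under the policy plus alpha times the policy entropy."""
    probs = softmax(logits)
    expected_q = sum(p * q for p, q in zip(probs, q_values))
    return expected_q + alpha * entropy(probs)
```

With equal Q-values, a uniform policy scores higher than a near-deterministic one, which is how the entropy term keeps the agent exploring when it has no reason to commit.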

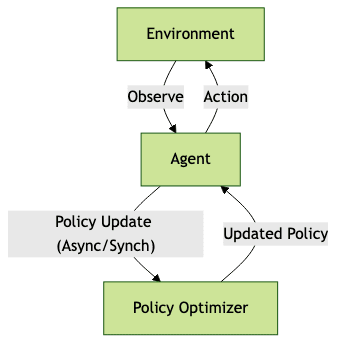

A critical distinction in deployment is between synchronous and asynchronous agents. Synchronous agents wait for all actions to complete before proceeding, ensuring consistency but potentially increasing latency. Asynchronous agents, on the other hand, process environment steps independently, reducing wait times and improving throughput at the cost of increased system complexity.
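The difference can be sketched with `asyncio`, using sleeps as stand-ins for environment latency. In the lockstep version every environment waits for the slowest one at each step; in the independent version each environment advances on its own. The function names and the `delays[e][t]` layout (latency of environment `e` at step `t`) are assumptions made for this illustration.

```python
import asyncio
import time


async def env_step(delay):
    """Stand-in for one environment step that takes `delay` seconds."""
    await asyncio.sleep(delay)


async def run_lockstep(delays):
    """Synchronous style: all environments finish step t before step t+1 starts."""
    num_steps = len(delays[0])
    for t in range(num_steps):
        await asyncio.gather(*(env_step(env_delays[t]) for env_delays in delays))


async def run_independent(delays):
    """Asynchronous style: each environment runs its own loop without waiting."""
    async def run_one(env_delays):
        for delay in env_delays:
            await env_step(delay)

    await asyncio.gather(*(run_one(env_delays) for env_delays in delays))


def wall_time(runner, delays):
    """Measure how long a runner takes for the given latency table."""
    start = time.perf_counter()
    asyncio.run(runner(delays))
    return time.perf_counter() - start
```

With `delays = [[0.1, 0.02], [0.02, 0.1]]`, the lockstep run pays for the slowest environment twice, while the independent run only pays for each environment's own total.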

The agent-environment-policy loop above highlights how OpenAI realtime agents balance inference and learning, with both synchronous and asynchronous pathways.

Basic Agent Policy Loop (Python Example)

Below is a simplified policy loop for an OpenAI realtime agent, illustrating continuous environment interaction and policy optimization:

```python
import numpy as np


class RealtimeAgent:
    def __init__(self, policy):
        # policy: any callable that maps a state to an action
        self.policy = policy

    def act(self, state):
        return self.policy(state)

    def run(self, env, steps):
        state = env.reset()
        for _ in range(steps):
            action = self.act(state)
            next_state, reward, done, _ = env.step(action)
            self.update_policy(state, action, reward, next_state)
            state = next_state
            if done:
                # Start a new episode without leaving the loop
                state = env.reset()

    def update_policy(self, state, action, reward, next_state):
        # Implement policy optimization (e.g., actor-critic update)
        pass
```

Tackling Latency and Scalability in OpenAI Realtime Agents

Latency and scalability are major hurdles for deploying OpenAI realtime agents in production environments. Inference time—the delay between observation and action—directly impacts agent performance in real-time scenarios such as robotics or competitive games. Additionally, as environments grow in complexity, the computational demands for training and inference rise sharply.
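Before optimizing, it helps to measure inference time directly. The helper below is a minimal sketch that times repeated calls to a policy and reports the median, 95th percentile, and maximum latency in milliseconds; `measure_latency` and its return keys are hypothetical names, and `policy` is assumed to be any callable that takes an observation.

```python
import statistics
import time


def measure_latency(policy, observation, trials=200):
    """Time repeated policy calls and summarize the latency distribution."""
    samples_ms = []
    for _ in range(trials):
        start = time.perf_counter()
        policy(observation)
        samples_ms.append((time.perf_counter() - start) * 1000.0)
    samples_ms.sort()
    # Index of the 95th-percentile sample, clamped to the last element.
    p95_index = min(len(samples_ms) - 1, int(0.95 * len(samples_ms)))
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }
```

Tail latency (the 95th percentile and maximum) usually matters more than the median in realtime settings, because a single slow decision can miss the control deadline.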

To address these challenges, OpenAI utilizes asynchronous compute architectures. By decoupling inference and learning, agents can process observations and update policies in parallel, minimizing bottlenecks. Batching—processing multiple environment steps simultaneously—further enhances throughput, especially on GPU clusters.
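Batching can be sketched as a thin wrapper that groups pending observations and calls the policy once per group rather than once per observation. `BatchedPolicy`, `batch_fn`, and `max_batch` are hypothetical names for this illustration; `batch_fn` is assumed to accept a list of observations and return one action per observation.

```python
from typing import Callable, List, Sequence


class BatchedPolicy:
    """Group observations into chunks and run the policy once per chunk."""

    def __init__(self, batch_fn: Callable[[Sequence], List], max_batch: int = 8):
        self.batch_fn = batch_fn
        self.max_batch = max_batch
        self.calls = 0  # number of policy invocations, for inspection

    def act_many(self, observations: Sequence) -> List:
        actions: List = []
        for start in range(0, len(observations), self.max_batch):
            chunk = observations[start:start + self.max_batch]
            actions.extend(self.batch_fn(chunk))
            self.calls += 1
        return actions
```

For example, ten observations with `max_batch=4` result in three policy calls instead of ten. Larger batches raise throughput but also add waiting time, so the batch size is a latency trade-off rather than a free win.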

Distributed inference allows OpenAI realtime agents to operate across multiple machines, scaling both data collection and policy optimization. This approach is vital in multi-agent systems where thousands of agents interact within persistent environments. Real-world deployment scenarios include autonomous vehicle fleets, large-scale simulations, and online gaming, where latency and scalability are both mission-critical.

The combination of asynchronous inference, distributed training, and dynamic batching ensures that OpenAI realtime agents can adapt to evolving environments while maintaining high performance and reliability.

OpenAI Realtime Agents in Games and Simulations

OpenAI realtime agents have demonstrated groundbreaking capabilities in complex simulations and games. Projects such as OpenAI Five (Dota 2) and Neural MMO illustrate how these agents adapt, learn, and thrive in environments characterized by high dimensionality, persistent states, and emergent multi-agent behavior.

In OpenAI Five, realtime agents mastered the intricacies of Dota 2 through self-play, learning to coordinate, strategize, and respond to human opponents in real-time. Neural MMO pushes the boundaries further, creating open-ended, massively multi-agent environments where agents must forage, fight, and cooperate to survive.

This diagram showcases the continuous feedback loop between OpenAI realtime agents and their environment—a hallmark of real-time decision-making and adaptation.

The persistent, evolving nature of these environments challenges agents to develop sophisticated strategies, robust to both adversarial and cooperative dynamics. Through distributed training and emergent behavior, OpenAI's agents reached milestones that earlier game-playing systems had not, such as OpenAI Five defeating the reigning Dota 2 world champions, team OG, in April 2019.

Implementation: Building Your Own OpenAI Realtime Agent

Developers can start building OpenAI realtime agents using popular deep learning frameworks and reinforcement learning libraries. Below is a step-by-step guide for implementing a basic realtime agent in Python, with tips for optimizing performance and minimizing regret.

Step 1: Define the Environment and Agent Interface

```python
import numpy as np


class SimpleEnv:
    """Toy environment with random 4-dimensional states and random rewards."""

    def reset(self):
        # Reset environment state
        return np.zeros(4)

    def step(self, action):
        # Apply action, return next_state, reward, done, info
        next_state = np.random.randn(4)
        reward = np.random.rand()
        done = np.random.rand() > 0.95  # each step ends the episode with ~5% probability
        return next_state, reward, done, {}


class RealtimeAgent:
    def __init__(self, policy):
        self.policy = policy

    def act(self, state):
        return self.policy(state)

    def update_policy(self, state, action, reward, next_state):
        # Placeholder for policy update logic
        pass
```

Step 2: Implement the Main Training Loop

```python
def train_agent(env, agent, episodes=1000):
    for episode in range(episodes):
        state = env.reset()
        done = False
        # Run one episode until the environment signals termination
        while not done:
            action = agent.act(state)
            next_state, reward, done, _ = env.step(action)
            agent.update_policy(state, action, reward, next_state)
            state = next_state
```

Step 3: Tune Performance and Minimize Regret

- Batch Size: Adjust the number of parallel environment steps to maximize throughput.

- Policy Optimization: Use algorithms like RTAC or Soft Actor-Critic for stable, real-time learning.

- Regret Minimization: Regularly evaluate the agent’s policy against a strong baseline, track the resulting regret (the reward lost relative to that baseline), and increase exploration if regret stops shrinking.

- Scalability: For large environments, implement distributed training and asynchronous inference.
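To make the regret item above measurable, the sketch below computes cumulative regret in a simple bandit-style setting where the true mean reward of each action is known. That knowledge is an assumption that only holds in simulation or controlled benchmarks, and the name `cumulative_regret` is hypothetical.

```python
from typing import List, Sequence


def cumulative_regret(arm_means: Sequence[float], chosen_arms: Sequence[int]) -> List[float]:
    """Running sum of (best mean reward - mean reward of the chosen action)."""
    best = max(arm_means)
    total = 0.0
    curve = []
    for arm in chosen_arms:
        total += best - arm_means[arm]
        curve.append(total)
    return curve
```

A curve that flattens over time indicates the agent has settled on near-optimal actions; a curve that keeps growing linearly suggests it is stuck on a suboptimal choice or exploring too much.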

By following these steps and iteratively refining the agent and environment, developers can prototype robust OpenAI realtime agents suitable for a range of real-world applications.

Future Trends for OpenAI Realtime Agents

The future of OpenAI realtime agents lies in larger, more powerful models capable of handling increasingly complex tasks under real-world constraints. Advances in asynchronous inference, distributed policy optimization, and emergent multi-agent cooperation will drive the next generation of real-time AI systems.

Research challenges remain, including improving sample efficiency, reducing training times, and scaling to even larger environments. OpenAI and the broader AI community are actively exploring new algorithms, architectures, and deployment strategies to realize the full potential of realtime agents in dynamic, unpredictable settings.

Open problems such as robust exploration, safety, and seamless integration with human collaborators offer fertile ground for innovation and impact.

Conclusion

OpenAI realtime agents represent a paradigm shift in AI, enabling systems to learn, adapt, and act in dynamic environments with unprecedented speed and intelligence. From games and simulations to real-world deployment, these agents are pushing the frontiers of reinforcement learning, emergent behavior, and scalable AI. As technology advances, OpenAI realtime agents will continue to shape the future of intelligent systems across industries.
